Multimodal Graph-of-Thoughts: How Text, Images, and Graphs Lead to Better Reasoning

Brad Nikkel

There are many ways to ask Large Language Models (LLMs) questions. Plain ol’ Input-Output (IO) prompting (asking a basic question and getting a basic answer) works fine for most simple questions. But we often want to ask LLMs more complicated questions than IO prompting can handle.

Chain-of-Thought (CoT) prompting, which encourages LLMs to decompose a complex task into small steps, works well for tasks requiring a bit of logic (e.g., a short Python script or math problem). By adding to CoT the ability to explore different independent thought paths via a tree structure, Tree-of-Thoughts (ToT) can handle even more complicated tasks. A “thought” in ToT (a piece of LLM-generated text) is only connected to a directly preceding or directly subsequent thought within a local chain (a tree branch), which limits the cross-pollination of ideas. Building on the fusion of classic data structures and LLMs, Graph-of-Thought (GoT) adds to ToT the ability for any "thought" to link to any other thought in a graph.
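To make the structural difference concrete, here is a toy sketch that stores each structure as a mapping from a thought to the thoughts it links to. The labels `t0` through `t4` and the helper `max_parents` are made up for illustration; none of this comes from the CoT, ToT, or GoT papers.

```python
# Toy illustration: each structure maps a thought to the thoughts it links to.
# The labels t0..t4 are hypothetical placeholders, not real LLM output.

# Chain: every thought leads to exactly one next thought.
chain = {"t0": ["t1"], "t1": ["t2"], "t2": []}

# Tree: a thought can branch, but branches never reconnect.
tree = {"t0": ["t1", "t2"], "t1": ["t3"], "t2": ["t4"], "t3": [], "t4": []}

# Graph: any thought may link to any other, so branches can merge or loop back.
graph = {"t0": ["t1", "t2"], "t1": ["t3"], "t2": ["t3"], "t3": ["t1"]}


def max_parents(links):
    """Return the largest number of incoming links any single thought has."""
    parent_counts = {thought: 0 for thought in links}
    for children in links.values():
        for child in children:
            parent_counts[child] += 1
    return max(parent_counts.values())


print(max_parents(chain), max_parents(tree), max_parents(graph))  # prints: 1 1 2
```

In the chain and the tree, no thought ever has more than one parent. Only the graph lets two separate lines of thought feed into the same node.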

Allowing any thought to link to any other thought likely models human thinking better than CoT or ToT, at least in most cases. Sure, you might occasionally generate a series of thoughts closely resembling a chain or a tree (e.g., developing contingency plans or standard operating procedures might fit this bill). More typically, though, our noggins, blissfully free of sequential constraints, produce thought patterns more reminiscent of a tangled web than a neat, symmetric tree or chain. Across a two-part article series, we’ll look at two different approaches to GoT. In this article, we’ll look at a multimodal (text + images) approach (by Yao et al.), and in a later article, we’ll check out a pure language approach (by Besta et al.).

Multimodal Graph of Thoughts

A graph-ish thought process that we often employ involves mapping different senses to one another. The phrase "The sun also rises," for example, might nudge your mind toward Hemingway or to Ecclesiastes or, perhaps, along the direction of pure symbols (i.e., words) like "hope," "orange," or "tomorrow."

But it might just as well evoke vivid imagery of places etched into your mind: perhaps a foggy sunrise you savored a few days back or a high school English lecture you enjoyed (or suffered through) decades ago. Memories beget memories, each mapping to further scents, sounds, textures, and tastes that spur further concepts, memories, and thoughts to well to the surface.

Our senses, knowledge, and memories weave together a tangled tapestry of thoughts. Attempting to mimic and harness this phenomenon, Shanghai Jiao Tong University and Wuhan University researchers Yao et al. designed an LLM-powered multimodal GoT model. They designed their four-staged architecture to tackle multiple-choice questions with more "reasoning" than a pure LLM might muster. To see how it works, let’s investigate each step of their architecture.

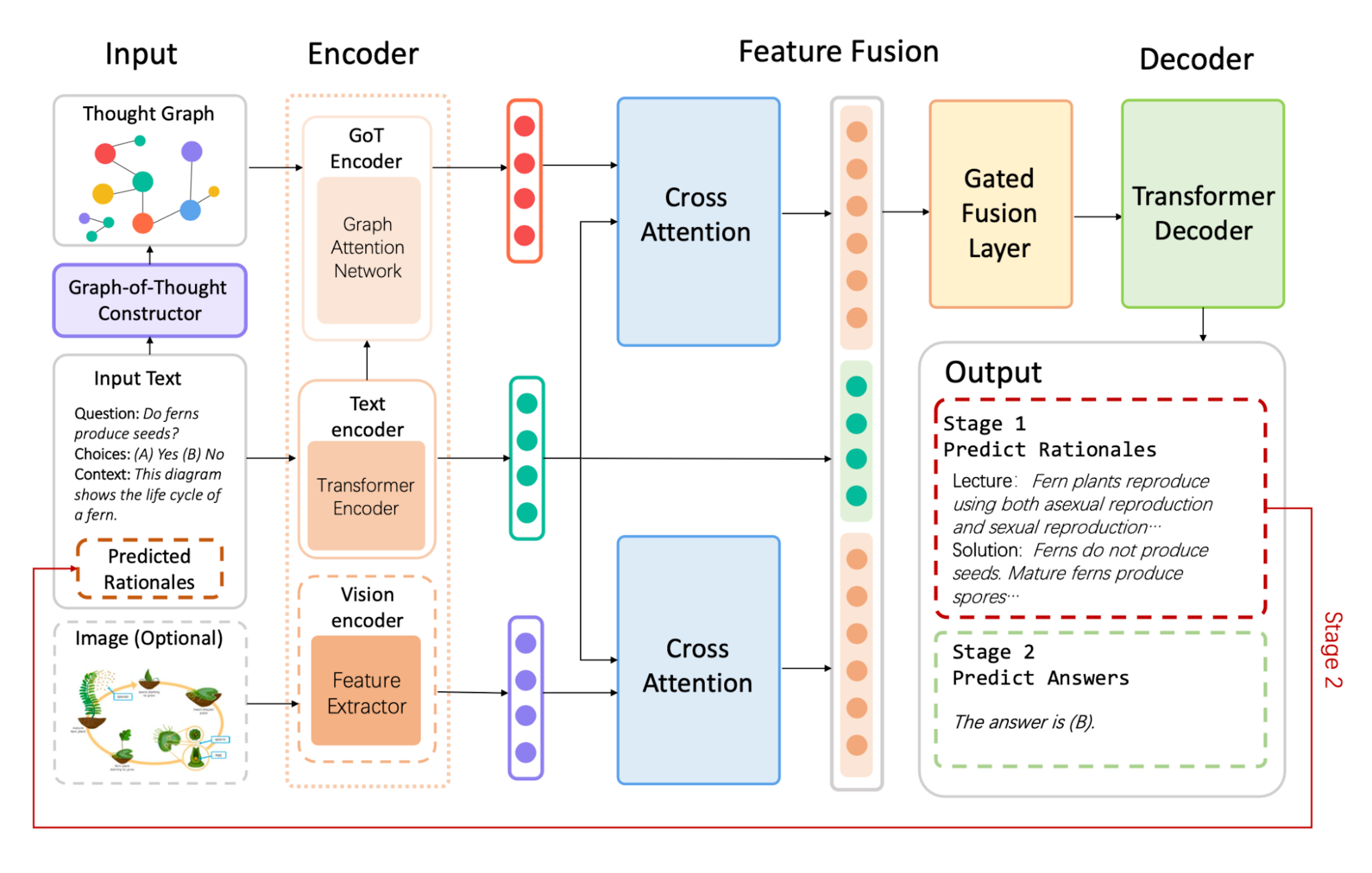

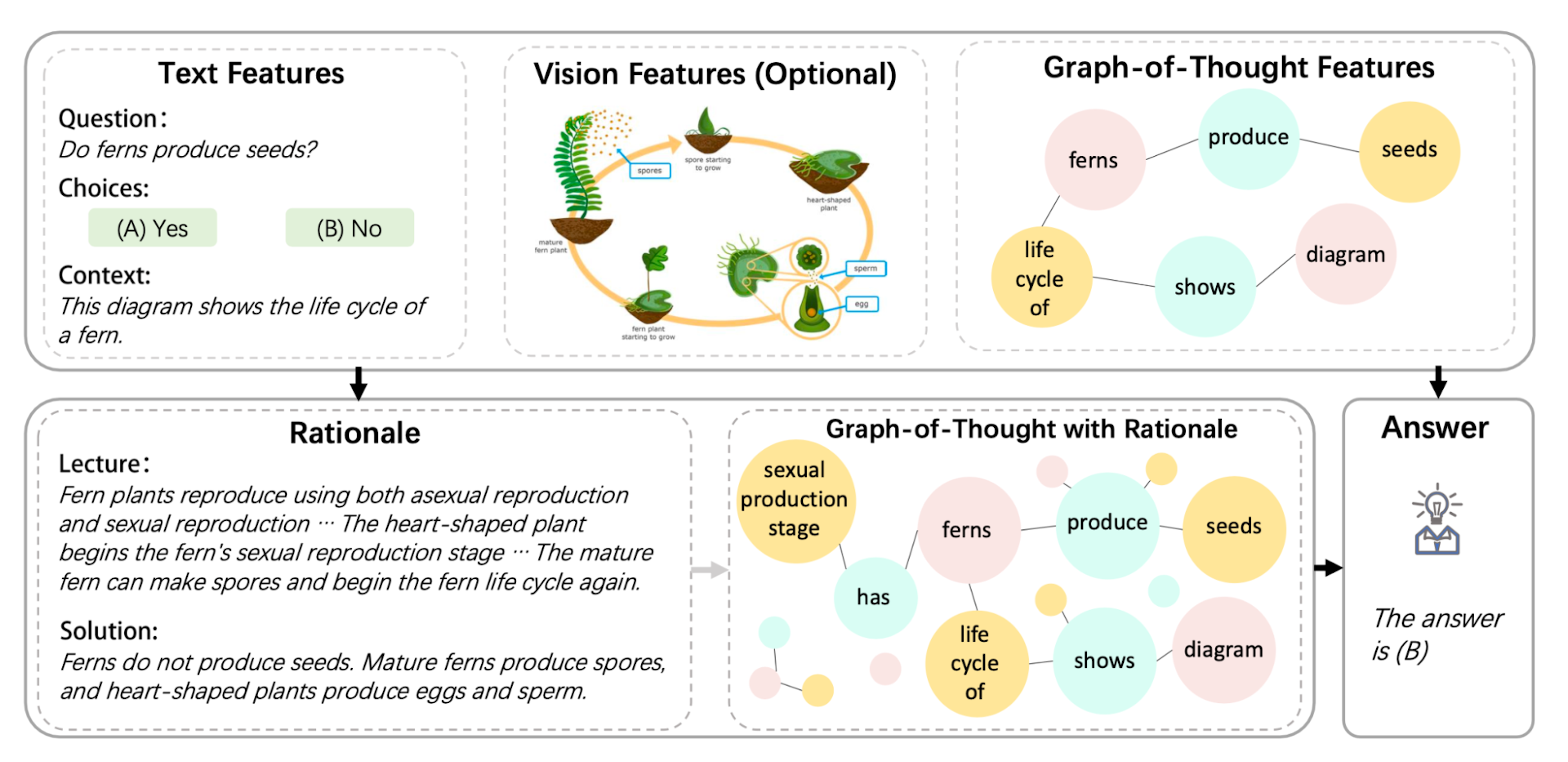

Image Source: Yao et al. (Yao et al.'s GoT Architecture)

Input Some Text (and an Optional Image)

First, you feed their model text (a math or science question, some context, and multiple candidate answers) and, optionally, an image and its caption. The text is fed to a transformer-based text encoder and to a "GoT constructor," which produces a rough "thought graph" (derived from the text) that’s then fed to a Graph Attention Network; the image is fed to a vision encoder. Each modality's encoder (text, image, and graph) is independent of the others.

Machine-Generated Rationale

Since the image and text encoders that Yao et al. used are fairly vanilla (they used UnifiedQA, a fine-tuned T5 model, for text and DETR for images), we’ll focus on Yao et al.’s more novel contribution, a "GoT constructor," which generates graphs of "thoughts" designed to help their overall system reason its way toward a final answer.

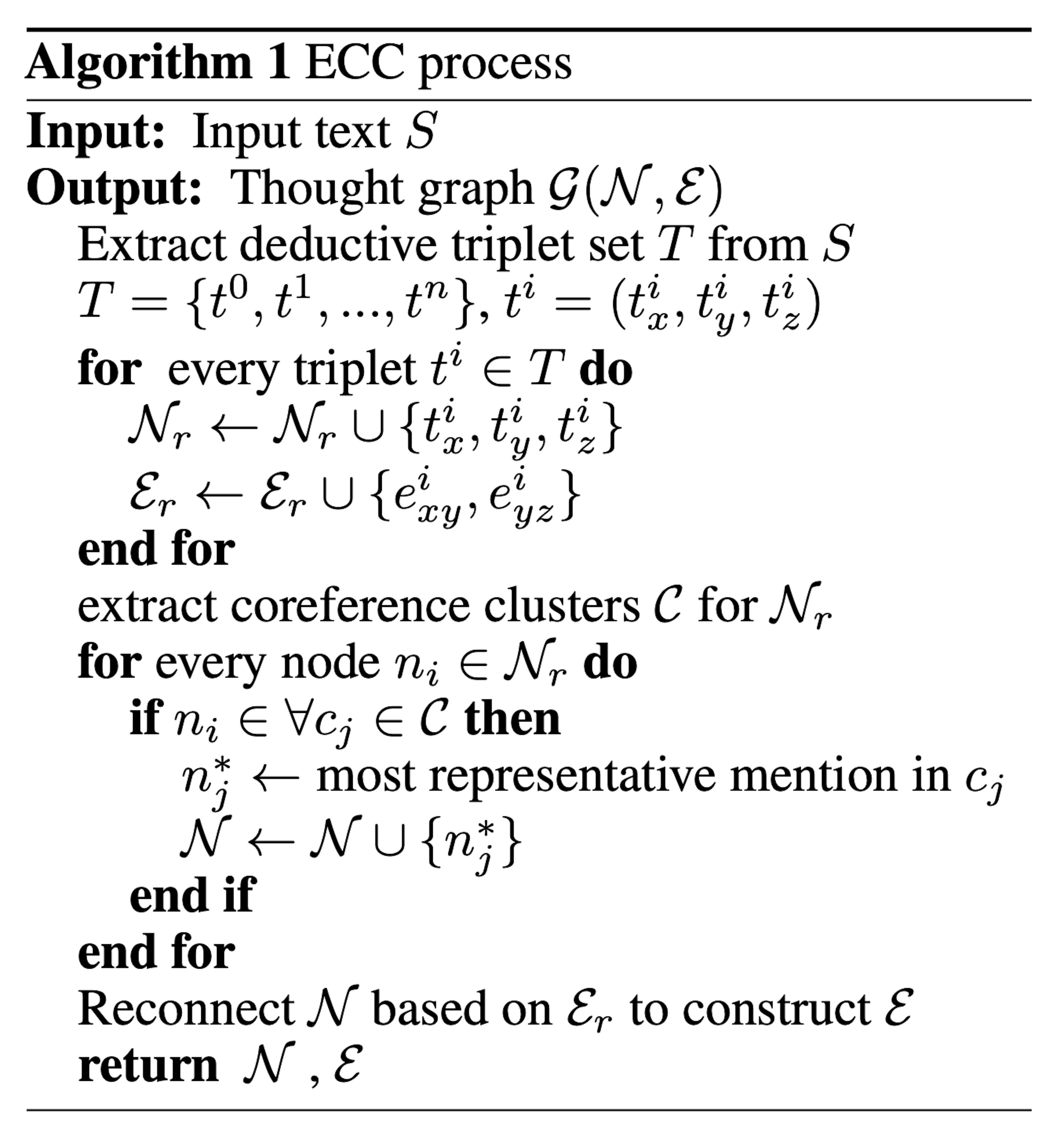

Extract-Clustering Coreference (ECC)

To build graphs of disparate "thoughts," Yao et al. developed an Extract-Clustering Coreference (ECC) system that takes in text and breaks it down into chunks of related thoughts. The ECC merges thoughts that refer to the same entities and then forms a rudimentary "thought graph" from these deduplicated thoughts. Yao et al. believed such knowledge graphs might help LLMs improve at basic deductive reasoning like the transitivity of implications (e.g., if A implies B and B implies C, then it follows that A also implies C).

To do all this, the ECC first uses Stanford's Open Information Extraction to extract subject-verb-object triplets (also called semantic triplets).

("You," "are reading," "a Deepgram article."), for example, is such a triplet. Most texts are rife with these triplets, and when you throw them all together, they can get noisy enough to warrant simplifying. You can see why this is by considering the following triplets:

("Forrest", "started running from", "Greenbow, Alabama")

("Mr. Gump", "jogged to", "Monument Valley Navajo Tribal Park")

("Monument Valley Navajo Tribal Park", "is located in", "Utah")

("Utah", "is a long run from", "Alabama")

We can trim these up a bit to make things easier for the machines. To do this, Yao et al. used Stanford's CoreNLP toolkit to perform "coreference resolution" (merging together different words that refer to the same entity).

Fed the first two triplets from the example above, a coreference resolution algorithm might infer that the strings "Forrest" and "Mr. Gump" refer to the same entity, "Forrest Gump." Likewise, it might also infer that "running" and "jogging" are similar enough actions to map to the same meaning. So, suppose we end up with:

("Forrest Gump", "ran from", "Greenbow, Alabama")

("Forrest Gump", "ran to", "Monument Valley Navajo Tribal Park")
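Here is a minimal sketch of that merging step. The hand-written alias tables below stand in for a real coreference resolver such as the CoreNLP toolkit; the table contents and the `normalize` helper are assumptions made for illustration, not part of Yao et al.'s code.

```python
# Hand-written alias tables standing in for a real coreference resolver.
# These mappings are hypothetical; a real system would infer them from text.
ENTITY_ALIASES = {"Forrest": "Forrest Gump", "Mr. Gump": "Forrest Gump"}
RELATION_ALIASES = {"started running from": "ran from", "jogged to": "ran to"}

raw_triplets = [
    ("Forrest", "started running from", "Greenbow, Alabama"),
    ("Mr. Gump", "jogged to", "Monument Valley Navajo Tribal Park"),
    ("Monument Valley Navajo Tribal Park", "is located in", "Utah"),
    ("Utah", "is a long run from", "Alabama"),
]


def normalize(triplet):
    """Map each item of a (subject, relation, object) triplet to its canonical form."""
    subject, relation, obj = triplet
    return (
        ENTITY_ALIASES.get(subject, subject),
        RELATION_ALIASES.get(relation, relation),
        ENTITY_ALIASES.get(obj, obj),
    )


merged_triplets = [normalize(t) for t in raw_triplets]

for triplet in merged_triplets:
    print(triplet)
```

Anything without an entry in the alias tables passes through untouched, so the last two triplets come out exactly as they went in.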

With these slightly cleaned-up triplets, the model might then deduce a few things.

IF ("Forrest Gump", "ran to", "Monument Valley Navajo Tribal Park") AND IF ("Monument Valley Navajo Tribal Park", "is located in", "Utah"):

THEN ("Forrest Gump", "ran to", "Utah").

Given the above, we get:

IF ("Forrest Gump", "ran from", "Greenbow, Alabama") AND IF ("Forrest Gump", "ran to", "Utah") AND IF ("Utah", "is a long run from", "Alabama"):

THEN ("Forrest Gump", "ran", "far").
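The first of these deductions can be written as a tiny hard-coded rule. This is only a sketch to make the logic concrete: the `infer_destinations` function and its single rule are hypothetical, and nothing here implies that Yao et al.'s model applies hand-written rules like this one.

```python
triplets = [
    ("Forrest Gump", "ran from", "Greenbow, Alabama"),
    ("Forrest Gump", "ran to", "Monument Valley Navajo Tribal Park"),
    ("Monument Valley Navajo Tribal Park", "is located in", "Utah"),
    ("Utah", "is a long run from", "Alabama"),
]


def infer_destinations(triplets):
    """Apply one rule: (X, ran to, Y) and (Y, is located in, Z) => (X, ran to, Z)."""
    # Look-up table from a place to the larger place that contains it.
    located_in = {subj: obj for subj, rel, obj in triplets if rel == "is located in"}
    inferred = []
    for subj, rel, obj in triplets:
        if rel == "ran to" and obj in located_in:
            inferred.append((subj, "ran to", located_in[obj]))
    return inferred


print(infer_destinations(triplets))
# prints: [('Forrest Gump', 'ran to', 'Utah')]
```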

If you want more details, here's the pseudocode for Yao et al.'s ECC process:

Image Source: Yao et al.

From the above, we can see how a group of deductive triplets might accumulate into a rudimentary yet useful knowledge graph, but how does a machine know how to make sense of these knowledge graphs?

As is often the case with ML problems, we need to represent these as vectors of numbers so machines can work with them. First, each item in a deductive triplet (a string) is represented as a node, and relations between each of these nodes are represented as edges.

Such nodes and edges are represented in an adjacency matrix, a square matrix where ones represent connected nodes (at that row-column pair) and zeros represent non-connected nodes. Each node’s text is also embedded (represented as vectors of numbers).
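As a rough sketch of this step, the snippet below turns two triplets into a node list and an adjacency matrix. It assumes undirected edges that link each subject to its relation and each relation to its object; the exact edge scheme Yao et al. used may differ, and `build_adjacency` is a name made up for this example.

```python
def build_adjacency(triplets):
    """Return (nodes, matrix) where matrix[i][j] == 1 if nodes i and j are linked."""
    # Collect every distinct string, in first-seen order, as a node.
    nodes = []
    for triplet in triplets:
        for item in triplet:
            if item not in nodes:
                nodes.append(item)
    index = {node: i for i, node in enumerate(nodes)}

    size = len(nodes)
    matrix = [[0] * size for _ in range(size)]
    for subject, relation, obj in triplets:
        # Assumption: link subject-relation and relation-object, in both directions.
        for a, b in ((subject, relation), (relation, obj)):
            matrix[index[a]][index[b]] = 1
            matrix[index[b]][index[a]] = 1
    return nodes, matrix


example_triplets = [
    ("Forrest Gump", "ran to", "Monument Valley Navajo Tribal Park"),
    ("Monument Valley Navajo Tribal Park", "is located in", "Utah"),
]
nodes, matrix = build_adjacency(example_triplets)

for node, row in zip(nodes, matrix):
    print(row, node)
```

Because "Monument Valley Navajo Tribal Park" appears in both triplets, it becomes a single shared node, which is what stitches the two facts into one connected graph.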

All this information is then fed to a Graph Attention Network Encoder (GAT), which helps machines learn what portions of the knowledge graph are most worth paying attention to. It does this by learning ideal attention weights between neighboring nodes, which help the GAT figure out what nodes to focus on and what nodes to skim.
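To give a feel for what "attention weights between neighboring nodes" means, here is a stripped-down sketch of how a graph attention layer scores a node's neighbors. It skips the learned linear projection that a full graph attention layer applies before scoring, and the feature values and `attn_vector` are made-up numbers rather than trained parameters.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(features, adjacency, attn_vector, node):
    """Softmax-normalized attention from `node` to itself and its neighbors."""
    # A node attends to its connected neighbors and to itself.
    neighbors = [j for j, linked in enumerate(adjacency[node]) if linked or j == node]
    scores = []
    for j in neighbors:
        pair = features[node] + features[j]  # concatenate the two feature lists
        scores.append(leaky_relu(sum(a * x for a, x in zip(attn_vector, pair))))
    # Softmax over the neighborhood (subtracting the max for numerical stability).
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return {j: e / total for j, e in zip(neighbors, exps)}


# Hypothetical 3-node path graph (0 - 1 - 2) with 2-dimensional node features.
adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attn_vector = [0.5, -0.5, 1.0, 0.25]  # stand-in for learned parameters

weights = attention_weights(features, adjacency, attn_vector, node=0)
print(weights)
```

Node 0 only distributes attention over itself and node 1; node 2 is not a neighbor, so it gets no weight at all. Training would adjust the projection and `attn_vector` so that the most useful neighbors end up with the largest weights.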

Fusing Modalities

After encoding each modality independently (text, graph, and, optionally, image data), Yao et al.'s system fuses each type of information. To do this, their system first uses single-headed attention to weigh how the graph and image embeddings ought to align with the text embeddings.

Imagine you have information about a crime: several pages of notes (text), a collection of images, and a basic graph of known suspects', victims', and witnesses' relations to one another. If you were a pre-personal-computer-era detective crafting an evidence board, you might first pull out facts from the report and, where possible, line those facts up with supporting images and relations from the graph. Single-headed attention serves a similar purpose, matching non-text information (in this case, image and graph data) to relevant text segments.
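A bare-bones sketch of this alignment is below, using scaled dot-product attention with the text vectors as queries and the graph (or image) vectors as keys and values. It leaves out the learned query, key, and value projections a real model would have, and the vectors are invented for illustration.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def align(text_vecs, other_vecs):
    """For each text vector, return an attention-weighted mix of `other_vecs`."""
    dim = len(text_vecs[0])
    aligned = []
    for query in text_vecs:
        # Similarity between this text vector and every graph/image vector.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in other_vecs
        ]
        weights = softmax(scores)
        aligned.append(
            [sum(w * vec[d] for w, vec in zip(weights, other_vecs)) for d in range(dim)]
        )
    return aligned


# Hypothetical embeddings: two text tokens and three graph nodes, 2 dimensions each.
text_vecs = [[1.0, 0.0], [0.0, 1.0]]
graph_vecs = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]

for row in align(text_vecs, graph_vecs):
    print(row)
```

The output has one row per text token, so the graph (or image) information ends up "pinned" next to the piece of text it best matches, much like the detective's board.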

After single-headed attention aligns the modalities, the next step is figuring out how to craft an evidence board that's (somewhat) graspable at a glance. A detective might prominently display a few key pieces of the story while subduing or entirely leaving out irrelevant details.

A machine version of this that Yao et al. used, the “gated fusion mechanism,” similarly decides how much of each modality (text, image, graph) should feature in the final representation.
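The general idea of a gate can be sketched in a few lines. This is a generic two-input gate with scalar weights, not Yao et al.'s exact formulation (which handles text, image, and graph features with learned weight matrices); `gated_fusion` and its parameters are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(text_vec, other_vec, w_text, w_other, bias=0.0):
    """Blend two vectors element-wise; the gate decides how much of each to keep."""
    fused = []
    for t, o in zip(text_vec, other_vec):
        gate = sigmoid(w_text * t + w_other * o + bias)  # value between 0 and 1
        fused.append((1.0 - gate) * t + gate * o)
    return fused


text_vec = [1.0, 0.0]
graph_vec = [0.0, 1.0]

# With zero weights and zero bias the gate sits at 0.5: an even blend.
print(gated_fusion(text_vec, graph_vec, w_text=0.0, w_other=0.0))
# prints: [0.5, 0.5]

# A strongly negative bias closes the gate, so the output stays close to the text.
print(gated_fusion(text_vec, graph_vec, w_text=0.0, w_other=0.0, bias=-50.0))
```

In a trained model the weights and bias are learned, so the gate opens wider for whichever modality proves more helpful for a given input.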

Prediction Phase

The final part of Yao et al.'s architecture involves inference components. First, a transformer decoder takes in the fused features from the previous step and generates a two-staged output:

Predict Rationales: the model generates a multi-step explanation of its "reasoning" process (drawing from the knowledge graph).

Predict Answers: based on the rationales it just generated, the model then infers the most likely answer (from the constrained answer set given in the initial input).

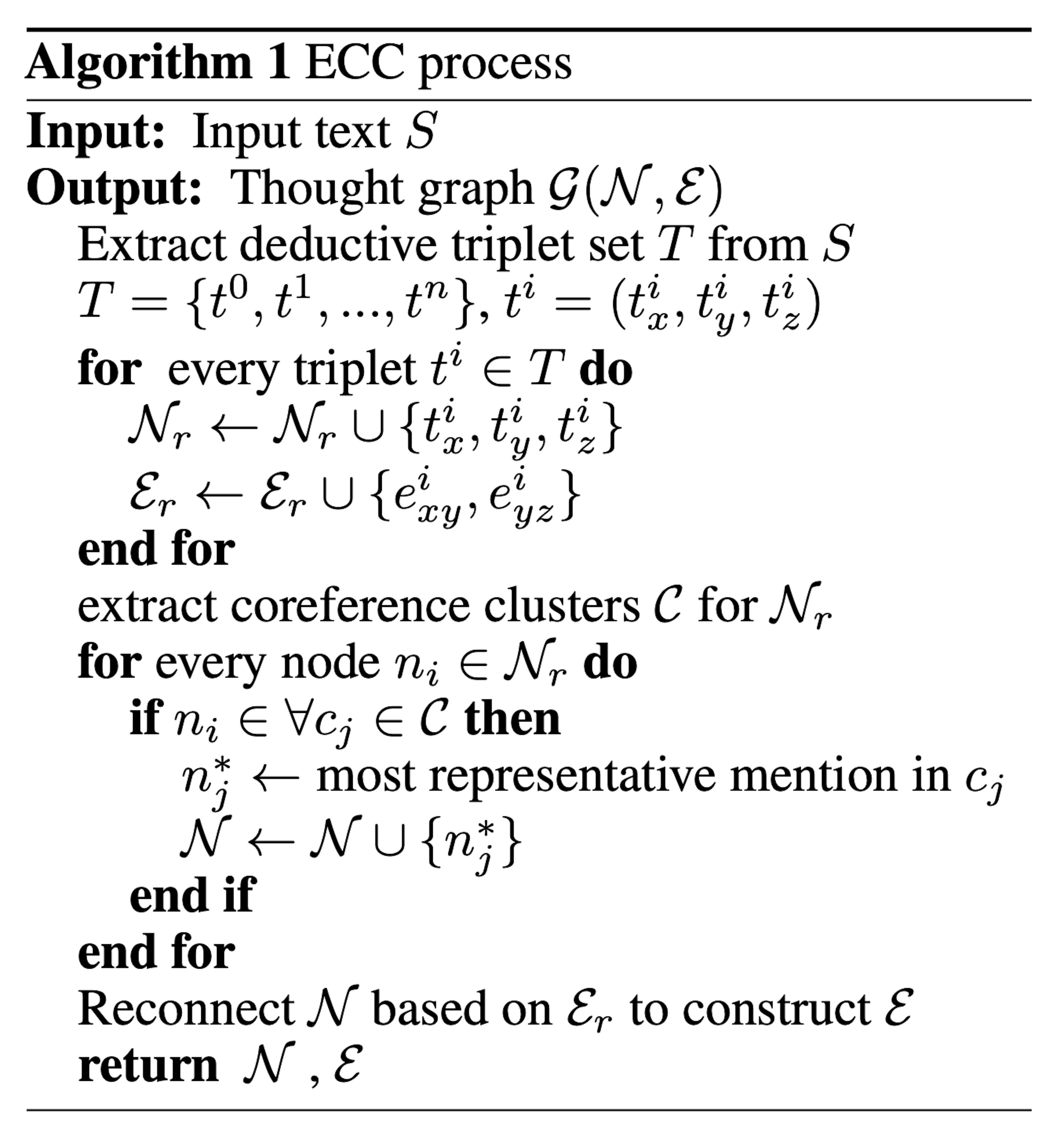

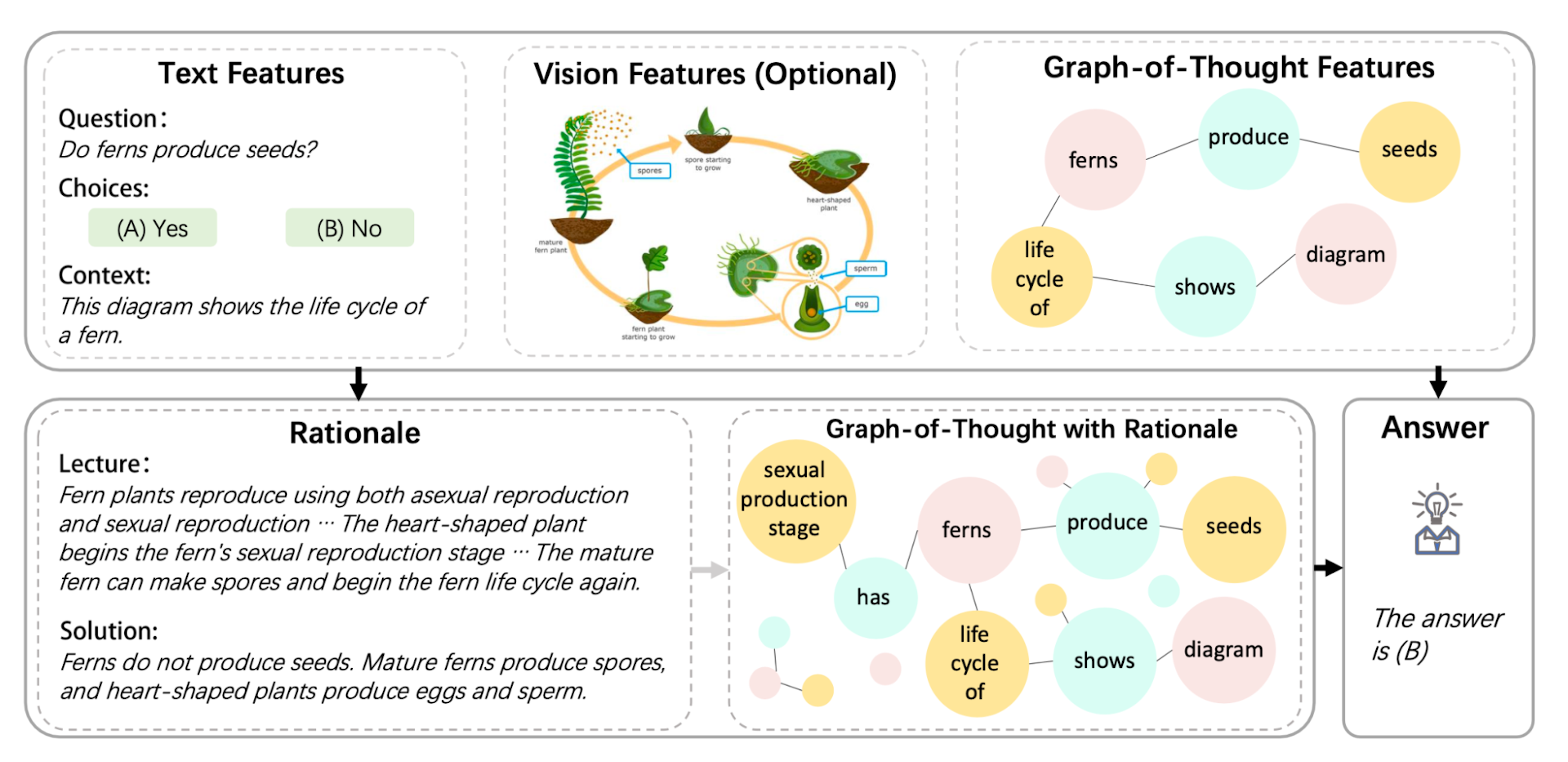

Below is an example question, image, graph, and predicted rationale and answer:

Image Source: Yao et al. (Example of GoT reasoning)

In its entirety, Yao et al.'s framework creates a reasoning path that leverages multimodal inputs (text and optional images) to simulate a more human-like, multi-sense problem-solving approach. The two-stage prediction phase is designed not only to spit out accurate answers but also to convey the model’s underlying reasoning process, an important step toward transparency and trust in AI decision-making. Now that we have an idea of the model’s moving pieces, let’s see how it fared.

Tests and Results

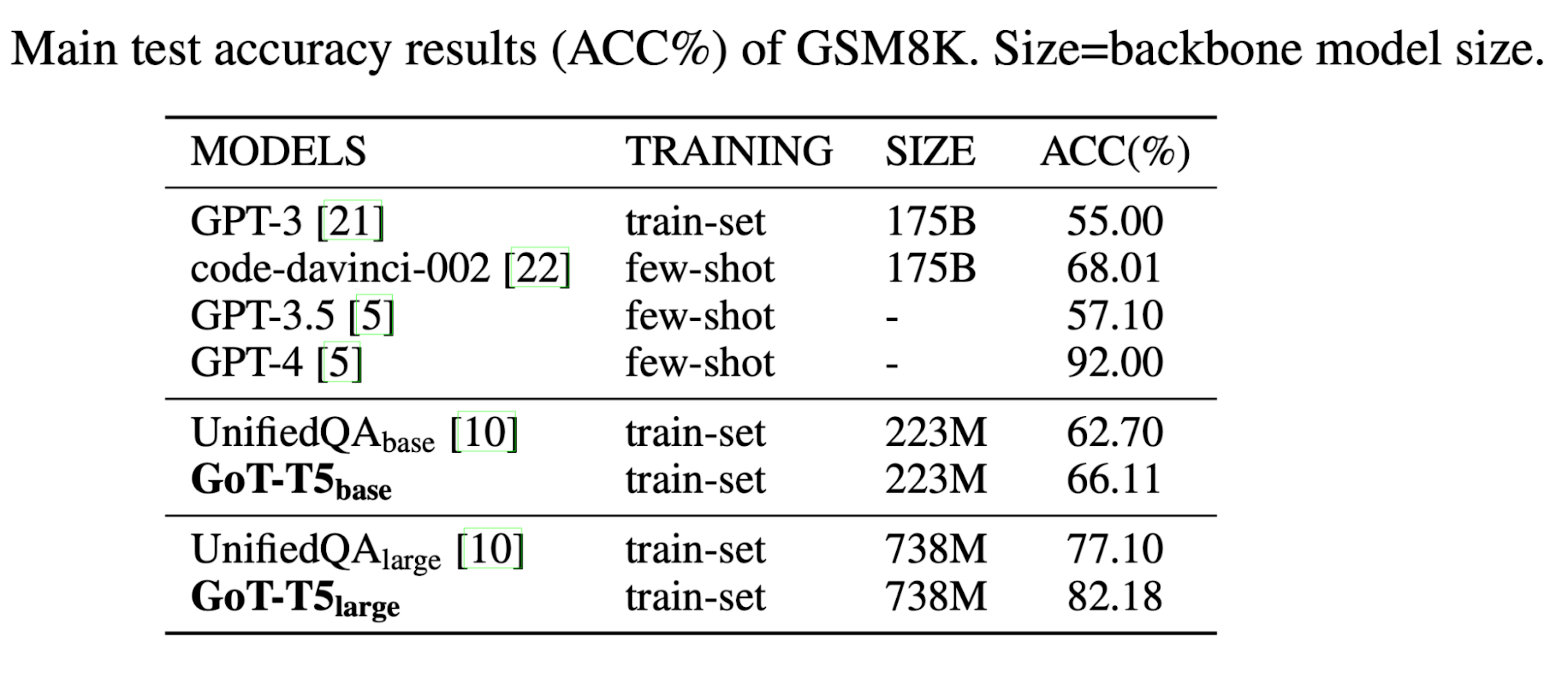

Yao et al. tested their model on the following:

GSM8K: a text benchmark comprised of 8.5k grade school math problems with accompanying multi-step solutions

ScienceQA: a multimodal benchmark made up of around 21k language, social, and natural science questions (all with text; some with image and text).

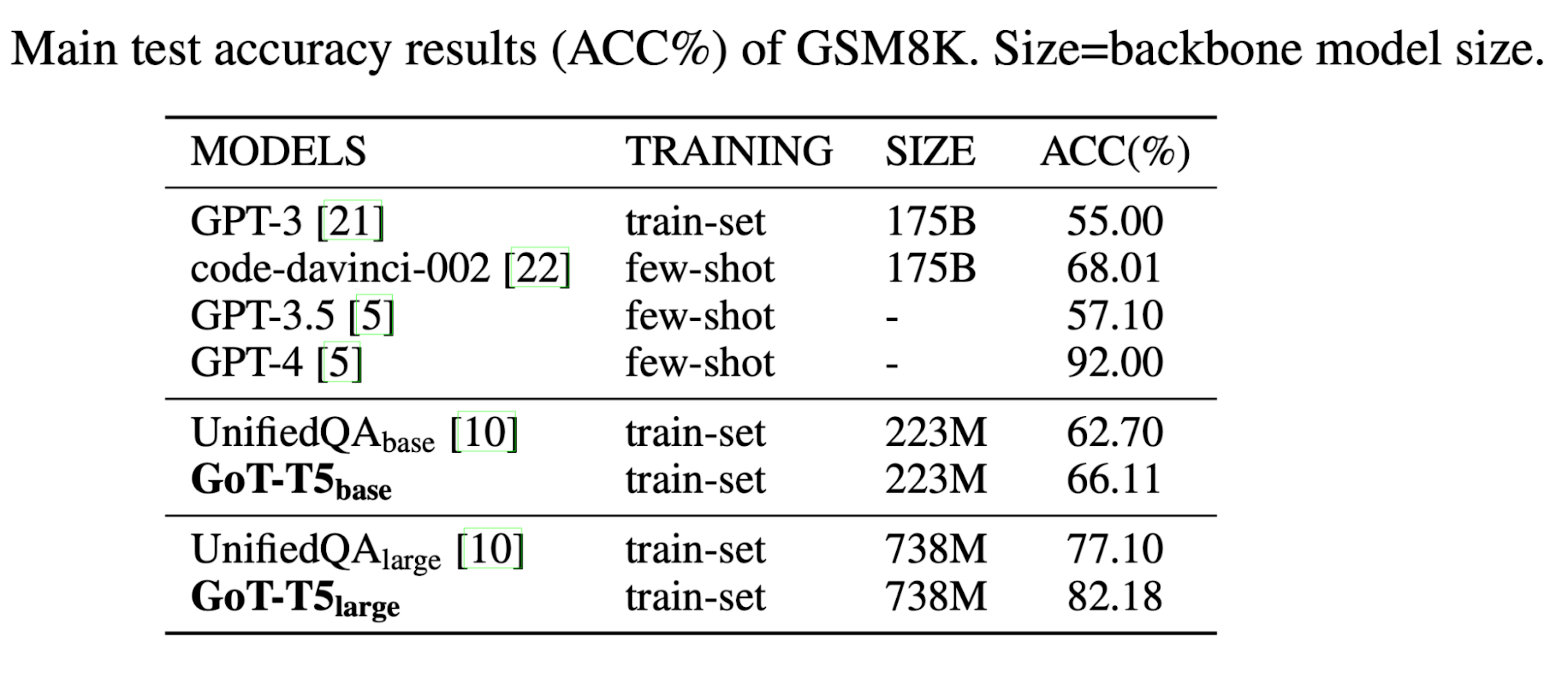

As baseline models for the text-only GSM8K benchmark, Yao et al. used GPT-3, 3.5, and 4, code-davinci-002, and UnifiedQA (a T5 model fine-tuned on GSM8K questions). Below is how the baseline models stacked up against Yao et al.'s GoT:

Image Source: Yao et al. (Note)

You can see that Yao et al.'s GoT-T5 base and large models performed significantly better than UnifiedQA base and large, respectively, and better than GPT-3 and GPT-3.5, despite GoT-T5 (base and large) having far fewer parameters than either. GPT-4, however, performed better than both GoT-T5 models.

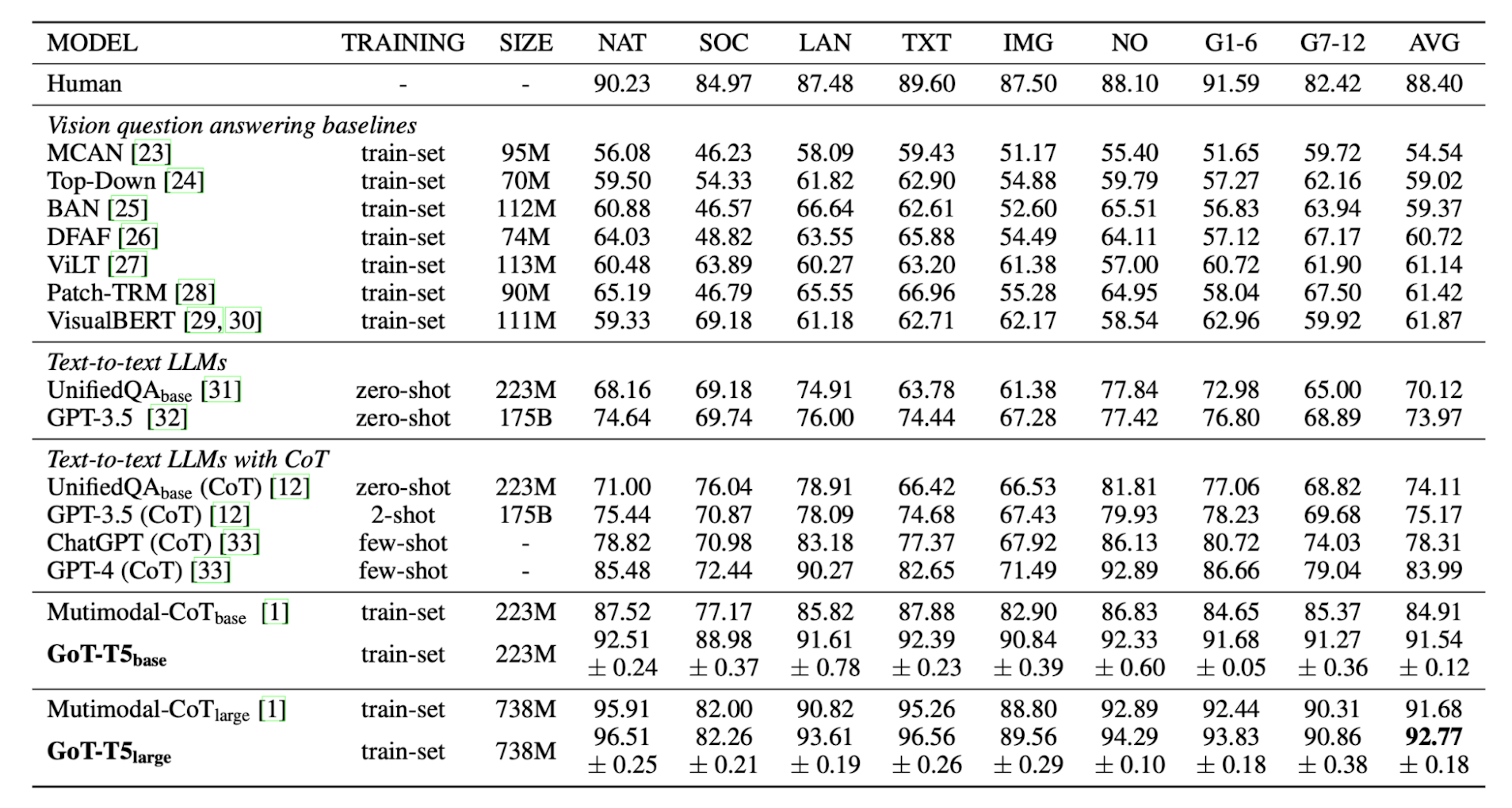

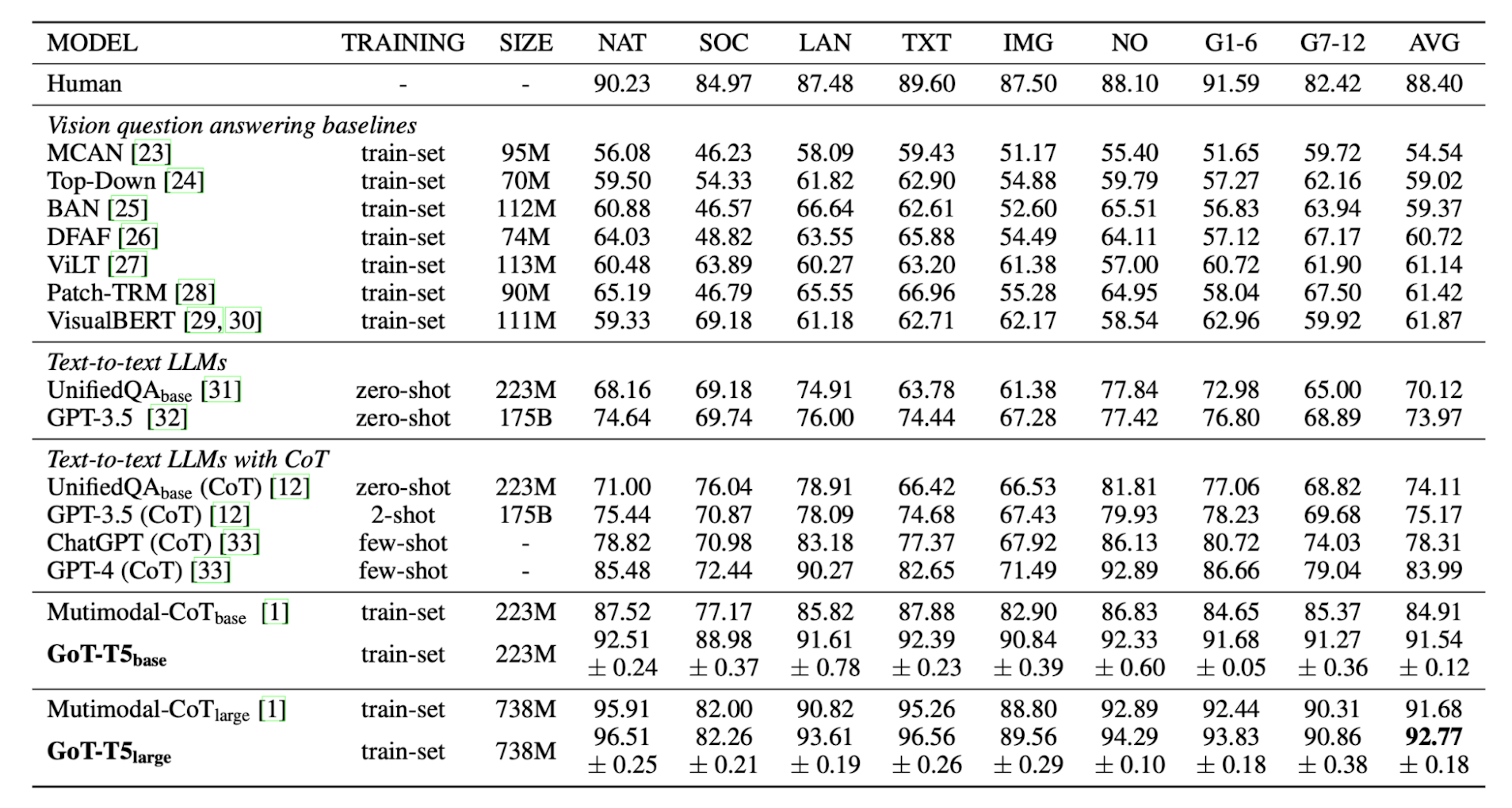

Yao et al. used a few different sets of baseline models for the ScienceQA multimodal benchmark:

a set of vision question answering models

a set of text-to-text LLMs

a set of text-to-text LLMs with CoT

a set of multimodal CoT models.

You can check the results below:

Image Source: Yao et al. (ScienceQA results. SIZE=parameters. NAT = natural science, SOC = social science, LAN = language science, TXT = text context, IMG = image context, NO = no context, G1-6 = grades 1-6, G7-12 = grades 7-12, AVG= average accuracy)

You'll notice that Yao et al.'s GoT-T5 base and large performed slightly better than Multimodal-CoT in every category of science questions and significantly better than the other baselines across most categories.

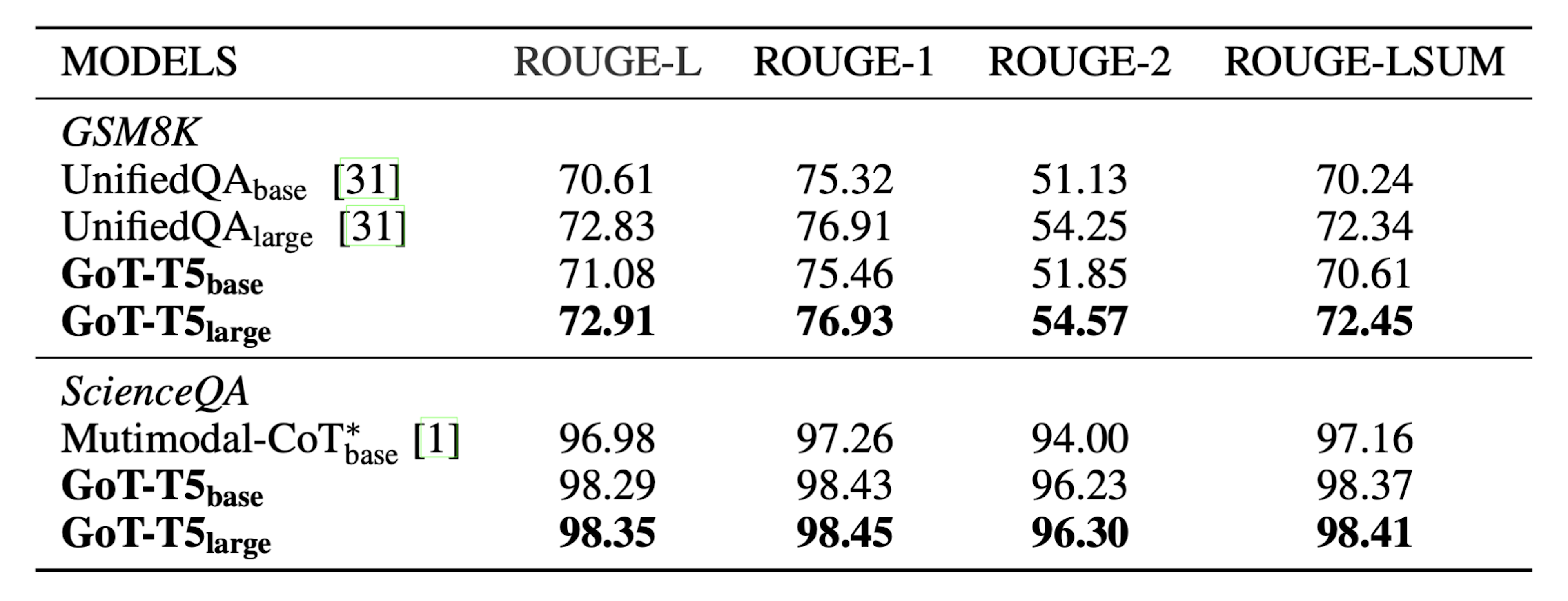

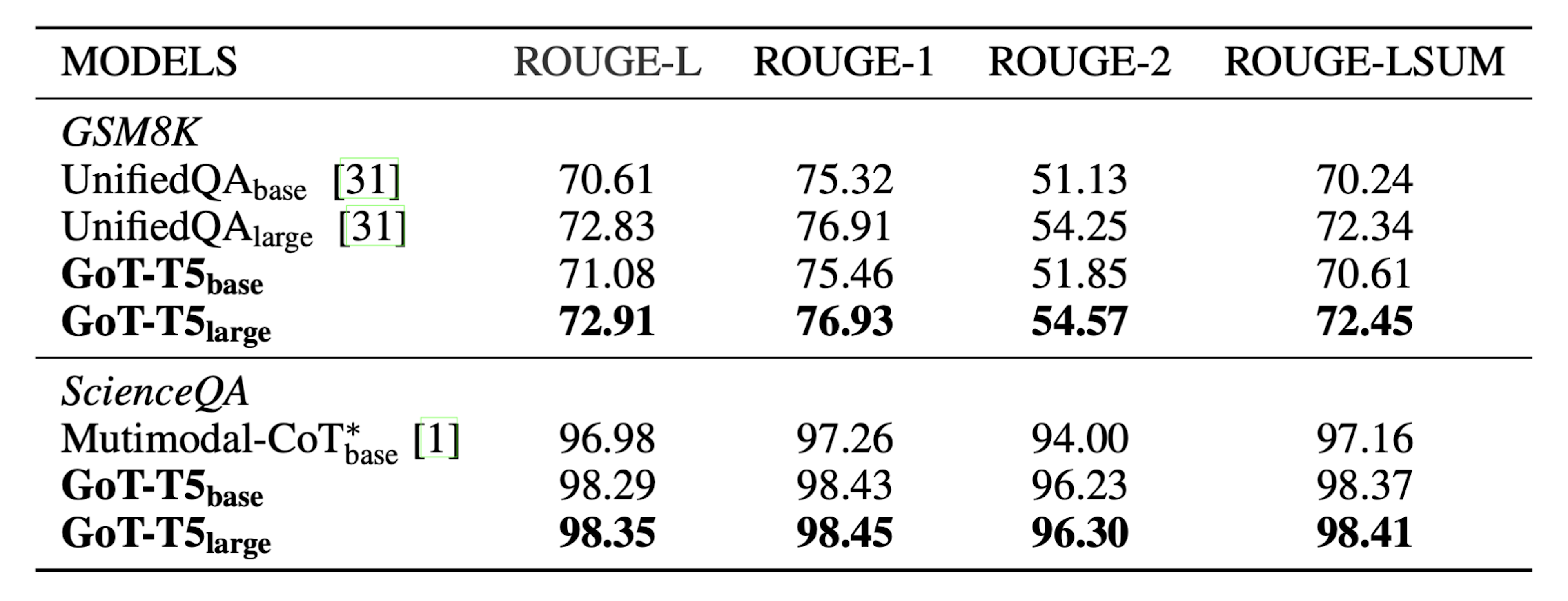

Yao et al. also computed ROUGE scores for the rationales that the baseline and GoT models generated. These scores measured how similar the models' reasoning steps were to the reference answers’ reasoning steps. As you can see below, GoT slightly outperformed the baselines in ROUGE metrics:

Image Source: Yao et al. (ROUGE scores for GoT and baselines)
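For intuition about what a ROUGE score measures, here is a small sketch of one common variant, ROUGE-L, which rewards the longest common subsequence of words shared by a generated rationale and its reference. Whether this matches the exact variant and settings Yao et al. used is an assumption; the whitespace tokenization and plain F1 below are simplifications.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, token_a in enumerate(a, start=1):
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[len(a)][len(b)]


def rouge_l_f1(candidate, reference):
    """Simplified ROUGE-L: F1 of LCS-based precision and recall over words."""
    cand_tokens, ref_tokens = candidate.split(), reference.split()
    lcs = lcs_length(cand_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(rouge_l_f1("a b c d", "a x c y"))  # prints: 0.5
```

A score like this only checks word overlap with the reference rationale, so a model can reason soundly in different words and still score modestly.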

Yao et al. believe their GoT models only generated slightly better rationales (compared to the baselines) because they didn't feed their models much information to build thought graphs with (only text from the GSM8K questions and their corresponding multiple-choice answers). In other words, constraining the material their language models had available to "reason" with might have cramped their reasoning ability.

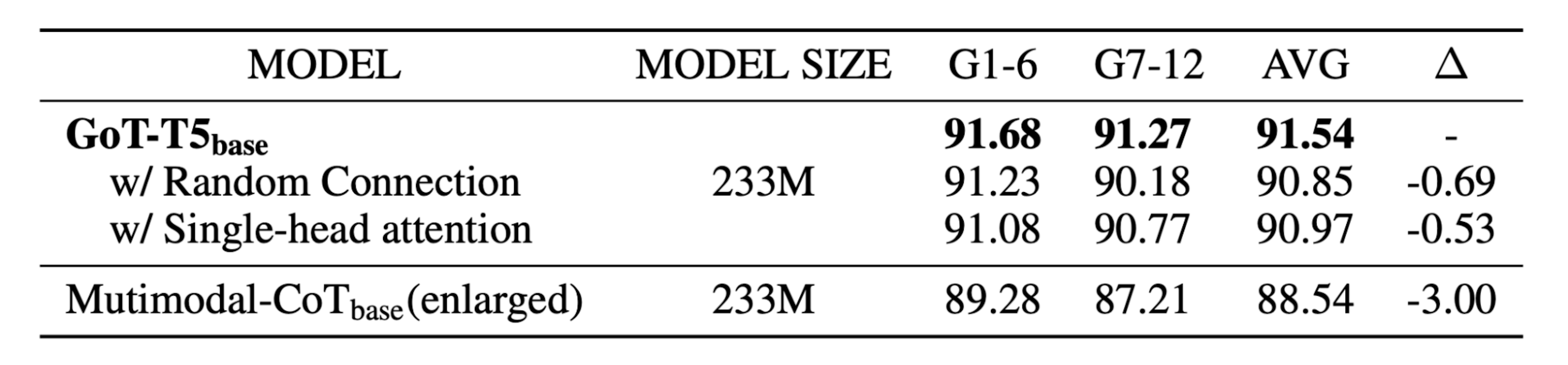

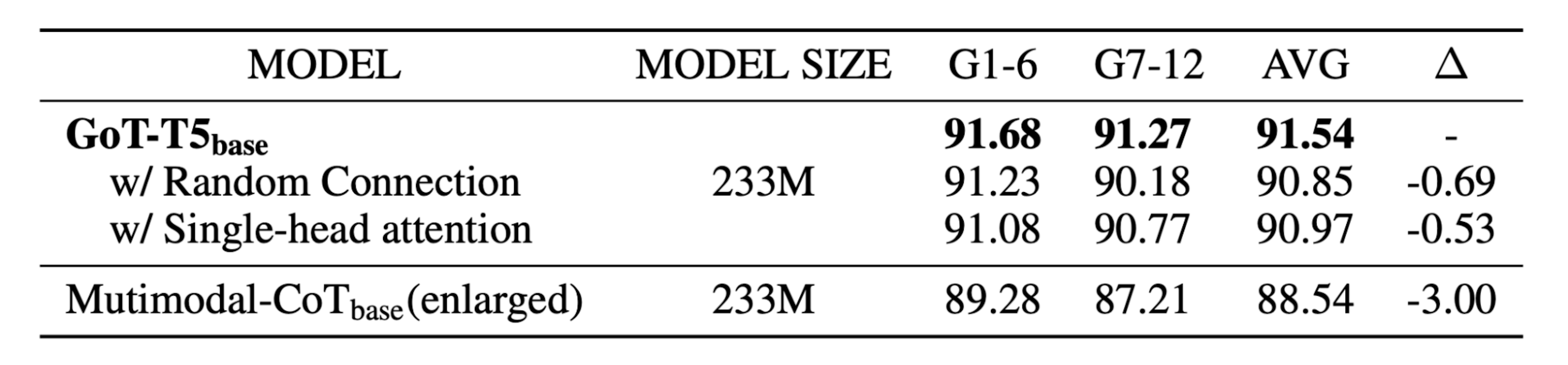

Did the Graphs Even Help?

Yao et al. performed a few ablation studies to get an idea of what exactly boosted their GoT models’ accuracy. One ablation was feeding their model a graph with random connections (instead of the graphs composed of deductive triplets they normally fed to their GoT models). If it was indeed the graphs that boosted their GoT model's accuracy (over the baselines), feeding their GoT models randomly generated graphs ought to significantly reduce their accuracy compared to using a logical knowledge graph.
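One plausible way to build such a random graph is to keep the number of nodes and edges the same but scatter the edges at random. The sketch below does exactly that for an undirected graph without self-loops; how Yao et al. actually generated their random graphs is not described here, so treat `random_graph_like` as a hypothetical stand-in.

```python
import random


def random_graph_like(adjacency, seed=0):
    """Return a random undirected graph with the same node and edge counts."""
    size = len(adjacency)
    # Every unordered pair of distinct nodes is a candidate edge.
    pairs = [(i, j) for i in range(size) for j in range(i + 1, size)]
    edge_count = sum(adjacency[i][j] for i, j in pairs)

    rng = random.Random(seed)  # seeded so the ablation is repeatable
    shuffled = [[0] * size for _ in range(size)]
    for i, j in rng.sample(pairs, edge_count):
        shuffled[i][j] = 1
        shuffled[j][i] = 1
    return shuffled


# Hypothetical 4-node "logical" graph: a simple path 0 - 1 - 2 - 3.
logical = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
scrambled = random_graph_like(logical, seed=42)
for row in scrambled:
    print(row)
```

Holding the edge count fixed keeps the comparison fair: any accuracy change then comes from where the edges are, not from how many there are.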

Image Source: Yao et al. (Ablation study. Note: Using a random graph for rationale generation didn't seriously degrade GoT’s accuracy.)

The random graph indeed lowered accuracy, which Yao et al. saw as evidence that the graphs boosted their GoT model’s performance. The drop was less than one percent, however, making it unclear why Yao et al. claimed the graph component played such a crucial role in their GoT model's performance.

Pros and Cons of Yao et al.'s GoT Approach

Though Yao et al.'s GoT performed better than multimodal CoT at most of the tests they threw at it, their approach requires training or fine-tuning several components (text, vision, and GoT encoders), likely leading to increased training and inference costs compared to multimodal CoT. For applications where reasoning isn’t vital, GoT's meager accuracy boost might not be worth the extra time and compute costs compared to ToT or CoT variants.

Despite its increased complexity and costs, the multimodal GoT approach that Yao et al. experimented with here serves as a good base to build from. Further research might, for example, add more modalities into the mix or create more developed knowledge graphs to, in turn, generate better rationales for answering questions. Stay tuned for part two of our GoT series, where we’ll go over Besta et al.’s language-only approach.