Attention Mechanisms

This article covers attention mechanisms, from their roots in cognitive science to their implementation in modern deep learning models. You'll see how these mechanisms let models focus selectively on the most relevant input, and how that focus improves efficiency and prediction accuracy.

What are Attention Mechanisms in Deep Learning

Attention mechanisms in deep learning give a model the ability to focus on specific parts of the input data, much like human attention. This selective focus improves efficiency and accuracy, especially when the relevance of different parts of the input varies widely. The foundational aspects are:

Mimicking Human Attention: Drawing parallels to human cognitive processes, attention mechanisms in deep learning models allow for a dynamic focus. This means models can prioritize parts of the input that are most relevant to the task at hand, ignoring less relevant information.

Enhancing Model Efficiency: By focusing on specific parts of the input, models can process information more efficiently. This not only speeds up the learning process but also improves the accuracy of predictions.

Evolution and Significance: The evolution of attention mechanisms has been significant in the field of deep learning. Initially inspired by cognitive science, this concept has grown in importance, paralleling advancements in AI and machine learning technologies.

Components of an Attention Mechanism: To understand how attention mechanisms work, it's essential to grasp their components: query, key, and value vectors. The query represents what the model is looking for, each key describes one part of the input, and each value carries that part's content. Comparing the query with the keys lets the model adjust its focus to the task, much like how we pay attention to different aspects of a scene based on what we're looking for.

Foundation in Cognitive Science: The initial inspiration for attention mechanisms came from understanding how selective attention works in humans. This abstraction from cognitive science into computational models has been a game-changer, allowing for more sophisticated and human-like processing by deep learning models.

In essence, attention mechanisms serve as a critical bridge between the vast data available to deep learning models and the need to focus on what's truly relevant. This not only mimics the human ability to prioritize but also significantly enhances the model's efficiency and accuracy. As we explore further, keep in mind the transformative potential these mechanisms hold for the future of AI and machine learning.
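For reference, the interaction between queries, keys, and values is commonly written as scaled dot-product attention, the form used in the Transformer paper "Attention Is All You Need". The symbols below follow that paper's notation rather than anything defined in this article: Q, K, and V are matrices whose rows are query, key, and value vectors, and d_k is the dimension of the key vectors.

```latex
\[
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
\]
```

Other scoring functions exist (additive attention, for instance), so this is one common instance rather than the only definition.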

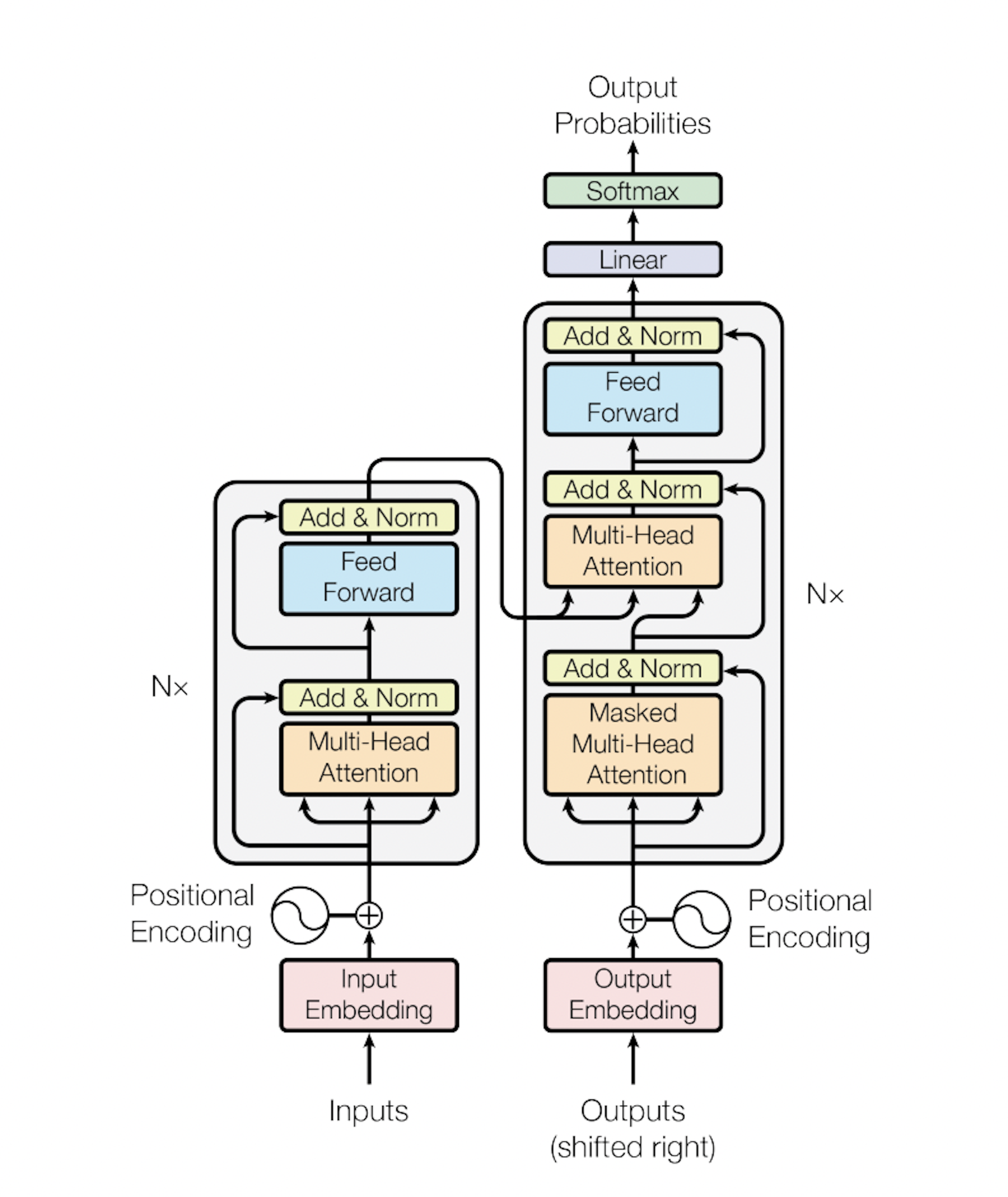

Transformer Model Architecture

How Attention Mechanisms Work

The Role of Weights in Focusing the Model

Attention mechanisms in deep learning employ a sophisticated system of weights to manage the model's focus on different parts of the input data. These weights are not static; they adjust as the model processes information, ensuring that the focus aligns with the most relevant aspects of the data. The process works as follows:

Assignment of Weights: Each part of the input data is assigned a weight that signifies its importance to the task at hand.

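Dynamic Adjustment: The weights are recomputed for every input, so the same model can focus on different parts of different inputs. Training does not store the weights themselves; it adjusts the parameters that produce them, refining where the model focuses.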

Impact on Model Performance: Proper weighting is crucial for model performance. Incorrectly focused attention can lead to misinterpretation of the input data, while a well-tuned focus enhances accuracy and efficiency.

Calculation of Attention Scores

Attention scores determine how the model prioritizes different parts of the input data. Each score is calculated by comparing a query vector, which reflects the model's current state, with the key vector for one part of the input. A common choice of comparison is the dot product. The result is one number per part of the input that represents its relevance.

Use in NLP Tasks: In natural language processing tasks, for example, attention scores help the model decide which words or phrases are most important for understanding the meaning of a sentence or document.

Performance Impact: How well the attention scores reflect true relevance strongly influences the model's ability to process and understand complex inputs.
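As a minimal sketch of score calculation, the snippet below uses the dot product between one query vector and several key vectors. The dot product is only one possible scoring function, and the function names and toy vectors are invented for illustration.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def attention_scores(query, keys):
    """Raw (unnormalized) relevance score of each key for one query."""
    return [dot(query, key) for key in keys]


# Toy 2-dimensional vectors, invented for illustration.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

scores = attention_scores(query, keys)
print(scores)  # [1.0, 0.0, 0.5]
```

The key that points in the same direction as the query gets the highest score, and the orthogonal key gets zero.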

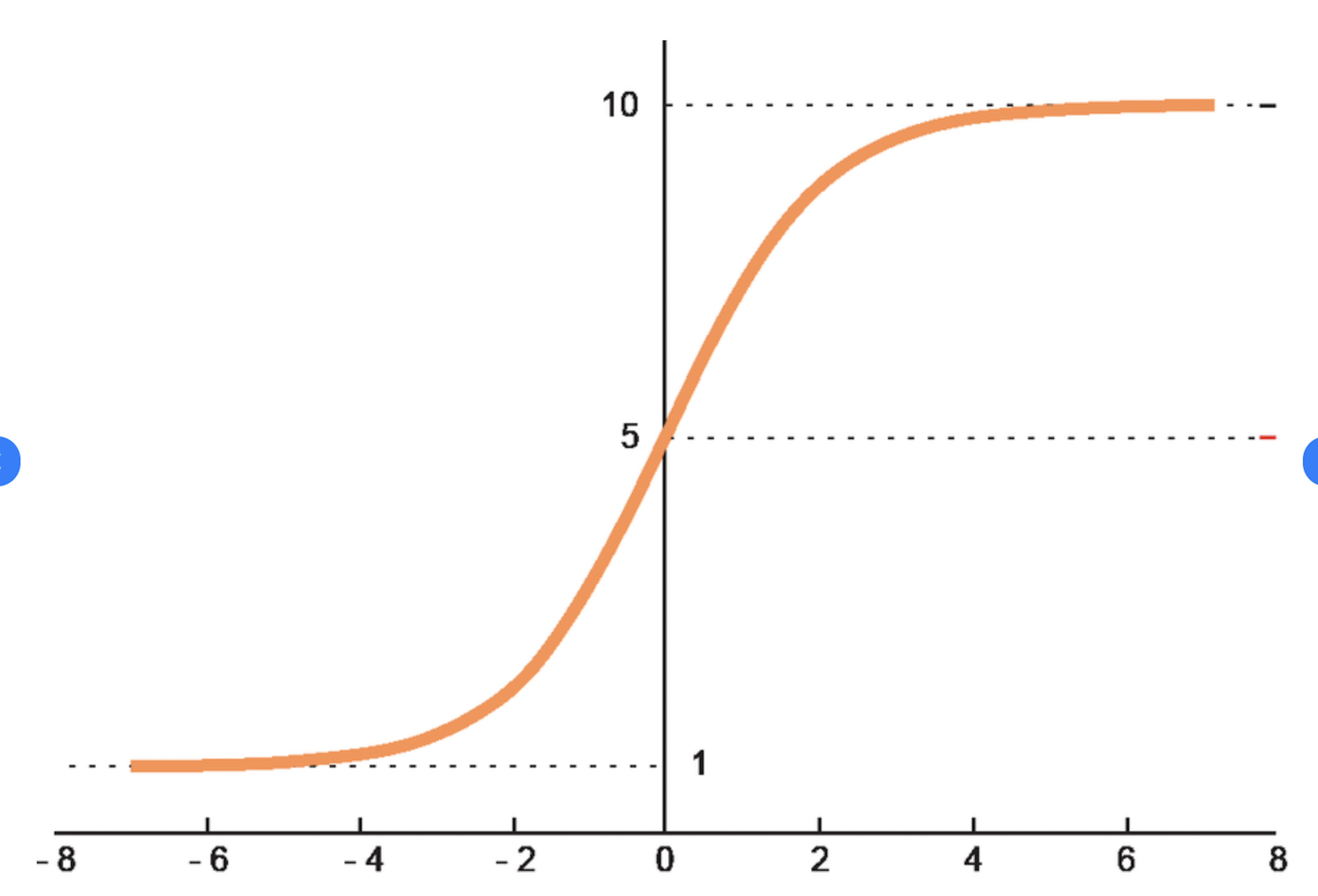

The Softmax Function's Role

Graphical representation of the softmax function

The softmax function normalizes attention scores across the input data, turning them into weights that are positive and sum to one.

Normalization: By converting attention scores into probabilities, the softmax function ensures that the total focus across all parts of the input sums to one.

Distributive Focus: Because every part of the input receives a nonzero weight, no part is discarded outright. Softmax does not force an even spread, though: when one score is much larger than the rest, nearly all of the weight goes to that part.
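The normalization step can be sketched in a few lines of standard-library Python. Subtracting the maximum score before exponentiating is a common numerical-stability trick and does not change the result; the input scores are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


weights = softmax([1.0, 0.0, 0.5])
print([round(w, 3) for w in weights])  # [0.506, 0.186, 0.307]
```

The ordering of the scores is preserved, and the lowest-scoring part still keeps a small share of the weight.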

Generation of Context Vectors

Context vectors are a crucial outcome of the attention mechanism. They serve as aggregated representations of the input data, refined through the lens of the model's focused attention.

Aggregation of Attended Information: Context vectors encapsulate the most relevant information as determined by the model's attention, providing a distilled representation of the input data.

Use in Prediction Tasks: These vectors are then used in subsequent stages of the model's processing, directly influencing prediction tasks by ensuring decisions are based on the most pertinent information.
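The aggregation described above is usually a weighted sum of value vectors. Here is a small sketch, assuming the weights have already been normalized to sum to one; the weights and value vectors are invented for illustration.

```python
def context_vector(weights, values):
    """Weighted sum of value vectors; weights are assumed to sum to 1."""
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


weights = [0.5, 0.25, 0.25]
values = [[2.0, 0.0], [0.0, 4.0], [4.0, 4.0]]

context = context_vector(weights, values)
print(context)  # [2.0, 2.0]
```

The context vector has the same dimension as a single value vector, regardless of how many parts the input has.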

Dynamic Nature of Attention Mechanisms

The dynamic adaptability of attention mechanisms is what sets them apart from traditional neural network architectures. Unlike static models, attention-equipped models can adjust their focus based on the evolving relevance of different parts of the input data.

Adaptation to Task Requirements: Whether the task involves understanding a piece of text or interpreting a complex image, the model's attention shifts to highlight the most relevant information.

Contrast with Traditional Architectures: Traditional neural network architectures lack this adaptability, often processing input data in a more uniform manner without the ability to dynamically shift focus.

In summary, the technical workings of attention mechanisms in deep learning represent a significant departure from traditional neural network approaches. By mimicking the human ability to focus selectively, these mechanisms enable models to process information more efficiently and accurately, particularly in complex scenarios such as those encountered in natural language processing tasks. The dynamic nature of attention, powered by weights, attention scores, the softmax function, and the generation of context vectors, underscores the unique approach of attention mechanisms to handling input data.
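Putting the pieces together, the sketch below runs the whole pipeline for a single query: scores, softmax weights, and a context vector. It assumes dot-product scoring scaled by the square root of the vector dimension, as in the Transformer paper; the function name `attend` and the toy data are hypothetical.

```python
import math


def attend(query, keys, values):
    """Single-query scaled dot-product attention.

    Returns (context, weights). The sqrt(d) scaling follows the
    Transformer paper; it is an assumption of this sketch.
    """
    d = len(query)
    # 1. Attention scores: one scaled dot product per key.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # 2. Softmax: positive weights that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # 3. Context vector: weighted sum of the value vectors.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
context, weights = attend([3.0, 0.0], keys, values)
print(weights, context)
```

Because the query aligns with the first key, most of the weight, and therefore most of the context vector, comes from the first value.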

Types of Attention Mechanisms

Deep learning research and applications have seen the advent and evolution of various attention mechanisms, each tailored to enhance performance in specific scenarios. Understanding the nuances of these mechanisms grants insight into their pivotal role in advancing machine learning models.

Soft and Hard Attention

Soft Attention: Offers a more flexible approach by allowing the model to use all parts of the input data but with varying degrees of focus. This mechanism is differentiable, making it easier to train using standard backpropagation techniques.

Hard Attention: Selects specific parts of the input to attend to and ignores the rest. This selection process introduces non-differentiability, requiring alternative training methods like reinforcement learning. Hard attention can be cheaper at inference time, because only the selected parts are processed, but it is harder to train and less flexible than soft attention.
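The difference between the two can be sketched as follows. Soft attention blends every value vector, while hard attention picks one. Sampling the index from the weights is one common formulation and is an assumption of this sketch; the function names and data are invented for illustration.

```python
import random


def soft_attention(weights, values):
    """Blend every value vector according to its weight (differentiable)."""
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def hard_attention(weights, values, rng):
    """Sample a single value vector with probability given by its weight.

    The discrete choice is not differentiable, which is why training
    typically relies on methods such as reinforcement learning.
    """
    index = rng.choices(range(len(values)), weights=weights, k=1)[0]
    return values[index], index


weights = [0.7, 0.2, 0.1]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

soft = soft_attention(weights, values)
hard, picked = hard_attention(weights, values, random.Random(0))
print(soft, hard, picked)
```

The soft result is a mixture that need not equal any single input, whereas the hard result is always exactly one of the value vectors.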

Self-Attention

Central to the operation of the Transformer model, self-attention allows a model to weigh the importance of different parts of the input data relative to each other.

Significance: It has revolutionized sequence processing by enabling models to process input data in parallel, significantly reducing training times and improving performance on tasks like language translation and document summarization.
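A minimal sketch of self-attention follows, assuming the simplest possible setup: the input vectors serve directly as queries, keys, and values. Real Transformer layers apply learned projections first, which are omitted here. All names and data are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Every position attends to every position of the same sequence.

    Simplification: queries, keys, and values are the input vectors
    themselves; real models apply learned projections first.
    """
    d = len(sequence[0])
    outputs = []
    for query in sequence:  # iterations are independent, so they can run in parallel
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in sequence]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, sequence)) for i in range(d)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs = self_attention(tokens)
print(outputs)
```

No output position depends on another output position, which is what allows the whole sequence to be processed at once instead of step by step.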

Global vs. Local Attention

Global Attention: Considers the entire input sequence when determining focus, beneficial for tasks where context from the entire sequence is necessary for accurate predictions.

Local Attention: Focuses on subsets of the input data, advantageous for tasks where only specific segments of the input are relevant to the task at hand. This approach can reduce computational complexity and improve efficiency.
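One way to picture the difference is as a mask over positions. The sketch below assumes a fixed window around a chosen center, which is only one of several ways local attention is defined; the function names and scores are hypothetical. For clarity it starts from a full list of scores, whereas a real implementation would skip computing scores outside the window, which is where the savings come from.

```python
import math


def attention_weights(scores, allowed):
    """Softmax over the allowed positions only; other positions get weight 0."""
    peak = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


def local_window(length, center, radius):
    """Boolean mask that keeps positions within `radius` of `center`."""
    return [abs(i - center) <= radius for i in range(length)]


scores = [0.2, 1.5, 0.3, 0.9, 2.0]  # invented scores

global_w = attention_weights(scores, [True] * len(scores))
local_w = attention_weights(scores, local_window(len(scores), center=1, radius=1))
print(global_w)
print(local_w)
```

With the window centered on position 1, positions 3 and 4 receive zero weight even though position 4 has the highest raw score.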

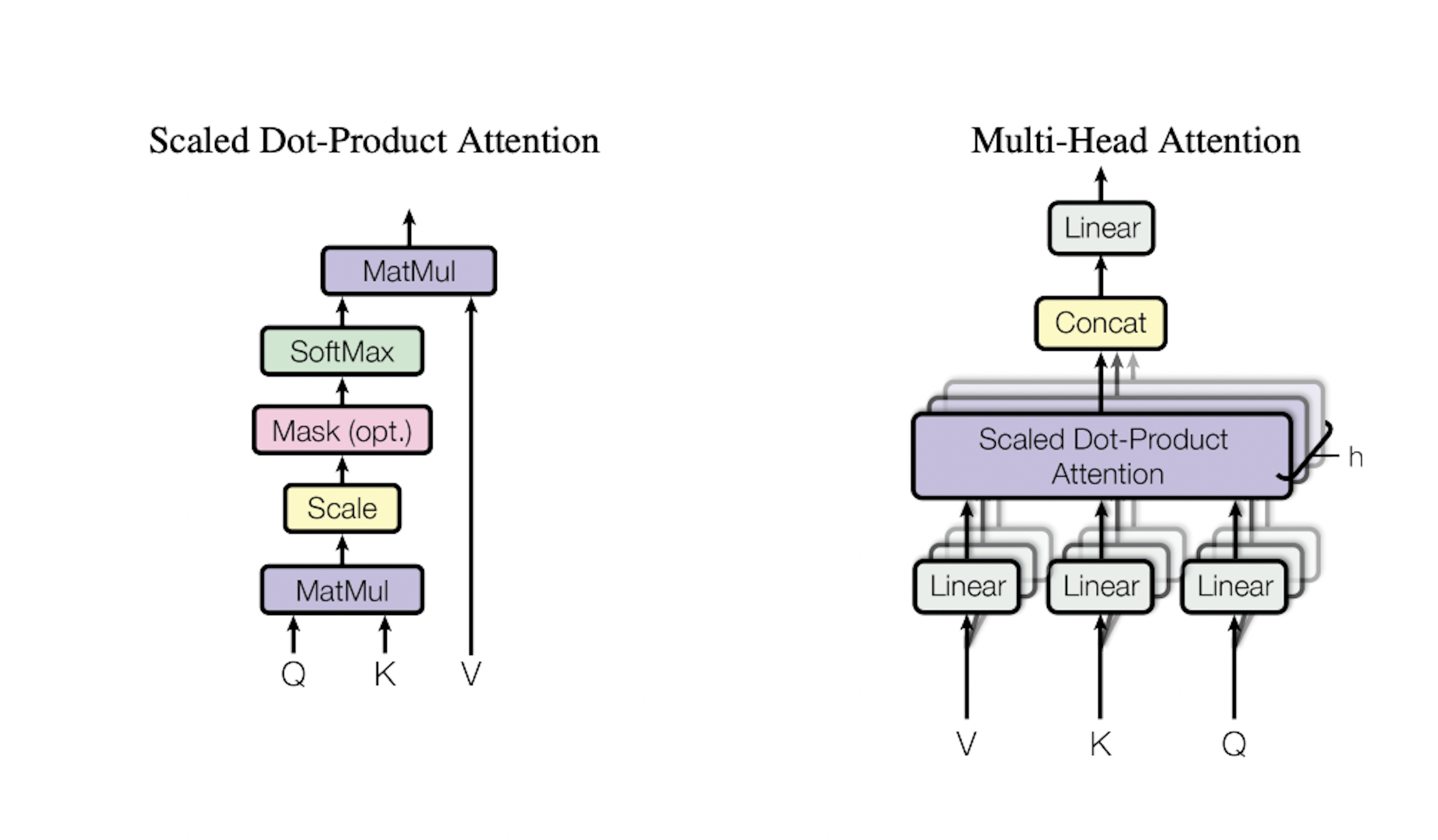

Multi-Head Attention

Multi-head attention allows the model to focus on different parts of the input simultaneously through multiple "attention heads." Each head learns to attend to different parts of the input, enabling a more comprehensive understanding.

Application: Multi-head attention is a core component of the Transformer architecture, as highlighted in the "Transformer Architecture" section from the course review on mitzon.com, where it increases the model's capacity to capture various facets of the input data.
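The idea can be sketched by splitting each vector into equal slices and running attention on every slice independently. This is a simplification: real Transformer layers use learned per-head projections and a final output projection, both omitted here. The function names and toy data are invented for illustration.

```python
import math


def attend(query, keys, values):
    """Single-query scaled dot-product attention; returns (context, weights)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


def multi_head_attention(query, keys, values, num_heads):
    """Run attention independently on equal slices of the feature dimension.

    Simplification: heads are plain slices of the vectors rather than
    learned projections.
    """
    d = len(query)
    if d % num_heads != 0:
        raise ValueError("feature size must divide evenly into heads")
    size = d // num_heads
    output, head_weights = [], []
    for h in range(num_heads):
        lo, hi = h * size, (h + 1) * size
        context, weights = attend(
            query[lo:hi],
            [k[lo:hi] for k in keys],
            [v[lo:hi] for v in values],
        )
        output.extend(context)  # concatenate the head outputs
        head_weights.append(weights)
    return output, head_weights


keys = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
values = keys
out, head_weights = multi_head_attention([2.0, 0.0, 2.0, 0.0], keys, values, num_heads=2)
print(head_weights)
```

In this toy example the first head puts most of its weight on the first key while the second head favors the second key, showing how heads can attend to different parts of the same input.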

Hierarchical Attention

Hierarchical attention is designed to process nested or hierarchical sequences, such as documents organized into paragraphs, sentences, and words.

Benefit: It allows the model to first understand the importance of smaller units (e.g., words) within larger ones (e.g., sentences), and then aggregate this information in a structured manner, improving performance on complex language and image processing tasks.
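A rough sketch of the two-level idea: attention first pools word vectors into one vector per sentence, then pools sentence vectors into one document vector. The context vectors that decide what counts as important would normally be learned; here they are fixed, hypothetical values, and the encoders that real hierarchical models place before each pooling step are left out.

```python
import math


def attention_pool(vectors, context):
    """Collapse a list of vectors into one, weighted by similarity to `context`."""
    scores = [sum(c * x for c, x in zip(context, vec)) for vec in vectors]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(vectors[0])
    return [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(dim)]


def hierarchical_attention(document, word_context, sentence_context):
    """Pool words into sentence vectors, then sentences into a document vector."""
    sentence_vectors = [attention_pool(words, word_context) for words in document]
    return attention_pool(sentence_vectors, sentence_context)


# A toy document: 2 sentences, each a list of 2-dimensional word vectors.
document = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [0.5, 0.5], [0.0, 0.0]],
]

doc_vector = hierarchical_attention(
    document, word_context=[1.0, 0.0], sentence_context=[0.0, 1.0]
)
print(doc_vector)
```

Sentences of different lengths are handled naturally, since each level reduces a variable-length list to a single fixed-size vector.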

The evolution of attention mechanisms continues to be a vibrant area of research, with ongoing efforts focused on developing more efficient and effective models. The journey from soft and hard attention to advanced concepts like multi-head and hierarchical attention showcases the field's dynamic nature, underscoring the quest for models that more closely mimic human attention and cognitive processes.

Source: "Attention Is All You Need"

Applications of Attention Mechanisms

Attention mechanisms in deep learning have revolutionized how models process and interpret vast amounts of data across various domains. By emulating the selective focus mechanism inherent in human cognition, these models achieve remarkable efficiencies and accuracies in tasks that were once considered challenging for machines.

Natural Language Processing (NLP)

Machine Translation: Attention mechanisms have dramatically improved machine translation by enabling models to focus on relevant parts of the source text, resulting in translations of higher quality.

Text Summarization: By identifying key sentences or phrases, attention mechanisms facilitate the generation of concise and informative summaries from longer texts.

Sentiment Analysis: These mechanisms help models to pinpoint the specific aspects of texts that indicate sentiment, enhancing the accuracy of sentiment analysis tasks.

These applications demonstrate the transformative power of attention mechanisms in handling complex language data, making them indispensable tools in the NLP toolkit.

Computer Vision

Image Recognition: Attention mechanisms allow models to focus on the most informative parts of an image, improving recognition accuracy even in cluttered scenes.

Object Detection: By prioritizing regions within an image, these mechanisms enhance the model's ability to detect and classify objects accurately.

The integration of attention mechanisms in computer vision tasks has led to significant advancements, enabling more precise and efficient image analysis.

Speech Recognition and Audio Processing

Attention mechanisms have contributed to substantial improvements in recognizing and transcribing spoken words with greater accuracy.

In audio processing, they help in isolating relevant sounds from noisy backgrounds, enhancing the clarity of the processed audio.

The application of attention mechanisms in audio-related tasks showcases their versatility and effectiveness in interpreting auditory data.

Recommender Systems

Attention mechanisms personalize user experiences by focusing on user preferences and behaviors, thereby improving the relevance of recommendations.

They help in filtering out noise from user data, ensuring that the recommendations are accurate and tailored to individual users.

Through the application of attention mechanisms, recommender systems have become more adept at understanding and predicting user preferences.

Healthcare

In diagnostic systems, attention mechanisms improve prediction accuracy by focusing on symptoms and data points most relevant to diagnosing a condition.

They enhance the efficiency of patient monitoring systems by prioritizing critical patient data for timely intervention.

The potential of attention mechanisms in healthcare is vast, with applications ranging from diagnostics to patient care optimization.

Robotics

Attention mechanisms enable robots to interact more effectively with their environment by focusing on relevant objects and tasks.

They facilitate advanced navigation and manipulation capabilities, allowing robots to perform complex tasks with higher precision.

The integration of attention mechanisms in robotics heralds a new era of intelligent, autonomous machines capable of performing intricate tasks.

Speculative Applications

The future impact of attention mechanisms could extend to enhancing cognitive computing systems, making them more adept at solving complex problems.

They hold potential in improving security systems through more focused surveillance and anomaly detection.

As attention mechanisms continue to evolve, their applications will likely expand, further blurring the lines between human and machine capabilities.

Advantages and Limitations of Attention Mechanisms in Deep Learning

Advantages

Increased Model Interpretability

Attention weights offer a partial view into the "black box" of deep learning by showing which parts of the input data the model weighted most heavily. They are a useful diagnostic, although a high weight does not always mean that part of the input drove the prediction.

This transparency fosters trust and facilitates debugging, aiding in the fine-tuning of models for better performance.
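In practice, inspecting attention often means ranking input tokens by their weight. The sketch below shows that step on its own; the tokens and weights are invented for illustration and do not come from a real model.

```python
def top_attended(tokens, weights, k=2):
    """Return the k (token, weight) pairs with the largest attention weights."""
    ranked = sorted(zip(tokens, weights), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


tokens = ["the", "movie", "was", "wonderful"]
weights = [0.05, 0.25, 0.10, 0.60]  # invented weights for a sentiment example

print(top_attended(tokens, weights))  # [('wonderful', 0.6), ('movie', 0.25)]
```

A ranking like this is a starting point for debugging, for example to check whether a sentiment model is attending to opinion words rather than filler.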

Enhanced Efficiency and Accuracy

By zeroing in on relevant input segments, attention mechanisms drastically improve the handling of long sequences, a boon for tasks like machine translation and text summarization.

This selective focus leads to higher accuracy rates, as the model is not bogged down by irrelevant data.

Flexibility Across Tasks and Data Types

Attention mechanisms adapt seamlessly to a wide array of tasks, from image recognition in computer vision to sentiment analysis in NLP.

Their versatility extends to handling diverse data types, making them a universal tool in the deep learning arsenal.

Limitations

Computational Overhead

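Soft attention scores every part of the input for every query, so in self-attention the compute and memory cost grows quadratically with sequence length. Hard attention processes fewer parts of the input, but the sampling-based training it typically requires adds computational demands of its own.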

This overhead can pose challenges in terms of resource allocation and can slow down the training and inference phases.

Integration Challenges

Incorporating attention mechanisms into existing models often necessitates substantial modifications to the architecture and training procedures.

This integration process demands careful tuning and optimization to achieve desired outcomes without destabilizing the model.

Risk of Overfitting

The intense focus of attention mechanisms on specific features of the training data can lead to overfitting, where the model performs well on training data but poorly on unseen data.

Balancing the model's attention to avoid over-relying on particular data features is crucial to maintaining robustness.

Ongoing Research

The realm of deep learning continuously evolves, with ongoing research dedicated to addressing the limitations of attention mechanisms. Innovations aim to reduce computational demands, streamline the integration process, and mitigate the risk of overfitting. By tackling these challenges, researchers strive to enhance the efficiency, adaptability, and reliability of attention mechanisms, solidifying their role as a cornerstone of deep learning advancements.