Capsule Neural Network

This article discusses the intricacies of Capsule Neural Networks, offering insights into their architecture, advantages, and the impact they could have on various applications.

Capsule Neural Networks (CapsNets) were designed to overcome some limitations of traditional Convolutional Neural Networks (CNNs) by modeling spatial hierarchies in data more explicitly. Introduced by Geoffrey Hinton and his colleagues, the approach replaces the scalar outputs of individual neurons with vector-valued capsules, which encode not only whether a feature is present but also properties such as its pose.

What are Capsule Neural Networks (CapsNets)?

Capsule Neural Networks (CapsNets) represent a significant shift in how neural networks model complex data structures. The architecture aims to address inherent limitations of traditional Convolutional Neural Networks (CNNs), most notably their weak handling of spatial relationships between features. The core idea of CapsNets is a more explicit modeling of spatial hierarchies within data, intended to help machines recognize objects in a way closer to human perception, where the arrangement of parts matters as much as their presence.

Innovation by Geoffrey Hinton: CapsNets were developed by Geoffrey Hinton and his collaborators. By replacing the scalar outputs common in CNNs with vectorized capsules, CapsNets represent not just whether a feature is present but also how it relates to other features.

Vectorized Capsules: At the heart of CapsNets are capsules, groups of neurons whose combined activity forms a vector. The length of this vector indicates how likely it is that a given entity is present, while its orientation encodes properties of that entity, such as position, size, and rotation. This representation captures the relationships within complex data in more detail than a single scalar activation can.

Spatial Hierarchies: Traditional CNNs often struggle to model spatial hierarchies, that is, how parts combine into wholes. CapsNets address this with capsules and a dynamic routing mechanism that sends each lower-level capsule's output to the higher-level capsules that agree with it, which helps the network preserve part-whole relationships in a way closer to human perception.

Capsule Neural Networks are a promising step toward models that capture the structure of data rather than only the presence of features. As we examine the architecture of CapsNets and explore their advantages and applications, it becomes clear that the approach could benefit fields ranging from image recognition to natural language processing.

Architecture of Capsule Neural Networks

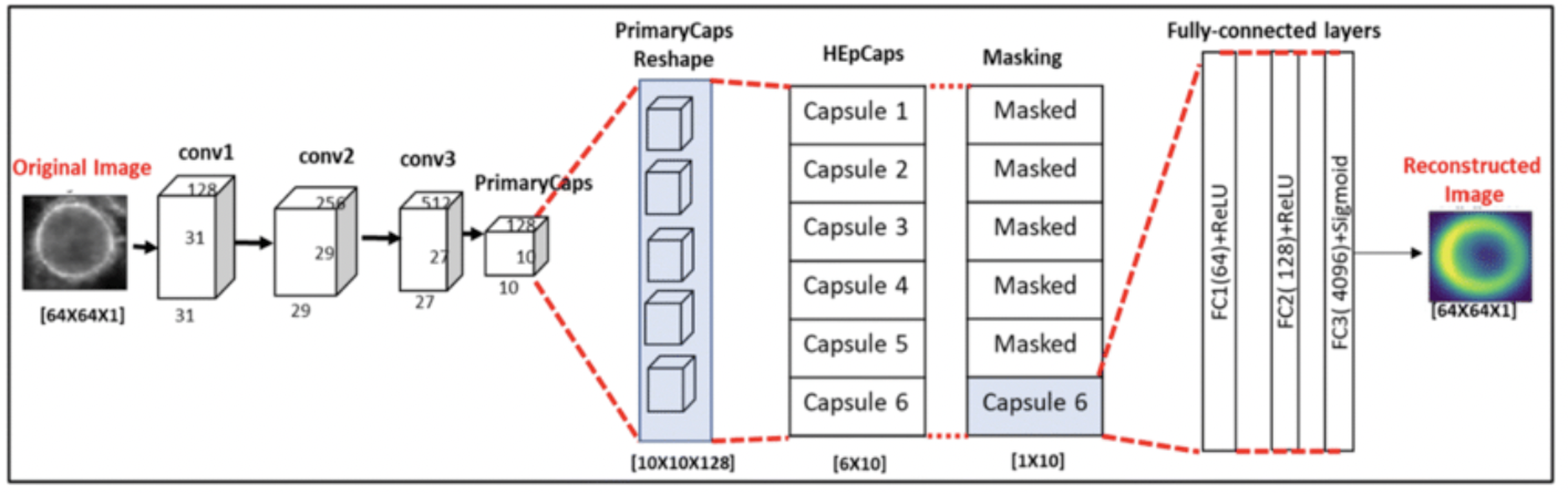

Capsule Neural Network architecture proposal (Source)

The architecture of Capsule Neural Networks (CapsNets) is a significant departure in how neural networks process intricate data structures. Unlike traditional neural network models, CapsNets are built from capsules, a dynamic routing algorithm, and a non-linearity called the Squash function. Together these components let the network represent spatial relationships and hierarchical structure explicitly, which matters for tasks that depend on how parts are arranged.

Capsules: The Building Blocks

Capsules are the foundational elements of Capsule Neural Networks. Each capsule is a group of neurons that together detect a specific type of feature within data. Whereas single neurons in conventional neural networks output scalar values, capsules output vectors. This vector encodes not just the presence of a particular feature but also its properties, such as orientation, scale, and texture, giving the network a richer representation on which to base its interpretations and predictions.

Vector Outputs: Capsules output vectors that represent both the existence and attributes of features within data.

Feature Detection: They specialize in recognizing specific types of features, contributing to the network's ability to model complex spatial hierarchies.
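A minimal sketch of how a capsule's output vector can be read, assuming the vector has already been squashed so that its length lies between 0 and 1. The function name `read_capsule` and the 2-D example vector are hypothetical and chosen for readability; capsules in the original CapsNet use 8 or 16 dimensions.

```python
import math


def read_capsule(v):
    """Split a capsule output into (presence probability, unit direction).

    Assumes v was already squashed, so its length lies in [0, 1).
    """
    length = math.sqrt(sum(x * x for x in v))
    if length == 0.0:
        # A zero vector means "feature absent"; its direction is undefined.
        return 0.0, [0.0] * len(v)
    # The direction (unit vector) carries the feature's properties.
    direction = [x / length for x in v]
    return length, direction


# Hypothetical 2-D capsule output.
prob, pose = read_capsule([0.3, 0.4])
print("presence:", prob, "pose direction:", pose)
```

Here the length (about 0.5) would be read as a 50% chance that the feature is present, and the direction (0.6, 0.8) as its properties.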

Dynamic Routing Algorithm

A pivotal aspect of CapsNet architecture is the Dynamic Routing algorithm, also called routing by agreement. Each lower-level capsule predicts the output of every higher-level capsule, and the algorithm iteratively sends more of its output to the higher-level capsules whose actual outputs agree with those predictions. This process is critical for maintaining the spatial and hierarchical relationships between features across different levels of abstraction.

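Selective Routing: The coupling coefficients between capsules are refined over a few iterations, so each lower-level capsule contributes mainly to the parents its prediction agrees with. This replaces max pooling, which keeps only the strongest activation in a region, although the iterative procedure adds computation rather than reducing it.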

Preservation of Spatial Hierarchies: It enables the network to preserve and understand spatial relationships between features, a task that traditional CNNs struggle with.
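The routing procedure described above can be sketched in plain Python. This is a simplified, hedged version of routing by agreement as described by Sabour et al. (2017): the prediction vectors `u_hat` are passed in directly instead of being computed from learned transformation matrices, and all function names here are illustrative rather than taken from any library.

```python
import math


def squash(s):
    """Keep the direction of s and map its length into [0, 1)."""
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0] * len(s)
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, num_iters=3):
    """Routing by agreement over given prediction vectors.

    u_hat[i][j] is lower capsule i's prediction for higher capsule j.
    In a full CapsNet it would be W_ij @ u_i; here it is supplied directly.
    Returns (v, c): higher-level capsule outputs and coupling coefficients.
    """
    n_lower, n_upper, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_upper for _ in range(n_lower)]  # routing logits start at zero
    for it in range(num_iters):
        # Each lower capsule splits its output across parents with a softmax.
        c = [softmax(row) for row in b]
        # Weighted sum of predictions per higher capsule, then squash.
        v = [
            squash([sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower)) for d in range(dim)])
            for j in range(n_upper)
        ]
        if it < num_iters - 1:
            # Raise logits where the prediction agrees (dot product) with the output.
            for i in range(n_lower):
                for j in range(n_upper):
                    b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c


# Two lower capsules agree about parent 0 but contradict each other about parent 1.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, -1.0]],
]
v, c = dynamic_routing(u_hat)
print("coupling:", c)
print("outputs:", v)
```

In this toy case both lower capsules end up sending most of their output to parent 0, whose predictions agree, while parent 1 stays at zero because the conflicting predictions cancel. The default of three iterations follows the original paper; treat it as a tunable hyperparameter.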

The Squash Function

The Squash function is a non-linearity that normalizes the vector output of each capsule. It shrinks the length of the output vector, which represents the probability of a feature's presence, into the range between 0 and 1: short vectors are pushed toward zero and long vectors toward a length just below 1. The direction of the vector, which encodes the feature's properties, remains unchanged. This normalization keeps feature representations consistent across the network.

Normalization: Squashes the vector's length to a value between 0 and 1, maintaining the direction.

Integrity of Representation: Ensures the consistent representation of feature properties throughout the network.
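The squash non-linearity from Sabour et al. (2017) is v = (‖s‖² / (1 + ‖s‖²)) · (s / ‖s‖), where s is the capsule's total input and v its output. A minimal sketch in plain Python follows; the small `eps` term is an assumption added here to avoid dividing by zero for an all-zero input.

```python
import math


def squash(s, eps=1e-9):
    """Squash: v = (|s|^2 / (1 + |s|^2)) * (s / |s|), as in Sabour et al. (2017)."""
    sq_norm = sum(x * x for x in s)
    # eps avoids dividing by zero when s is the zero vector.
    scale = sq_norm / (1.0 + sq_norm) / (math.sqrt(sq_norm) + eps)
    return [scale * x for x in s]


# Short, medium, and long inputs: short vectors shrink toward length 0,
# long ones approach length 1, and the direction is kept in every case.
for s in ([0.1, 0.0], [3.0, 4.0], [30.0, 40.0]):
    v = squash(s)
    print(s, "->", [round(x, 4) for x in v], "length", round(math.hypot(*v), 4))
```

For example, the input (3, 4) has length 5 and is squashed to length 25/26, roughly 0.96, while still pointing in the same direction.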

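Primary Capsules: The First Capsule Layer

Primary Capsules form the first capsule layer of the CapsNet architecture. In the original design they do not operate on raw pixels: an ordinary convolutional layer first extracts low-level features, and the PrimaryCaps layer, itself convolutional, groups its outputs into 8-dimensional vectors that are then squashed. These capsules are responsible for the initial detection of various entities within an image, identifying basic features that higher-level capsules will further process.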

Initial Feature Detection: Identifies basic features from the input data, setting the stage for more complex processing.

Foundation for Hierarchical Processing: Serves as the base layer for the hierarchical structure of CapsNets, enabling the modeling of complex spatial relationships.
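As a worked example of where the primary capsules come from, here is the shape arithmetic for the MNIST network described by Sabour et al. (2017): a 28×28 input, a 9×9 convolution (stride 1, 256 channels), then a PrimaryCaps convolution (9×9, stride 2) whose outputs form 32 types of 8-dimensional capsules. The helper name `conv_output_size` is hypothetical; the formula is the standard one for a convolution without dilation.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a square convolution without dilation."""
    return (size + 2 * padding - kernel) // stride + 1


# MNIST configuration reported by Sabour et al. (2017).
image_size = 28
conv1_size = conv_output_size(image_size, kernel=9, stride=1)    # ordinary conv, 256 channels, ReLU
primary_size = conv_output_size(conv1_size, kernel=9, stride=2)  # PrimaryCaps grid
capsule_types, capsule_dim = 32, 8
primary_conv_channels = capsule_types * capsule_dim              # 256 channels, grouped into 8-D vectors
num_primary_capsules = primary_size * primary_size * capsule_types

print(conv1_size, primary_size, primary_conv_channels, num_primary_capsules)  # 20 6 256 1152
```

Each of the 1,152 resulting 8-D vectors is passed through the squash function before being routed to the next capsule layer.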

The architecture of Capsule Neural Networks marks a significant departure from traditional neural network models. With capsules that represent a range of feature properties, a dynamic routing algorithm that assigns these features to higher-level capsules by agreement, and the Squash function that normalizes these representations, CapsNets offer a promising route toward a deeper understanding of complex data structures. Their design makes them an active area of artificial intelligence research, with the potential to open new possibilities in machine learning and data interpretation.

Advantages and Drawbacks of Capsule Neural Networks

Capsule Neural Networks (CapsNets) promise to address some critical limitations of traditional Convolutional Neural Networks (CNNs). However, as with any new technique, they bring their own challenges alongside their benefits. This section examines both, highlighting where CapsNets could help and the obstacles researchers still face.

Advantages of Capsule Neural Networks

Enhanced Generalization to New Viewpoints: CapsNets are designed to generalize to new viewpoints with less data augmentation. CNNs typically learn robustness to viewpoint from many transformed copies of the training data, whereas CapsNets encode pose explicitly and learn how parts relate to wholes, which can reduce the need for augmented data. In the original dynamic routing paper, a CapsNet trained on MNIST digits generalized better to affine-transformed digits than a CNN with a comparable number of parameters.

Reduced Need for Large Labeled Datasets: CapsNets may perform well with smaller labeled datasets. Because they encode a richer set of features and relationships within the data, they can, in principle, need fewer examples to reach a given level of accuracy, although how much this helps depends on the task.

Better Recognition of Hierarchical Relationships: By modeling part-whole relationships explicitly, CapsNets can better recognize hierarchical structures within data. This matters for tasks where the arrangement of components is crucial, such as complex image recognition and some natural language processing tasks.

Drawbacks of Capsule Neural Networks

Increased Computational Requirements: The sophisticated architecture of CapsNets, including the dynamic routing mechanism and vector outputs from capsules, leads to higher computational demands. This increase in computational complexity can make the training and deployment of CapsNets more resource-intensive compared to traditional CNNs.
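A back-of-envelope count makes the extra cost concrete. This sketch assumes the MNIST layer sizes reported by Sabour et al. (2017) and counts only the weights and multiply-adds between the primary capsules and the 10 output capsules; it ignores the convolutional layers and biases, so treat the numbers as illustrative.

```python
# Sizes from the MNIST CapsNet of Sabour et al. (2017); edit to explore other designs.
num_primary, primary_dim = 1152, 8  # 6 x 6 x 32 primary capsules, 8-D each
num_digit, digit_dim = 10, 16       # one 16-D output capsule per digit class
routing_iters = 3

# Each (primary, digit) pair has its own 16 x 8 transformation matrix W_ij.
transform_params = num_primary * num_digit * digit_dim * primary_dim
prediction_vectors = num_primary * num_digit

# Multiply-adds to form every prediction u_hat_{j|i} = W_ij u_i for one input.
prediction_macs = prediction_vectors * digit_dim * primary_dim

# Upper bound for routing: per iteration, one weighted sum and one agreement
# dot product per prediction (the last iteration actually skips the latter).
routing_macs = routing_iters * prediction_vectors * digit_dim * 2

print(transform_params, prediction_vectors, prediction_macs, routing_macs)
```

The transformation matrices alone hold about 1.47 million weights, and the routing iterations must run sequentially for every input, at inference time as well as during training.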

Challenges in Training Stability: The training of CapsNets can sometimes be unstable, with the network struggling to converge to a satisfactory solution. This instability is partly due to the complex interactions between capsules and the dynamic routing process, which can be sensitive to the initial configuration and hyperparameter settings.

Addressing Spatial Relationships and Pose Estimation

CapsNets are designed to handle spatial relationships and pose estimation, an area where traditional CNNs often falter. Because capsule vectors encode not only features but also their spatial orientation and relationships, a capsule's output can change predictably when an object's pose changes while its length, the presence probability, stays stable. This is intended to let CapsNets recognize objects consistently when viewed from different angles or in various configurations, which makes them promising for applications like 3D modeling and augmented reality, where accurate pose estimation is key.
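The property described here, pose changing with the object while recognition stays stable, is often called equivariance. The sketch below, assuming a 2-D capsule for readability, checks one piece of it: the squash function commutes with rotation, so if a capsule's input rotates with the object, its output rotates too while its length stays the same. Whether a trained network's capsule inputs actually rotate with the object depends on what it has learned; the input vector here is hypothetical.

```python
import math


def squash(s):
    """Keep the direction of s and map its length into [0, 1)."""
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0] * len(s)
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]


def rotate(v, angle):
    """Rotate a 2-D vector counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]


# Hypothetical pre-squash input for one capsule and a 90-degree viewpoint change.
s_upright = [1.5, 0.5]
s_turned = rotate(s_upright, math.pi / 2)

v_upright = squash(s_upright)
v_turned = squash(s_turned)

# Presence (length) is unchanged: an invariant quantity.
print(math.hypot(*v_upright), math.hypot(*v_turned))
# Pose (direction) rotates with the input: an equivariant quantity.
print(v_turned, rotate(v_upright, math.pi / 2))
```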

Optimizing CapsNet Architecture for Various Applications

Optimizing the architecture of CapsNets for diverse applications presents a significant challenge for researchers. The unique advantages of CapsNets, such as their proficiency in handling spatial relationships, must be balanced against their computational demands and training stability issues. Innovations in efficient routing algorithms, capsule design, and training methodologies are critical areas of ongoing research aimed at making CapsNets more accessible and practical for a broader range of applications.

In summary, Capsule Neural Networks offer a compelling alternative to traditional CNNs, with their improved handling of spatial relationships, reduced reliance on data augmentation, and ability to recognize complex hierarchical structures. However, realizing their full potential requires overcoming significant challenges, including their increased computational requirements and the need for stable training algorithms. As research in this area progresses, CapsNets could extend the ability of artificial intelligence to understand and interpret the world around us.

Capsule Neural Networks vs. Convolutional Neural Networks

In artificial intelligence and image processing, both Capsule Neural Networks (CapsNets) and Convolutional Neural Networks (CNNs) are important technologies. Yet they differ significantly in how they interpret images, both in method and in outcome. This section examines the fundamental differences between CapsNets and CNNs, focusing on how they affect tasks like image classification and object detection.

Fundamental Approach to Image Understanding

CapsNets: CapsNets employ a vectorized approach to feature detection. Each capsule, or group of neurons, is responsible for identifying various properties of a particular feature, such as orientation and position. This method allows CapsNets to maintain the spatial hierarchies between features, offering a deeper level of understanding of the image data.

CNNs: Conversely, CNNs use scalar outputs to detect features within an image. While effective for many applications, this approach can struggle to capture the spatial relationships between features, partly because pooling layers discard the precise position of a detected feature. CNNs therefore often rely on extensive data augmentation, such as rotations and scaling, to generalize beyond their training data.
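One frequently cited reason for this weakness is max pooling, which keeps only the strongest activation in each region. The toy sketch below uses hypothetical 1-D feature maps: two inputs with the same feature at different positions pool to identical scalars, while a pooled output that also records the position (a simple stand-in for a capsule's pose information, not an actual capsule) keeps them apart. The function names are illustrative.

```python
def max_pool_1d(feature_map, window):
    """Non-overlapping 1-D max pooling: one scalar per window."""
    return [max(feature_map[i:i + window]) for i in range(0, len(feature_map), window)]


def vector_pool_1d(feature_map, window):
    """Toy alternative: keep (activation, position within window) per window."""
    out = []
    for start in range(0, len(feature_map), window):
        chunk = feature_map[start:start + window]
        best = max(range(len(chunk)), key=lambda k: chunk[k])
        out.append((chunk[best], best))
    return out


left = [0.9, 0.0, 0.0, 0.0]   # feature at the left edge of the window
right = [0.0, 0.0, 0.0, 0.9]  # same feature at the right edge

print(max_pool_1d(left, 4), max_pool_1d(right, 4))        # identical scalars
print(vector_pool_1d(left, 4), vector_pool_1d(right, 4))  # positions differ
```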

Rotational Invariance and Part-Whole Relationships

CapsNets: CapsNets aim to handle changes in viewpoint, such as rotation, and to understand part-whole relationships. Rather than becoming fully invariant to rotation, their vector outputs are intended to be equivariant: the pose encoded in a capsule changes with the object while its presence probability stays stable. This can let CapsNets recognize an object in orientations that are underrepresented in training, with less of the data augmentation that CNNs rely on, and interpret objects and their features across various configurations and viewpoints.

CNNs: Because CNN features are scalar, CNNs can fail to recognize an object that appears in an orientation not covered by their training data. They can also struggle with part-whole relationships: a network that mainly checks whether the right parts are present may accept an image whose parts are in the wrong arrangement, such as a face with its eyes and mouth swapped. This limitation can hinder their performance in complex image recognition tasks.
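A toy illustration of the part-whole problem, with hand-set offsets standing in for the learned transformation matrices of a real CapsNet: a detector that only counts which parts are present scores a scrambled face the same as a real one, while a check that asks whether each part's prediction of the face center agrees tells them apart. All names, coordinates, and offsets are hypothetical, and neither function is an actual CNN or CapsNet.

```python
import math

# Hypothetical offsets: where each part expects the face center to be,
# relative to the part's own position (hand-set here, learned in a CapsNet).
OFFSETS = {"left_eye": (1.0, -1.0), "right_eye": (-1.0, -1.0), "mouth": (0.0, 1.5)}


def bag_of_parts_score(parts):
    """Presence-only detector: fraction of expected parts that were found."""
    return sum(1 for name in OFFSETS if name in parts) / len(OFFSETS)


def agreement_score(parts):
    """Part-whole check: do the parts' predictions of the face center agree?"""
    preds = [(x + OFFSETS[n][0], y + OFFSETS[n][1]) for n, (x, y) in parts.items()]
    cx = sum(p[0] for p in preds) / len(preds)
    cy = sum(p[1] for p in preds) / len(preds)
    spread = sum(math.hypot(px - cx, py - cy) for px, py in preds) / len(preds)
    return 1.0 / (1.0 + spread)  # 1.0 when all predictions coincide


face = {"left_eye": (-1.0, 1.0), "right_eye": (1.0, 1.0), "mouth": (0.0, -1.5)}
scrambled = {"left_eye": (0.0, -1.5), "right_eye": (-1.0, 1.0), "mouth": (1.0, 1.0)}

print(bag_of_parts_score(face), bag_of_parts_score(scrambled))  # both 1.0
print(agreement_score(face), agreement_score(scrambled))        # 1.0 vs. much lower
```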

Implications for Image Classification and Object Detection

CapsNets: Their handling of spatial hierarchies and viewpoint changes makes CapsNets potentially valuable for tasks that require a detailed understanding of image content. If they can classify images and detect objects accurately with less data augmentation or preprocessing, they could lead to more efficient and effective AI systems for applications ranging from medical imaging to autonomous vehicle navigation.

CNNs: Despite their limitations, CNNs remain widely used for image classification and object detection, thanks to their relative simplicity, efficiency, and the extensive body of research supporting them. CapsNets offer an alternative that may encourage a shift toward architectures that model image structure more explicitly, but they have not displaced CNNs in these domains.

In summary, the comparison between Capsule Neural Networks and Convolutional Neural Networks highlights two different ways for AI systems to interpret images. CapsNets, with their vectorized approach to feature detection and explicit modeling of spatial hierarchies, are a promising alternative to CNNs, particularly for applications requiring a detailed understanding of image data. As research continues, it will become clearer how far CapsNets can reshape image classification and object detection.

Applications of Capsule Neural Networks

Capsule Neural Networks (CapsNets) have been explored across various domains as an alternative to traditional methods. Their emphasis on preserving hierarchical relationships in data is a natural fit for several of these tasks. This section surveys applications of CapsNets in medical image analysis, facial recognition, and natural language processing.

Medical Image Analysis

Disease Diagnosis: CapsNets have shown promise for diagnosing diseases from medical scans. Medical images are highly variable and complex, which can challenge traditional Convolutional Neural Networks (CNNs). Because CapsNets model spatial hierarchies and relationships within the data, they may identify pathological features, such as tumors in MRI scans or abnormalities in X-ray images, more reliably.

Segmentation Tasks: Beyond diagnosis, CapsNets have been applied to segmentation tasks in medical imaging, such as delineating the boundaries of organs or lesions. Their ability to preserve spatial relationships suits them to segmenting complex anatomical structures, which is crucial for surgical planning and for assessing disease progression.

Facial Recognition

Source: Microsoft

Enhanced Security Systems: In security, CapsNets have been explored for biometric authentication. If they can recognize facial features reliably despite variations in angle, lighting, or facial expression, they could make facial recognition systems more dependable, strengthening security and simplifying user authentication in devices and applications.

Emotion Detection: Beyond identification, CapsNets have been applied to emotion detection. By analyzing facial expressions, potentially in real time, these networks can estimate an individual's emotional state, with possible applications ranging from customer service to more empathetic AI assistants.

Natural Language Processing

Sentiment Analysis: CapsNets have also been applied to sentiment analysis, for example of customer feedback. Because they can model how words and phrases combine, they may pick up nuanced emotional tones and contextual cues that simpler models overlook, giving businesses deeper insight into customer satisfaction and helping them tailor their services or products.

Language Translation: CapsNets have also been explored for machine translation, where their architecture may help capture syntax and semantics. This could yield more accurate and contextually appropriate translations, easing communication across language barriers and making digital content more accessible globally.

The exploration of Capsule Neural Networks in these domains shows their versatility and points toward AI systems that better reflect the structure of real-world data. As research on CapsNets expands, further advances may produce models that are both more accurate and more closely aligned with the complex structures and relationships in that data.