SQuAD

This blog post delves into the essence of the SQuAD dataset, exploring its creation, structure, evolution, and its significant role in propelling the field of natural language processing (NLP) technologies.

Have you ever pondered the question, "How do machines learn to comprehend and answer questions just like humans?" The answer lies in the monumental strides taken in the realm of machine learning and, more specifically, within the datasets that train these intelligent systems. One dataset, in particular, stands out as a cornerstone in advancing these technologies: the Stanford Question Answering Dataset (SQuAD). With over 100,000 question-answer pairs derived from Wikipedia articles, SQuAD has become a pivotal resource for training and evaluating machine learning models on the task of question answering. This blog post delves into the essence of the SQuAD dataset, exploring its creation, structure, evolution, and its significant role in propelling the field of natural language processing (NLP) technologies. Are you ready to uncover how this dataset has become a global benchmark in the development of question answering systems and what makes it so critical for the progress of machine learning? Let's dive in.

Section 1: What is the SQuAD Dataset?

The Stanford Question Answering Dataset (SQuAD) stands as a pioneering collection developed by Stanford University, specifically designed to train and evaluate machine learning models on the intricate task of question answering. Its primary aim? To drive forward the capabilities of natural language processing (NLP) technologies.

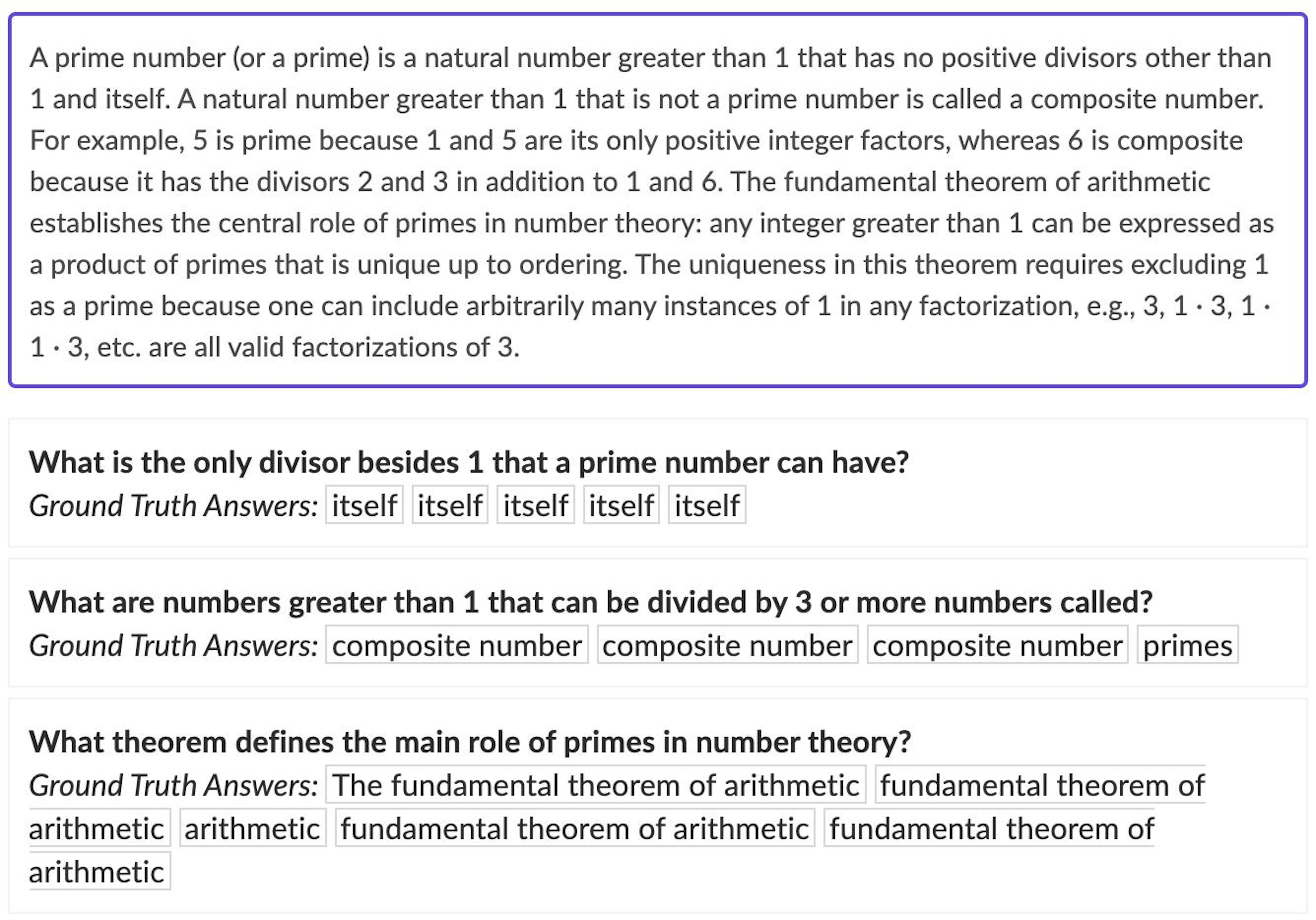

At its core, SQuAD leverages Wikipedia articles, offering a broad and diverse array of reading passages. Each passage forms the basis for question-answer pairs, ensuring a wide-ranging scope that challenges and refines the understanding capabilities of AI models. A concise definition provided by h2o.ai encapsulates SQuAD's essence perfectly, highlighting its reliance on real-world content to simulate human-like comprehension and response mechanisms.

Structurally, the dataset boasts more than 100,000 question-answer pairs across over 500 articles, as information from Kaggle reveals. This format, where answers are directly extracted as spans of text from the articles, presents a realistic and complex challenge for models to navigate and interpret.

Evolution is key to SQuAD's success. Initially introduced in its first version, the dataset underwent significant enhancements to emerge as SQuAD 2.0. This iteration introduced unanswerable questions, elevating the challenge for NLP models to distinguish between what can and cannot be answered based on the provided passages.

Open accessibility marks another cornerstone of the SQuAD dataset's philosophy. Available for research and development purposes, the dataset encourages exploration and innovation within the field. Stanford's official SQuAD explorer page serves as the gateway to this treasure trove of data, inviting academics and developers alike to delve into its depths.

The machine learning community, spanning across the globe, has embraced SQuAD wholeheartedly. Its role in benchmarking the progress of question answering systems cannot be overstated, with platforms like the TensorFlow Datasets catalog and Hugging Face datasets page featuring SQuAD prominently.

Ultimately, the significance of the SQuAD dataset transcends its immediate utility. It spearheads advancements in machine learning, particularly in the arenas of reading comprehension and question answering. The research and development it has stimulated within both academic and industrial spheres underscore its pivotal role in shaping the future of AI technologies.

Section 2: How is the SQuAD Dataset used in NLP?

The SQuAD dataset has carved out a niche for itself in the domain of natural language processing (NLP), serving as a benchmark and a tool for advancing the capabilities of AI in understanding and processing human language. Its applications span across various facets of NLP, driving innovation and enhancing the functionality of machine learning models.

Training and Evaluating QA Systems

Benchmarking Excellence: The SQuAD dataset serves as a critical benchmark for evaluating the performance of NLP models in question answering (QA) systems. Its inclusion among popular benchmark datasets on Papers with Code evidences its widespread acceptance and utility in gauging model performance across a variety of NLP tasks.

Diverse Applications: From basic fact retrieval to complex inferencing, SQuAD supports a spectrum of QA tasks, challenging models to demonstrate comprehension on par with human understanding.

Fine-tuning Pre-trained Models

Boosting QA Capabilities: By fine-tuning models like BERT and XLNet on the SQuAD dataset, researchers achieve superior question answering capabilities. This process, as outlined by iq.opengenus.org, is pivotal in adapting general language models to specialized QA tasks.

Critical for Specialization: The fine-tuning process underscores the importance of tailoring general models to perform specialized tasks, ensuring that the AI systems can handle the nuances and complexities of real-world language use.

Role in Research

Challenging the Status Quo: SQuAD's complexity and diversity provoke models to improve their natural language understanding, driving research in new model architectures, training algorithms, and NLP techniques.

A Testbed for Innovation: Its role in research cannot be overstated, with SQuAD acting as a proving ground for experimental approaches and cutting-edge developments in machine learning.

Academic Use

Educational Resource: In academic settings, SQuAD enriches courses and research projects, teaching advanced NLP concepts and offering hands-on learning experiences through platforms like Coursera.

Exploring NLP Concepts: The dataset facilitates a deeper understanding of machine learning and NLP principles among students and researchers, fostering a new generation of AI specialists.

Real-world Applications

Enhancing User Interactions: Training on SQuAD helps develop applications like virtual assistants and customer service bots that process and understand user queries more effectively.

Improving Information Retrieval: The dataset's impact extends to information retrieval systems, enabling them to deliver precise and relevant answers to user inquiries.

Contribution to Transfer Learning

Facilitating Language Adaptation: Models trained on SQuAD can be adapted to other languages and domains with minimal additional training, showcasing the dataset's contribution to transfer learning in NLP.

Multilingual Extensions: The XQuAD and MLQA datasets exemplify multilingual extensions of SQuAD, broadening the scope of language models to understand and interact in diverse linguistic environments.

Ongoing Evolution

Continuous Improvement: The SQuAD dataset is in a state of perpetual evolution, with ongoing efforts to expand its scope, enhance its quality, and overcome existing limitations.

Anticipating Future Challenges: The community eagerly anticipates future versions of SQuAD, poised to tackle even more sophisticated NLP challenges and push the boundaries of machine understanding.

The journey of the SQuAD dataset in the landscape of NLP is a testament to its foundational role in advancing the field. From training state-of-the-art models to fostering research and development, SQuAD continues to shape the future of machine learning and artificial intelligence.