Model Interpretability

This article delves deep into the essence of model interpretability, shining a light on its crucial role across various sectors, from healthcare to finance.

With a staggering 91% of data professionals emphasizing the importance of interpretability for business stakeholders, it's clear that understanding the "why" behind model predictions isn't just a preference—it's a necessity.

You'll discover how interpretability helps balance model complexity with transparency, supports fairness, and satisfies regulatory demands. But more importantly, how does it foster trust among end-users? Let's explore why making the complex understandable matters.

What is Model Interpretability

Interpretability in machine learning is the degree to which a human can understand why a model made a given decision, and can therefore judge whether to trust it. It bridges sophisticated computational models and the practical settings where they are applied, in which trust and transparency matter most. Consider the following facets of model interpretability:

Healthcare and Life Sciences: Interpretability is paramount in healthcare and life sciences, where decisions can quite literally mean the difference between life and death. When healthcare professionals understand the rationale behind a model's prediction, they can judge when to trust these tools, which supports better patient outcomes.

Complexity vs. Interpretability: A delicate balance exists between a model's complexity and its interpretability. As algorithms become more intricate, their decision-making processes become harder to comprehend. This tug-of-war calls for designing models that are both powerful and understandable.

Ensuring Fairness and Compliance: Interpretability also helps teams debug models, improve their performance, and check that they make unbiased decisions. This is especially important in sectors like finance and healthcare, where explanations are often required by regulation rather than merely expected on ethical grounds.

Fostering Model Trust: At the heart of interpretability lies the concept of 'model trust.' When end-users understand how a model arrives at its predictions, their confidence in these tools tends to grow. This trust is foundational, especially in applications where users rely on model predictions for critical decision-making.

In essence, model interpretability weaves the thread of trust through the fabric of machine learning applications. It ensures that as we venture further into the age of AI, our reliance on these models is built on a solid foundation of understanding and transparency.

Types of Model Interpretability Evaluation

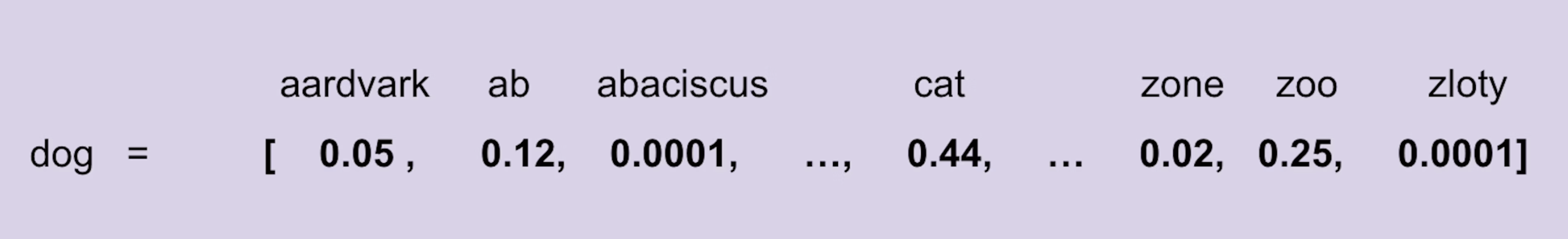

An early form of word vectorization offers an example of interpretable embeddings: every entry corresponds to the probability that two words appear in the same document.
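To make the idea concrete, here is a minimal Python sketch of such co-occurrence vectors. It assumes simple lowercase whitespace tokenization and estimates each probability as the fraction of documents that contain both words; the function name `cooccurrence_vectors` and the toy corpus are hypothetical. Because every coordinate is labeled by a word, a reader can inspect any entry directly, which is what makes the representation interpretable.

```python
from itertools import combinations


def cooccurrence_vectors(documents):
    """Map each word to {other_word: P(both words appear in the same document)}.

    Probabilities are estimated as the fraction of documents containing both
    words; tokenization is a lowercase whitespace split (an assumption).
    """
    doc_sets = [set(doc.lower().split()) for doc in documents]
    vocab = sorted(set().union(*doc_sets))
    n_docs = len(doc_sets)
    counts = {w: {v: 0 for v in vocab} for w in vocab}
    for words in doc_sets:
        for w in words:
            counts[w][w] += 1  # a word always co-occurs with itself
        for a, b in combinations(sorted(words), 2):
            counts[a][b] += 1  # each unordered pair counted once per document
            counts[b][a] += 1
    return {w: {v: c / n_docs for v, c in row.items()} for w, row in counts.items()}


# Hypothetical toy corpus.
docs = ["the cat sat", "the dog sat", "a cat purred"]
vectors = cooccurrence_vectors(docs)
print(vectors["the"]["sat"])  # both words appear in 2 of the 3 documents
```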

In the quest for transparency within machine learning models, various interpretability methods have emerged, and with them the question of how to judge whether a method actually works. A survey by Two Sigma, building on a taxonomy proposed by Doshi-Velez and Kim, groups approaches to evaluating interpretability into three primary types: application-grounded, human-grounded, and functionally grounded evaluation. Each offers a different perspective on model interpretability, suited to different needs and scenarios.

Application-Grounded Evaluation

Application-grounded evaluation stands out for its emphasis on practical application. This method involves:

Direct involvement of a human in the loop: People, typically domain experts, use the model and its explanations to carry out a real task, so the evaluation is grounded in practical use.

Tailored to specific applications: The evaluation focuses on how well the interpretability method aids in understanding model decisions within a particular domain, such as healthcare diagnostics or financial forecasting.

Strengths: Provides direct feedback on the utility of interpretability methods in real-world applications, ensuring that the insights are actionable and relevant.

Weaknesses: Time-consuming and resource-intensive, as it requires setting up specific application scenarios and direct human involvement.

Human-Grounded Evaluation

Human-grounded evaluation takes a more general approach:

Simplified tasks to assess interpretability: Instead of in-depth application scenarios, this method uses simplified tasks that evaluate whether model explanations make sense to average users.

Tools and techniques: Explanations produced by popular tools like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are common subjects of this kind of evaluation, for example by checking whether participants shown an explanation can correctly predict what the model will output.

Strengths: More scalable than application-grounded evaluation, allowing for quicker, broader insights into model interpretability.

Weaknesses: May not fully capture the complexity of real-world applications, potentially oversimplifying the interpretability needs of specific scenarios.
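One simple human-grounded measure, sometimes called forward simulation, shows participants an input and its explanation and asks them to predict the model's output; higher agreement suggests a more useful explanation. The Python sketch below scores such trials per explanation condition. The condition labels and trial data are hypothetical, and a real study would also need enough participants and a proper statistical comparison between conditions.

```python
from collections import defaultdict


def simulation_accuracy_by_condition(trials):
    """Fraction of trials in which participants correctly predicted the model's output.

    trials: iterable of (condition, model_output, participant_guess), where
    condition names the kind of explanation shown (hypothetical labels).
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for condition, model_output, guess in trials:
        totals[condition] += 1
        hits[condition] += int(model_output == guess)
    return {c: hits[c] / totals[c] for c in totals}


# Hypothetical trials: no explanation vs. two explanation styles.
trials = [
    ("none", 1, 0), ("none", 0, 0),
    ("style_a", 1, 1), ("style_a", 0, 1),
    ("style_b", 1, 1), ("style_b", 0, 0),
]
print(simulation_accuracy_by_condition(trials))
```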

Functionally Grounded Evaluation

Functionally grounded evaluation relies on formal proxies rather than people:

Assessment based on predefined criteria: This method evaluates interpretability by checking whether a model or method meets predefined formal criteria, such as sparsity or a limited decision-tree depth, without direct human judgment.

Objective and structured: Offers a structured way to assess models based on clear, objective criteria.

Strengths: Efficient and straightforward, providing a quick means to gauge basic interpretability without extensive human involvement.

Weaknesses: Lacks the nuanced understanding that human judgment can provide, possibly overlooking practical interpretability challenges.
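As an illustration, the Python sketch below computes a few formal proxies of the kind this approach relies on: how many features a linear model uses, and how deep and how large a decision tree is. The nested-dict tree format, the function names, and the choice of proxies are all assumptions for illustration; deciding which criteria count as "interpretable" is itself a judgment the evaluator must justify.

```python
def count_nonzero_weights(weights, tol=1e-12):
    """Sparsity proxy: number of features a linear model actually uses."""
    return sum(1 for w in weights.values() if abs(w) > tol)


def tree_depth(node):
    """Depth proxy for a binary tree stored as nested dicts; leaves are plain labels."""
    if not isinstance(node, dict):
        return 0
    return 1 + max(tree_depth(node["left"]), tree_depth(node["right"]))


def count_leaves(node):
    """Size proxy: number of leaves, i.e. distinct decision paths, in the tree."""
    if not isinstance(node, dict):
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])


# Hypothetical models, scored without any human in the loop.
linear_weights = {"income": 0.8, "age": 0.0, "debt": -1.2}
tree = {
    "feature": "debt", "threshold": 0.5,
    "left": "approve",
    "right": {"feature": "income", "threshold": 3.0, "left": "deny", "right": "approve"},
}
print(count_nonzero_weights(linear_weights), tree_depth(tree), count_leaves(tree))
```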

The Trend Towards Hybrid Approaches

Interestingly, a growing number of evaluations take a hybrid approach that combines elements of all three types. The aim is to pair the practical insights of application-grounded evaluation with the scalability of human-grounded evaluation and the objectivity of functionally grounded evaluation, yielding a more comprehensive picture of a model's interpretability.

In sum, understanding the strengths and weaknesses of each evaluation approach is crucial for choosing the right one for a given scenario. As the landscape of machine learning continues to evolve, so too will the strategies for making these models transparent and understandable to their end-users.

Practical Application of Model Interpretability

Model interpretability extends far beyond a theoretical concept, embedding itself into the very fabric of industries that shape our daily lives. Healthcare, finance, and criminal justice represent sectors where the application of interpretability in machine learning models not only enhances operational efficiency but also reinforces ethical standards and compliance with regulatory mandates.

Healthcare: A Lifeline for Diagnostic Precision

In the realm of healthcare, the stakes couldn't be higher. Here, interpretability translates into the ability to uncover the rationale behind diagnostic and treatment recommendations made by AI systems. For instance, when a machine learning model identifies a novel pattern in patient data that suggests a genetic predisposition to a condition, clinicians need to understand the "why" behind this prediction before they can trust and act on it. AWS documentation underscores the importance of interpretability in healthcare, advocating for models that clinicians can interrogate for the evidence behind their conclusions. Such transparency:

Facilitates trust between clinicians and the AI tools they rely on.

Improves patient outcomes by enabling more informed decision-making.

Supports regulatory compliance with the stringent standards that apply to medical devices and software.

Finance: Decoding the Logic of Loan Approvals

The finance sector, particularly in credit scoring models, relies heavily on interpretability. Understanding why a loan application gets approved or denied is crucial not just for regulatory compliance but also for maintaining fairness and transparency. According to AWS documentation, interpretability in financial models helps identify the factors influencing these decisions. This clarity allows financial institutions to:

Provide applicants with actionable feedback, helping them improve their creditworthiness.

Detect and mitigate biases in loan approval processes.

Ensure adherence to financial regulations that mandate transparency in credit scoring.
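For an additive model such as a linear scorecard, identifying those factors can be as simple as comparing each feature's contribution against a reference applicant. The Python sketch below assumes a hypothetical linear model with made-up feature names and weights, plus an "average applicant" baseline; it illustrates the idea and is not a regulatory-grade reason-code procedure.

```python
def score_with_contributions(weights, bias, applicant, baseline):
    """Linear score plus each feature's contribution relative to a baseline applicant.

    For a linear model the contributions sum exactly to
    score(applicant) - score(baseline), so nothing is left unexplained.
    """
    score = bias + sum(w * applicant[f] for f, w in weights.items())
    contributions = {f: w * (applicant[f] - baseline[f]) for f, w in weights.items()}
    return score, contributions


def top_adverse_factors(contributions, k=2):
    """Names of the k features that lowered the score the most, worst first."""
    negative = [(f, c) for f, c in contributions.items() if c < 0]
    return [f for f, _ in sorted(negative, key=lambda item: item[1])[:k]]


# Hypothetical model, applicant, and reference applicant.
weights = {"income_k": 0.02, "debt_ratio": -3.0, "late_payments": -0.5}
applicant = {"income_k": 40, "debt_ratio": 0.6, "late_payments": 3}
baseline = {"income_k": 60, "debt_ratio": 0.3, "late_payments": 0}

score, contributions = score_with_contributions(weights, 0.0, applicant, baseline)
print(top_adverse_factors(contributions))  # ['late_payments', 'debt_ratio']
```

Because the model is additive, these contributions are an exact decomposition of the score; for non-linear models, attribution methods such as SHAP are needed to obtain a comparable breakdown.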

Criminal Justice: The Beacon of Fairness

In criminal justice, the deployment of predictive policing and risk assessment models has sparked a significant ethical debate. The crux of the matter lies in ensuring these models do not perpetuate biases or injustices. Implementing interpretability in these systems plays a pivotal role in:

Ensuring transparency in how risk assessments are conducted.

Facilitating the auditability of models to uncover any inherent biases.

Promoting fairness by making the decision-making process visible and understandable to all stakeholders.
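One common audit compares a risk model's error rates across demographic groups. The Python sketch below computes per-group false positive and false negative rates from (group, predicted, actual) records; the group labels and records are hypothetical, and deciding which metric should be equalized is a policy choice the code cannot make.

```python
from collections import defaultdict


def group_error_rates(records):
    """Per-group false positive rate (FPR) and false negative rate (FNR).

    records: iterable of (group, predicted_positive, actual_positive) booleans.
    A rate is None when the group has no cases of the relevant kind.
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, predicted, actual in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            c["fn"] += int(not predicted)  # missed a true positive
        else:
            c["neg"] += 1
            c["fp"] += int(predicted)  # flagged a true negative
    return {
        g: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for g, c in counts.items()
    }


# Hypothetical audit data for two groups.
records = [
    ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", False, False), ("B", False, False), ("B", True, True), ("B", False, True),
]
print(group_error_rates(records))
```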

Navigating the Challenges

Despite the clear benefits, the road to achieving interpretability, especially in complex models like deep neural networks, is fraught with challenges. The intricate architecture of these models often makes it difficult to pinpoint the exact rationale behind specific decisions. However, advancements in interpretability methods and tools are gradually overcoming these hurdles, paving the way for more transparent AI applications.

Advocating for a Data-Centric Approach

MIT CSAIL's Data-Centric AI (DCAI) course, in its introduction to interpretable ML, champions a data-centric approach to model development, emphasizing the importance of considering interpretability from the outset. This proactive stance helps ensure that models are not only accurate but also understandable. By prioritizing data quality and transparency in model design, developers can:

Avoid the pitfalls of biased or unexplainable models.

Build trust with end-users by providing clear insights into how predictions are made.

Foster innovation in fields where interpretability is non-negotiable, such as healthcare and criminal justice.

The journey towards fully interpretable models is ongoing, with each step forward unlocking new possibilities for applying AI in ways that are both impactful and understandable. As this field evolves, so too will our ability to harness the power of AI for the greater good, ensuring that decisions that affect human lives are made with the utmost clarity and fairness.

Model Interpretability vs Explainability

In the ever-evolving landscape of machine learning and artificial intelligence, two terms frequently surface, sparking considerable debate among developers and stakeholders alike: model interpretability and explainability. While these concepts intertwine, understanding their distinctions is crucial in the development and deployment of AI models, especially in sensitive sectors like healthcare and finance where decisions have profound implications.

Defining the Terms

Model Interpretability delves into the inner workings of machine learning models, striving for a comprehensive understanding of how models reach their conclusions. It demands a granular level of detail, enabling developers and users to trace and understand the decision-making path of the model.

Model Explainability, on the other hand, focuses on the outcomes, providing insights into the decisions made by the model without necessarily detailing the process. It answers the 'what' rather than the 'how', making it slightly more accessible but less detailed compared to interpretability.

The Importance of Detail

Interpretability requires a deeper dive into the model's mechanics, often necessitating a more sophisticated understanding of machine learning principles. This depth:

Ensures developers can troubleshoot and refine models with precision.

Facilitates regulatory compliance, particularly in sectors where understanding the decision process is mandatory.

Helps in identifying and mitigating biases within the model, promoting fairness.

Explainability, with its broader overview, allows stakeholders without technical expertise to grasp the model's decisions, fostering trust and acceptance.

Regulatory Compliance and Healthcare

Choosing between interpretability and explainability becomes pivotal in contexts where regulatory compliance enters the fray. In healthcare, for instance, regulatory bodies demand clear explanations of diagnostic and treatment recommendations made by AI. Scenarios where interpretability takes precedence include:

Developing models for diagnosing complex diseases, where understanding the rationale behind each diagnosis is crucial.

Creating treatment recommendation systems, where the stakes of each decision are high, and the reasoning must be transparent.

Conversely, explainability may suffice in less critical applications, such as patient monitoring systems, where the focus is on tracking and reporting rather than diagnosing.

Balancing Act: Accuracy vs. Understandability

A key challenge in AI development is balancing model accuracy with interpretability or explainability. Insights shared on LinkedIn highlight this dilemma, noting that as models, particularly deep learning models, grow more complex in pursuit of accuracy, they often lose transparency. Strategies to navigate this balance include:

Employing hybrid models that combine interpretable and complex models to achieve both accuracy and transparency.

Utilizing post-hoc interpretation techniques, such as LIME or SHAP, to shed light on complex model decisions.
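SHAP is built on Shapley values from cooperative game theory. To show what is being computed, the Python sketch below calculates exact Shapley values for a single prediction by enumerating every coalition of features. It assumes that a feature "absent" from a coalition takes a fixed baseline value, which is only one of several conventions; the brute-force loop grows as 2^n, which is why libraries such as SHAP rely on approximations or model-specific algorithms instead. The toy model and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley value of each feature for the prediction predict(x).

    Features outside a coalition are set to their baseline value (an assumed
    convention). Runtime is exponential in len(x), so keep n small.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model with an interaction term.
def model(z):
    return 2 * z[0] + z[0] * z[1]


phi = shapley_values(model, x=[1, 2], baseline=[0, 0])
print(phi)  # [3.0, 1.0]; they sum to model(x) - model(baseline) = 4
```

The attributions always sum to the gap between the prediction and the baseline prediction; this additivity is the property SHAP's name refers to, and it is what lets a complex model's output be broken down feature by feature.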

The Future of Model Interpretability and Explainability

The quest for making complex models both interpretable and explainable is at the forefront of AI research. Emerging AI technologies and methodologies aim to:

Develop novel approaches that enhance the transparency of deep learning models without compromising their performance.

Create standards and frameworks for interpretability and explainability that align with regulatory requirements and ethical considerations.

As AI continues to evolve, the focus on making models not just powerful but also understandable and trustworthy will remain paramount. The future direction of research in this area is poised to revolutionize how we develop, deploy, and interact with AI systems, ensuring they serve humanity in the most transparent and ethical way possible.