Every developer building voice AI knows this problem: how do you know when someone is actually done talking? Get it wrong, and your agent either interrupts mid-sentence or leaves robotic pauses.

That changes today.

Today, we're launching Flux: the first speech recognition model built for conversation, not transcription, and the first that knows when someone is actually done talking.

Not only that, you can get Flux free for all of October.

Conversational Speech Recognition Built for Voice Agents

As we outlined in our recent deep-dive on Conversational Speech Recognition, the voice AI industry needs models built specifically for real-time dialogue, not just transcription.

Flux is the first production CSR model.

While traditional automatic speech recognition (ASR) treats conversation like dictation, Flux was designed from the ground up to understand dialogue flow. It delivers turn-complete transcripts with context-aware turn detection, eliminating the need to reconstruct conversational flow by stitching together disparate signals from ASR, voice activity detectors (VADs), and endpointing triggers. For voice AI developers, this means radically simpler development and best-in-class accuracy for both turn detection and speech recognition.

With Flux, you get:

- Model-integrated turn detection that understands context and conversation flow, not just silence

- Ultra-low latency when it matters most - transcripts that are ready as soon as turns end

- Nova-3-level transcription quality - speech-to-text (STT) that’s every bit as accurate as the best models, with support for keyterm prompting

- Radically simpler development - one API replaces complex STT+VAD+endpointing pipelines, with conversation-native events designed to make voice agent development a breeze

- Configurability for your use case - the right amount of complexity, allowing developers to achieve the desired behavior for their agent

“At Pipecat, we're deeply familiar with the turn-taking problem for voice agent developers, and Flux represents a real breakthrough in the space. The fused approach — where speech recognition and turn detection happen in the same model — solves challenges we've all been wrestling with. It's exactly what voice agent developers need right now, and we’re excited to bring Flux into the Pipecat ecosystem and see what our community builds with it.” Kwindla Hultman Kramer CEO at Daily

Watch the demo above, then try it for yourself in the Flux demo.

The Problem Every Voice Agent Developer Faces

Traditional speech recognition was built for transcripts - meeting notes, captions, recordings - where the goal is to capture everything that was said with no concern for the structure of the conversation.

For voice agents, you need more: you need to know when turns start and end so your agent knows when to listen and when to respond. So developers bolt together additional systems - VADs to detect silence, endpointing layers to guess completion, custom logic to manage state.

The problem with this approach is that existing systems treat transcription and conversation timing as separate problems. You get ASR that streams partial transcripts, VAD that guesses at silence, and endpointing layers that try to patch them together.

The result is a system that just doesn’t feel natural:

- Silence-based VAD misfires on background noise and natural pauses ("I need to... think about that"), cutting off users and destroying trust.

- Semantic endpointing runs sequentially after ASR, adding latency when speed matters most, and causing robotic delays that kill engagement.

- Partial transcripts change retroactively, forcing you to guess when text is "final enough" to act on - trading transcription quality for latency or vice versa.
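
To make the first failure mode concrete, here is a minimal Python sketch of silence-timer endpointing. It is a hypothetical illustration, not any vendor's VAD: the 20 ms frame size and the `frames` and `silence_endpoint` helpers are invented for this example. It declares end of turn after a fixed run of silent frames, so it has no way to tell a thinking pause from a finished thought.

```python
from typing import List, Optional, Tuple

FRAME_MS = 20  # hypothetical VAD frame size in milliseconds


def frames(segments: List[Tuple[bool, int]]) -> List[bool]:
    """Expand (is_speech, duration_ms) segments into per-frame VAD flags."""
    flags: List[bool] = []
    for is_speech, duration_ms in segments:
        flags.extend([is_speech] * (duration_ms // FRAME_MS))
    return flags


def silence_endpoint(flags: List[bool], silence_ms: int) -> Optional[int]:
    """Return the frame index where a silence timer declares end of turn,
    or None if the required run of silence never occurs after speech."""
    needed = silence_ms // FRAME_MS  # consecutive silent frames required
    run = 0
    heard_speech = False
    for i, is_speech in enumerate(flags):
        if is_speech:
            heard_speech = True
            run = 0  # any speech resets the timer
        elif heard_speech:
            run += 1
            if run >= needed:
                return i
    return None


# "I need to... think about that": 800 ms of speech, a 700 ms thinking pause,
# 900 ms more speech, then 1000 ms of trailing silence.
utterance = frames([(True, 800), (False, 700), (True, 900), (False, 1000)])

# Aggressive 500 ms timer: fires at frame 64, inside the pause (frames 40-74).
print(silence_endpoint(utterance, 500))
# Conservative 1000 ms timer: waits out the pause and fires at frame 169,
# a full second after the user actually finished.
print(silence_endpoint(utterance, 1000))
```

The silence timer is the only knob, which is exactly the aggressive-versus-conservative tradeoff described below: shorten it and the agent cuts in during "I need to...", lengthen it and every turn pays that delay.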

This creates the impossible tradeoff every voice agent developer faces:

- Be aggressive? => Your agent cuts people off mid-sentence, destroying trust

- Be conservative? => Robotic pauses that kill engagement

But the real cost isn't just poor conversation quality - it's wasted engineering time. Developers spend months reconciling transcript streams and timing thresholds, debugging countless edge cases, and tweaking barge-in logic. Time that should go toward building intelligent agents instead goes to plumbing. You're not building features, you're debugging infrastructure.

Survey of 40 voice AI developers shows latency, turn detection errors, and complexity are the top barriers to building natural conversational agents.

Recent survey data highlights the scope of the challenge. As shown above, developers cite latency, turn detection errors, and integration complexity as their biggest blockers. And when asked about overall experience, only 2% reported being very satisfied with their voice agent conversation quality, while 61% reported noticeable to major flow issues.

"At Cloudflare, we understand what it takes to deliver real-time experiences at global scale. Deepgram has proven they can handle the performance and reliability demands that enterprise voice applications require. Flux represents the next evolution - purpose-built conversational speech recognition that eliminates the complexity and latency bottlenecks of traditional pipelines. We're excited to co-launch Flux as part of our commitment to powering the future of agentic infrastructure, giving developers the tools they need to build truly intelligent, responsive applications." Dane Knecht CTO at Cloudflare

Native Turn Detection

Most systems bolt turn-taking onto transcription. Flux takes a different approach: the same model that produces transcripts is also responsible for modeling conversational flow and turn detection.

This isn't just architectural elegance – it solves real problems:

Semantic awareness in timing. The model knows that "because…" or "uh, sorry…" isn't a complete thought, while "Thanks so much." clearly is.

Fewer false cutoffs. With complete semantic, acoustic, and full-turn context in a fused model, Flux detects turn ends accurately and avoids the premature interruptions common with traditional approaches.

No pipeline delays. When the end of a turn is detected, transcripts are already complete and accurate. No waiting for separate systems to catch up.

The result: turn predictions that are earlier when they should be and later when they need to be, with fewer false cutoffs and fewer awkward waits.

We put this to the test against silence-based VAD, semantic endpointing layers, and standalone classifiers. The results: Flux can cut agent response latency by 200–600 ms compared to pipeline approaches, while reducing false interruptions by ~30%.

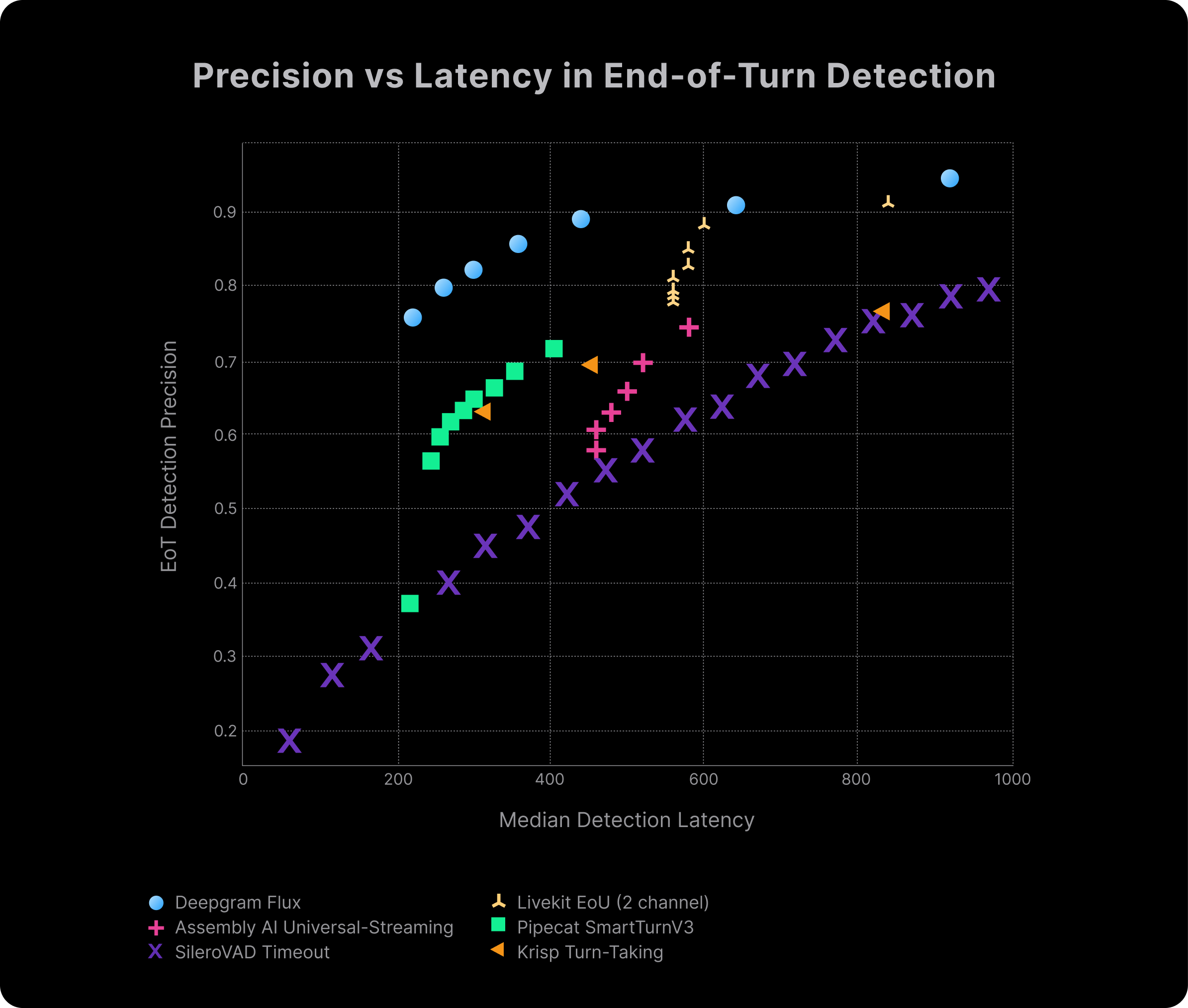

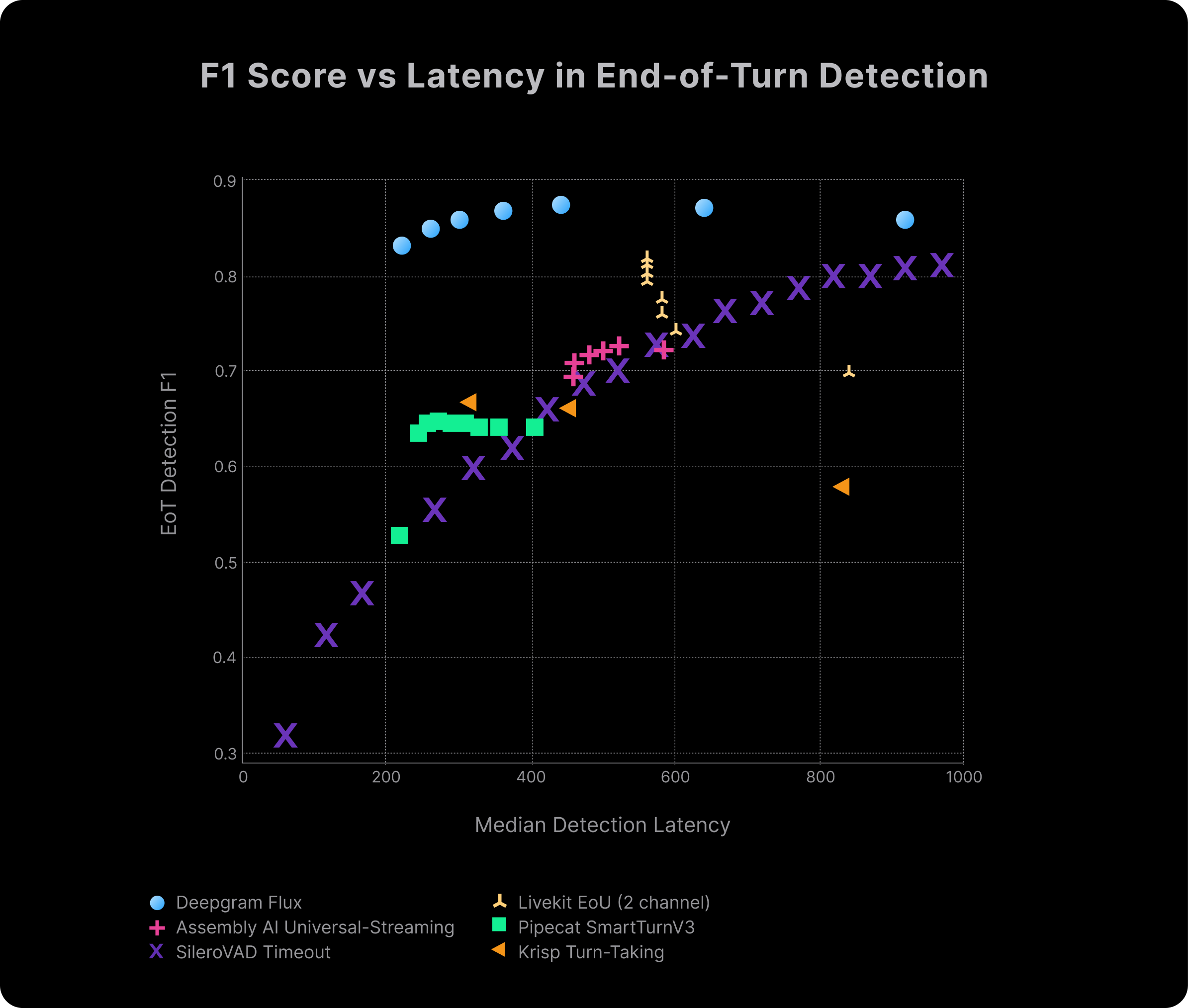

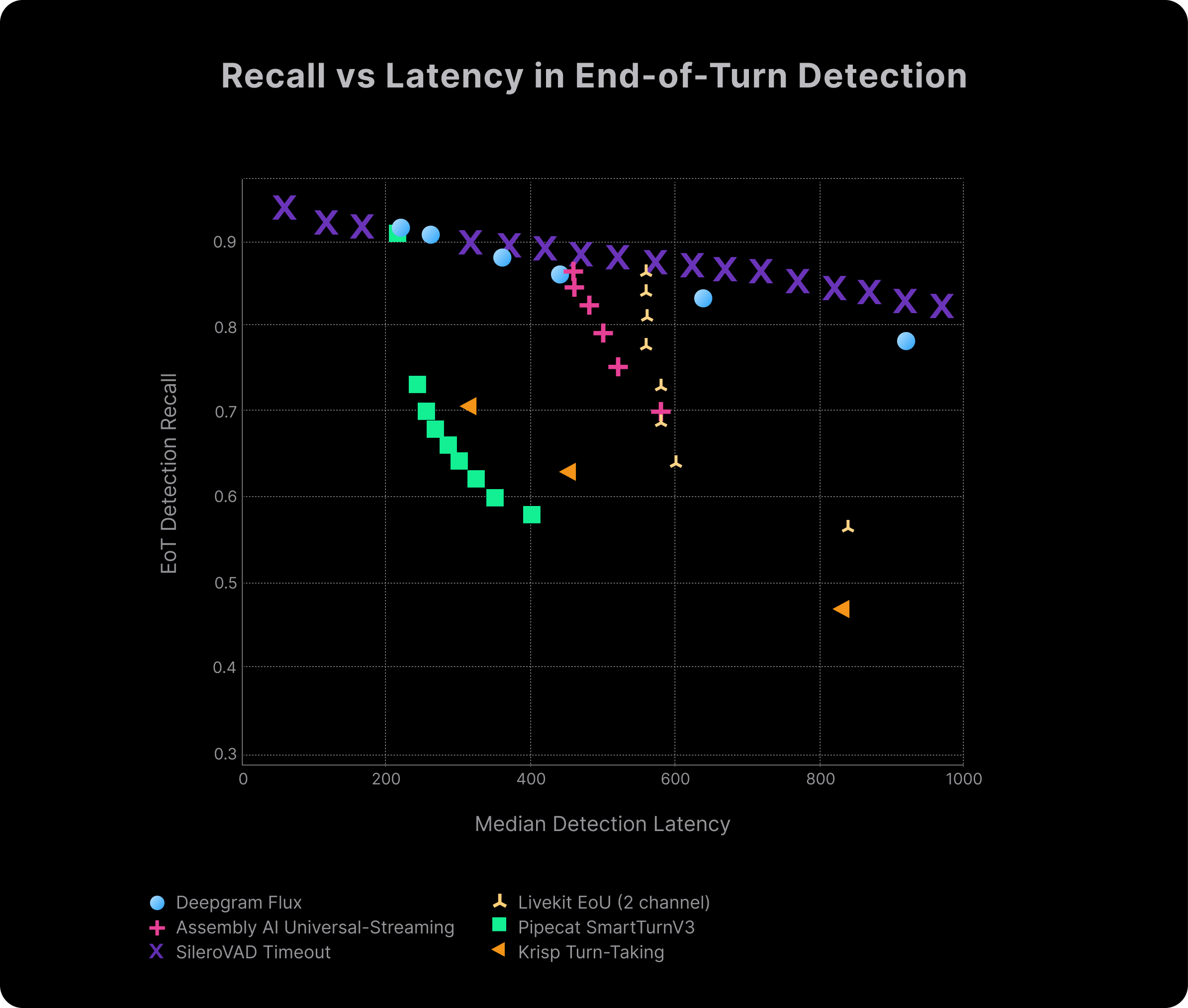

The benchmarks tell the story:

- Precision: When Flux decides a speaker is finished, it’s right far more often than other systems, avoiding premature cut-offs.

- Recall: Flux still catches almost every true end-of-turn, even at much lower latencies than other systems.

- F1: The balance of both shows why Flux feels natural: high accuracy across the board without trading speed for reliability or vice versa.

The plots above show end-of-turn (EoT) detection precision, F1, and recall as a function of median detection latency, defined as the median audio time elapsed between the user finishing speaking and a successful detection. Flux leads on precision, recall, and F1 at ultra-low latency. Bespoke turn detection models layered on top of ASR do improve turn detection, but they still run sequentially and cannot yet match Flux’s balance of speed and accuracy.
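
For readers who want to apply this kind of scoring to their own endpointing system, the sketch below computes EoT precision, recall, F1, and median latency over labeled pauses. The scoring scheme is a simplifying assumption, not necessarily the exact benchmark protocol: each pause is labeled either as a true end of turn or as a mid-turn pause, a detection during a mid-turn pause counts as a false cutoff, a missed true end counts against recall, and latency is measured only on successful detections, matching the definition above. `PauseEvent` and `eot_metrics` are hypothetical names.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class PauseEvent:
    """One labeled pause in the audio (hypothetical evaluation schema)."""
    is_true_eot: bool               # did the speaker actually finish their turn here?
    fired_after_s: Optional[float]  # seconds after speech stopped when the detector fired; None if it never fired


def eot_metrics(events: List[PauseEvent]) -> dict:
    tp_latencies = [e.fired_after_s for e in events
                    if e.is_true_eot and e.fired_after_s is not None]
    fp = sum(1 for e in events if not e.is_true_eot and e.fired_after_s is not None)  # premature cutoffs
    fn = sum(1 for e in events if e.is_true_eot and e.fired_after_s is None)          # missed turn ends
    tp = len(tp_latencies)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Latency is measured only over successful detections.
    median_latency_s = median(tp_latencies) if tp_latencies else None
    return {"precision": precision, "recall": recall, "f1": f1,
            "median_latency_s": median_latency_s}


events = [
    PauseEvent(True, 0.30),
    PauseEvent(True, 0.50),
    PauseEvent(True, None),   # missed end of turn
    PauseEvent(False, 0.20),  # fired during a mid-sentence pause: a false cutoff
    PauseEvent(False, None),  # correctly waited through a mid-sentence pause
    PauseEvent(True, 0.40),
]
print(eot_metrics(events))
```

Sweeping a detector's threshold and rerunning `eot_metrics` yields one (latency, accuracy) point per configuration, the same kind of per-configuration spread discussed next.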

Also notable is the spread of points for each model: each point reflects a different configuration, illustrating the meaningful latency/accuracy tradeoffs developers can make with Flux and with existing alternatives. Flux achieves strong performance while remaining highly configurable, giving developers ample freedom to control their system’s behavior.

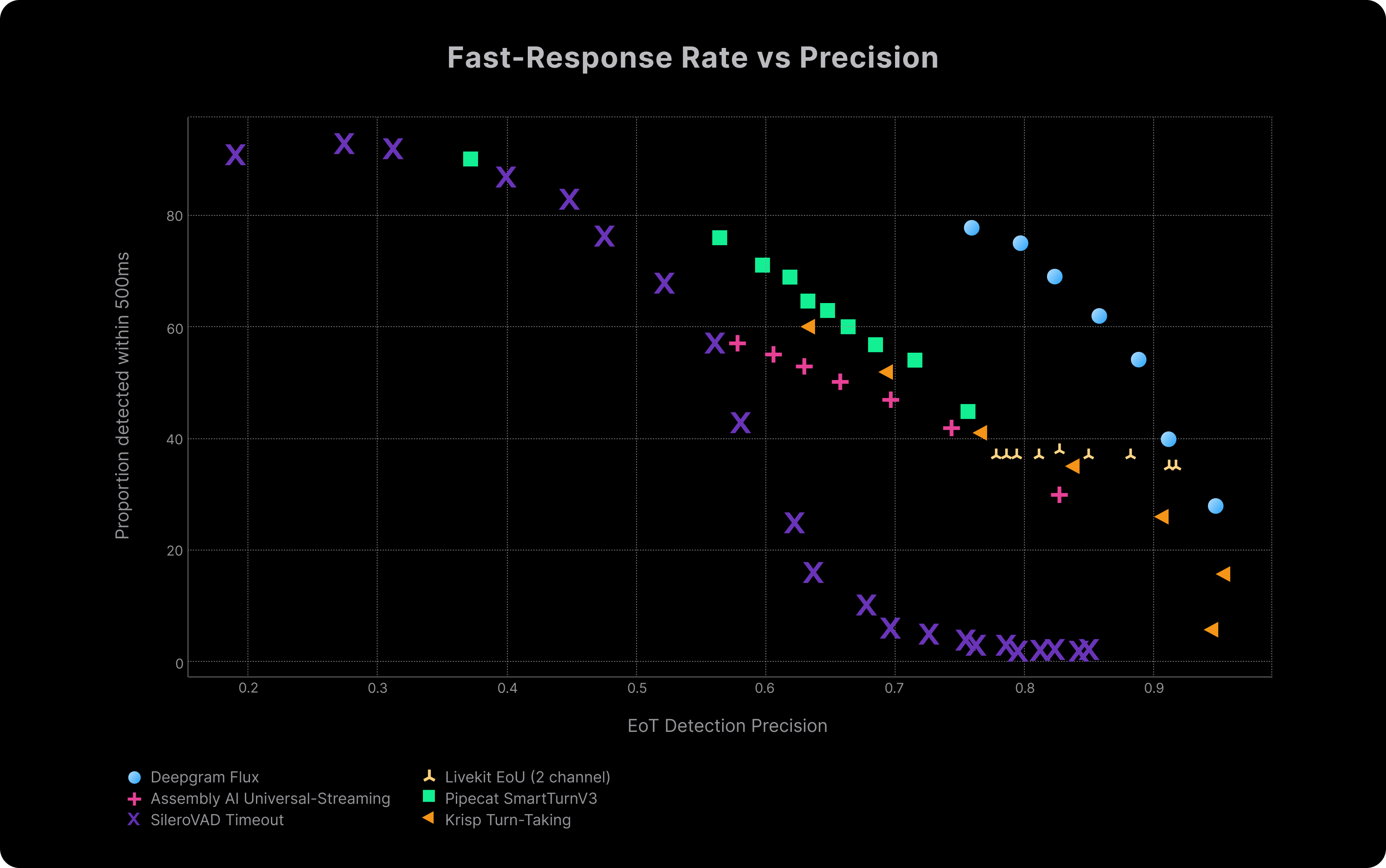

But median latency is not everything. As every voice AI developer knows, user engagement still lapses if, say, almost every other turn comes with a 5-second delay. So we also evaluated the spread of latencies each model exhibits.

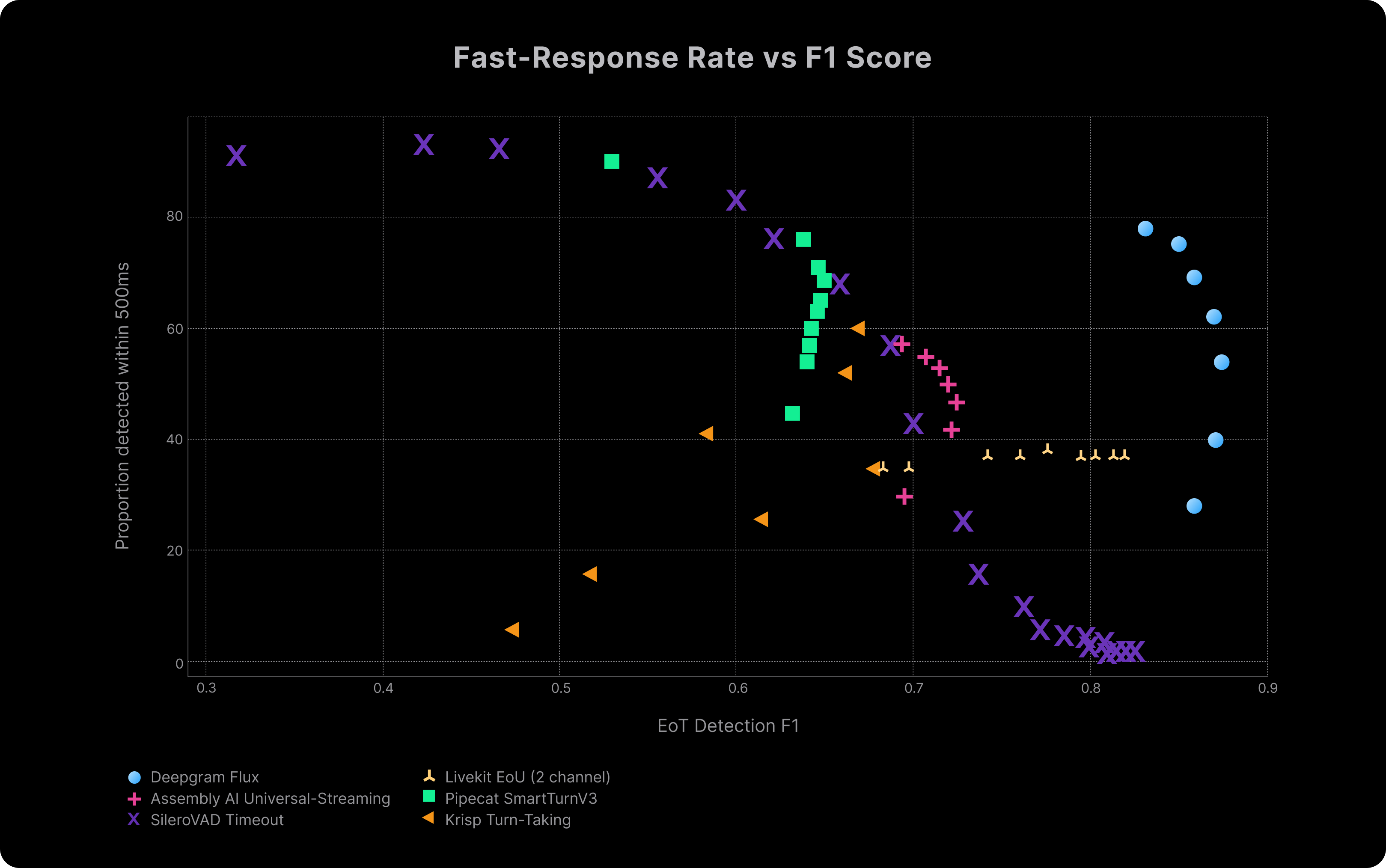

The plots above reveal why Flux feels fundamentally different in real conversations. Each plots the percentage of detections that arrive within 500 ms (fast enough to feel responsive) against an accuracy metric. This creates three distinct performance regions: upper left (fast but inaccurate), lower right (slow but accurate), and upper right (both fast and accurate, the ideal zone). Flux dominates the upper right quadrant on both precision and F1. At the slow end of the distribution, Flux typically achieves a p90 latency of 1 second and a p95 latency of 1.5 seconds, so it stays fast enough to feel natural even at its slowest.
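
These distribution statistics are straightforward to compute for any endpointing system. A minimal sketch, assuming you have logged one detection latency in milliseconds per turn; `latency_profile` is a hypothetical helper, and the nearest-rank percentile method is one common choice among several.

```python
import math
from typing import Dict, Sequence


def percentile_nearest_rank(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def latency_profile(latencies_ms: Sequence[float], fast_ms: float = 500.0) -> Dict[str, float]:
    """Summarize how often detections are fast, plus the tail of the distribution."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    share_fast = sum(1 for x in latencies_ms if x <= fast_ms) / len(latencies_ms)
    return {
        "share_within_fast": share_fast,
        "p50_ms": percentile_nearest_rank(latencies_ms, 50),
        "p90_ms": percentile_nearest_rank(latencies_ms, 90),
        "p95_ms": percentile_nearest_rank(latencies_ms, 95),
    }


# Made-up per-turn latencies (ms) for a hypothetical endpointing system.
sample = [200, 250, 300, 320, 350, 400, 450, 600, 900, 5000]
print(latency_profile(sample))
```

In this made-up sample the p50 of 350 ms looks excellent, yet one turn in ten waits 5 seconds, which is why the share of detections within 500 ms and the p90/p95 figures matter alongside the median.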

In plain terms: Flux knows when someone is truly done speaking, reacts faster, and avoids the awkward trade-off between cutting people off and leaving them hanging. Other systems force developers to choose between interrupting users or leaving robotic pauses - Flux eliminates that impossible choice.

"Flux is a really fantastic model. It completely transforms how we manage the entire transcription and turn management pipeline. The fused model with both transcription and turn detection gives not only reliable, but ridiculously fast, end of turn predictions. It even does great with one-word responses and handles uncertainty like a human would. The variability of end of turn confidence shows it's dynamically evaluating confidence with each passing chunk, which is exactly what you want." Stu Kennedy CEO at Continuata

Nova-3 Level Accuracy, Plus Keyterm Precision

Another challenge for many real-time transcription systems is balancing speed against transcription quality. Generally, models that commit to outputting words as quickly as possible produce error-prone, inconsistent transcripts. Alternatively, models may update transcripts less frequently, gaining accuracy at the cost of latency.

Flux uses a novel approach to streaming that allows it to achieve low latency without sacrificing accuracy. In particular, while Flux is built first and foremost for conversation, it doesn’t just outperform at turn-taking; it also delivers the same transcription quality you expect from Nova-3, our best-in-class STT model.

When evaluated against our comprehensive STT benchmark suite, Flux delivers transcription performance that matches Nova-3 Streaming while significantly outpacing competing solutions, particularly on conversational audio. On the same real-world benchmarking datasets we used to evaluate pure transcription, Flux performed nearly identically to Nova-3 on word error rate (WER) and word recognition rate (WRR). The model also maintains Nova-3's strong keyterm recognition via keyterm prompting, so domain-specific terminology is captured even in conversational settings.
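
WER is the standard yardstick for these comparisons: the minimum number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference, divided by the number of reference words. A minimal sketch of that definition follows. Real benchmarks also normalize text (punctuation, casing, numerals) before scoring; this version only lowercases and splits on whitespace, and it is not Deepgram's scoring pipeline.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("WER is undefined for an empty reference")
    # dist[i][j] = minimum word edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("please reset my account password",
                      "please reset the account password"))  # one substitution in five words: 0.2
print(word_error_rate("i need to think about that",
                      "i need think about that"))  # one deletion in six words
```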

Flux matches Nova-3 on transcription accuracy, delivering nearly identical WER and WRR while adding real-time conversational intelligence.

Meanwhile, on conversational audio, Flux achieved the lowest WER among the models evaluated, and this holds across a range of turn detection thresholds. The plot below depicts WER as a function of median EoT latency. As the eot_threshold parameter is adjusted to make turn detection more aggressive, Flux's transcription quality stays remarkably stable: WER remains consistently low even at faster detection speeds, with only minimal quality loss at the most aggressive settings.

Flux achieves much lower word error rates than other models while also delivering faster end-of-turn detection. This shows developers do not need to trade accuracy for responsiveness.

The result: Flux gives you Nova-3-class accuracy plus conversational intelligence in one model. You don’t have to choose between enterprise-grade transcription and natural turn-taking. Flux delivers both.

“At Reppls, our AI agents must conduct live interviews in real time. Flux improved how they decide when to produce transcripts, catching the right moments to return speech far better than any other model I’ve used. The result is better timing, fewer cutoffs, and cleaner transcripts. Flux now does the heavy lifting we previously had to handle with multiple LLM requests, making conversations flow much smoother and feel more natural.” Valentine H. CTO at Reppls

Real Agent Performance: The VAQI Benchmark

In an earlier post we introduced the Voice-Agent Quality Index (VAQI) to quantify how a voice agent feels to use. VAQI blends three timing behaviors that shape conversational experience: interruptions, missed reply windows, and responsiveness.

For this evaluation, we tested on significantly harder audio: more verticals, louder background noise, denser disfluencies, and trickier pauses – the kind of customer service scenarios that stress real production systems.

Flux ranks first, delivering fewer interruptions, faster responses, and consistent behavior even on messy audio with background noise and natural disfluencies. The fused architecture eliminates the tradeoff: you don't have to choose between trust and engagement anymore.

Beyond that, Flux provides these performance benefits without sacrificing control over your LLM, TTS, and orchestration logic. That's exactly the optionality and performance that teams building production cascaded agents need.

"Deepgram’s innovative Flux model redefines Speech-to-Text by integrating turn detection, leveraging acoustic and textual cues to accurately determine turn endings. We’re excited to support Flux on LiveKit’s agent platform, empowering voice AI developers with access to state-of-the-art models." David Zhao CTO and Co-Founder at LiveKit

Simpler Development and Integration

Building voice agents today is messy. Pipelines stack ASR, VAD, endpointing, and client-side logic, forcing developers to reconcile thresholds, timers, and cancellation rules instead of focusing on conversation. And even after stitching all that together, traditional ASR still floods you with partial transcripts, leaving you to guess when text is “final enough” to act on.

Flux eliminates this complexity entirely.

Instead of managing fragments and timing logic, Flux delivers turn-complete transcripts tied directly to conversational events. You build on coherent units of speech, not a flood of changing partials. Flux exposes two core conversation-native events:

- StartOfTurn → User begins speaking, trigger barge-in if needed

- EndOfTurn → High confidence the turn is complete, deliver response
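
Here is a minimal sketch of how an agent might react to these two events. The message shape (a dict with `event` and `transcript` keys) and the `TurnHandler` class are hypothetical stand-ins, not the actual Flux payload or SDK; consult the Flux documentation for the real wire format.

```python
from typing import Callable, Dict, List


class TurnHandler:
    """Minimal agent-side reaction to StartOfTurn / EndOfTurn events.

    The message shape ({"event": ..., "transcript": ...}) is a hypothetical
    stand-in; the real Flux payload format is described in the Flux docs.
    """

    def __init__(self, respond: Callable[[str], None], stop_playback: Callable[[], None]) -> None:
        self.respond = respond
        self.stop_playback = stop_playback
        self.agent_speaking = False

    def on_message(self, msg: Dict[str, str]) -> None:
        event = msg.get("event")
        if event == "StartOfTurn":
            # The user started talking: barge in by cutting off any agent audio.
            if self.agent_speaking:
                self.stop_playback()
                self.agent_speaking = False
        elif event == "EndOfTurn":
            # The turn is complete and its transcript is final: respond to it.
            self.respond(msg.get("transcript", ""))
            # Simplification: assume agent playback starts immediately.
            self.agent_speaking = True


log: List[str] = []
handler = TurnHandler(respond=lambda text: log.append(f"respond: {text}"),
                      stop_playback=lambda: log.append("stop"))
for message in [
    {"event": "StartOfTurn"},
    {"event": "EndOfTurn", "transcript": "check my balance"},
    {"event": "StartOfTurn"},  # user interrupts the agent's reply
]:
    handler.on_message(message)
print(log)  # ['respond: check my balance', 'stop']
```

Because the transcript arrives together with EndOfTurn in this model, the handler has no separate step that waits for partials to settle before responding.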

These events come from the same fused representation that produces transcripts, so they are consistent by construction. No race conditions between separate systems, no guesswork about timing. Configuring these conversational events is straightforward; developers only need to set:

- eot_threshold: confidence level required to trigger EndOfTurn (default = 0.7)

- eot_silence_threshold_ms: fallback silence duration that forces a turn end (default = 5000 ms)

Because EndOfTurn fires within 1.5 s in 95% of cases, well before the default 5000 ms silence fallback, most developers achieve their desired latency/accuracy balance by tuning eot_threshold alone.
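
As a mental model only, and not a description of how Flux computes end-of-turn internally, the two settings can be read as: fire EndOfTurn when the model's end-of-turn confidence reaches eot_threshold, or when silence reaches eot_silence_threshold_ms, whichever comes first. The sketch below simulates that rule over a hypothetical stream of (time_ms, confidence, silence_ms) updates. `EotConfig`, `first_end_of_turn`, and all the numbers are illustrative assumptions, not real Flux output.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class EotConfig:
    eot_threshold: float = 0.7            # confidence required to trigger EndOfTurn
    eot_silence_threshold_ms: int = 5000  # silence fallback that forces EndOfTurn


def first_end_of_turn(
    updates: Iterable[Tuple[int, float, int]], cfg: EotConfig
) -> Optional[Tuple[int, str]]:
    """Return (time_ms, reason) for the first update that would end the turn.

    Each update is (time_ms, eot_confidence, silence_ms), a hypothetical
    per-chunk view used only to illustrate how the two settings interact.
    """
    for time_ms, confidence, silence_ms in updates:
        if confidence >= cfg.eot_threshold:
            return time_ms, "confidence"
        if silence_ms >= cfg.eot_silence_threshold_ms:
            return time_ms, "silence_fallback"
    return None


# Illustrative numbers only, not real Flux output: "I need to... think about that."
updates = [
    (200, 0.10, 0),
    (400, 0.35, 200),   # thinking pause after "I need to..."
    (600, 0.45, 400),
    (800, 0.15, 0),     # speech resumes
    (1000, 0.60, 100),
    (1200, 0.82, 300),  # "...think about that." followed by silence
]
print(first_end_of_turn(updates, EotConfig()))                   # (1200, 'confidence')
print(first_end_of_turn(updates, EotConfig(eot_threshold=0.4)))  # (600, 'confidence'): cuts in mid-pause

# A turn where confidence never gets high enough: the silence fallback ends it.
hesitant = [(500, 0.20, 0), (3000, 0.50, 2500), (5600, 0.55, 5100)]
print(first_end_of_turn(hesitant, EotConfig()))                  # (5600, 'silence_fallback')
```

In this model, raising eot_threshold trades a little latency for fewer cutoffs and lowering it does the reverse, while the silence fallback only matters for the rare turns where confidence never climbs.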

And that’s it! Instead of bolting together multiple modules, you integrate once against Flux's conversational interface and gain natural dialogue flow immediately.

"Flux was essentially a drop-in replacement for our existing voice agent architecture and the ultra-low latency is an absolute game-changer for us. The state machine is very intuitive and cuts out complexity we were handling on our side for turn-taking and speculative response generation. We're looking forward to rolling this out to our voice agent customers as soon as possible." -Reuben Dubester ML Engineer & Cognitive Architect at Lindy

When Fast Isn’t Fast Enough

While most developers will find Flux's end-of-turn detection prompt enough to support natural conversation, not all voice AI workflows are the same. For more complex and expensive workflows, it can be worthwhile to start work speculatively, such as calling an LLM before the turn end is confirmed, in order to minimize response latency.

To support such workflows, Flux introduces the notion of “eager” end-of-turn detection. Developers can optionally configure an eager_eot_threshold, which introduces two new conversation events:

- EagerEndOfTurn → Medium confidence for speculative response generation

- TurnResumed → User continued talking, cancel prepared response

With eager_eot_threshold set to 0.3–0.5, you can expect EagerEndOfTurn to fire 150–250 ms earlier than EndOfTurn, at the cost of 50–70% more LLM calls. For latency-critical applications, that trade-off often makes sense. Learn more in the docs.
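
The eager events imply a small state machine on the agent side. Below is a minimal sketch under one assumed policy: start an LLM call on EagerEndOfTurn, discard the draft on TurnResumed, and on EndOfTurn reuse the draft only if the final transcript matches the one it was built from, otherwise regenerate. `SpeculativeResponder`, the `generate` callback, and the message shape are hypothetical, not Flux's SDK; the `llm_calls` counter makes the cost side of the trade-off visible.

```python
from typing import Callable, Dict, List, Optional


class SpeculativeResponder:
    """Hypothetical handling of EagerEndOfTurn / TurnResumed / EndOfTurn."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self.generate = generate               # stand-in for an LLM call
        self.draft: Optional[str] = None       # speculative reply, if any
        self.draft_for: Optional[str] = None   # transcript the draft was built from
        self.llm_calls = 0
        self.replies: List[str] = []

    def _call_llm(self, transcript: str) -> str:
        self.llm_calls += 1
        return self.generate(transcript)

    def _discard_draft(self) -> None:
        self.draft = None
        self.draft_for = None

    def on_message(self, msg: Dict[str, str]) -> None:
        event = msg.get("event")
        transcript = msg.get("transcript", "")
        if event == "EagerEndOfTurn":
            # Medium confidence: prepare a reply, but do not speak it yet.
            self.draft_for = transcript
            self.draft = self._call_llm(transcript)
        elif event == "TurnResumed":
            # The user kept talking: the prepared reply is stale.
            self._discard_draft()
        elif event == "EndOfTurn":
            if self.draft is not None and self.draft_for == transcript:
                reply = self.draft  # speculation paid off: reply is ready now
            else:
                reply = self._call_llm(transcript)  # transcript changed: regenerate
            self.replies.append(reply)
            self._discard_draft()


bot = SpeculativeResponder(generate=lambda text: f"reply to: {text}")
for message in [
    {"event": "EagerEndOfTurn", "transcript": "I need to"},
    {"event": "TurnResumed"},
    {"event": "EagerEndOfTurn", "transcript": "I need to reset my password"},
    {"event": "EndOfTurn", "transcript": "I need to reset my password"},
]:
    bot.on_message(message)
print(bot.replies, bot.llm_calls)  # ['reply to: I need to reset my password'] 2
```

Here one user turn cost two LLM calls instead of one because the first speculative draft was thrown away. Averaged over real traffic, extra calls like this are the source of the 50–70% overhead quoted above, paid in exchange for having the reply ready the moment EndOfTurn arrives.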

"At Jambonz, we know how hard it is to build great voice agents. Flux solved the fundamental problem every voice AI developer faces - knowing when someone is actually done talking. The EagerEndOfTurn feature is particularly clever - it lets agents start generating responses speculatively, which can shave hundreds of milliseconds off response time. We're excited to bring Flux into the Jambonz ecosystem and see what our community builds with it." -Dave Horton CEO at Jambonz.

What’s Next

Flux is just the beginning of our conversational AI roadmap. Here are a few areas we are already working on:

- Self-hosted support: Bringing Flux to self-hosted environments for teams that need full control over infrastructure.

- Expanded configuration options: Richer per-turn controls for context, keyterms, and end-of-turn thresholds.

- Additional language support: Extending CSR beyond English with multilingual and monolingual expansion.

- And more: Additional audio format support, word-level timestamps, additional SDK support, backchanneling identification, formatting, selective listening.

We are committed to continuously improving Flux based on developer feedback and real-world usage. In parallel, our research team will be publishing a series of technical deep dives, the Flux Chronicles, covering topics such as end-of-turn modeling, accelerated inference, keyterm boosting, and conversational state integration.

Try Flux Today

To celebrate the launch, Flux is free all October, with up to 50 concurrent connections at production scale. We call it OktoberFLUX. The goal is simple: give every developer the freedom to build and scale, with no cost and plenty of headroom.

Flux is available now in the Deepgram API, with partner integrations and developer resources to get you started right away:

- Launch partners: Jambonz, Vapi, LiveKit, Pipecat, and Cloudflare

- Try the demo

- Get started with Flux

- Sign up for an API key

- Python SDK

- Join our Discord community

Whether you’re integrating Flux into your favorite voice agent platform or starting fresh, the path is straightforward: drop in Flux, wire up conversational events, and ship production-ready agents in weeks, not months.