Information Retrieval: Which LLM is best at looking things up?

If you know me, you know I love AI competitions.

A few months ago, I tested which AI Copilot is the best at coding. More recently, I reviewed the stats of AI speech recognition models to see which one reigns supreme. And now? It’s time to see which AI is the best at retrieving information for you.

It all comes from this paper by researchers at Stanford, and I was one of them. I’ll admit that this was simply a fun project that a couple of friends and I wanted to pursue. Once we found a class that gave us the opportunity to code up this idea for some credits, we jumped at the chance.

So without further ado, let’s hop into it.

🔎 Information Retrieval: When an AI learns to look stuff up

Assistants like Siri, Alexa, Cortana, and Google Home are all powered by a few AI models under the hood. There’s the speech-recognition model, the natural language generation model, the text-to-speech model, and so on. For this article, however, I want to take a look at the Information Retrieval model that these assistants use to search for data for you.

See, Alexa doesn’t know what the weather forecast is off the top of her head. Neither does Siri for that matter. Rather, these AI assistants practice two very important skills whenever they talk to you:

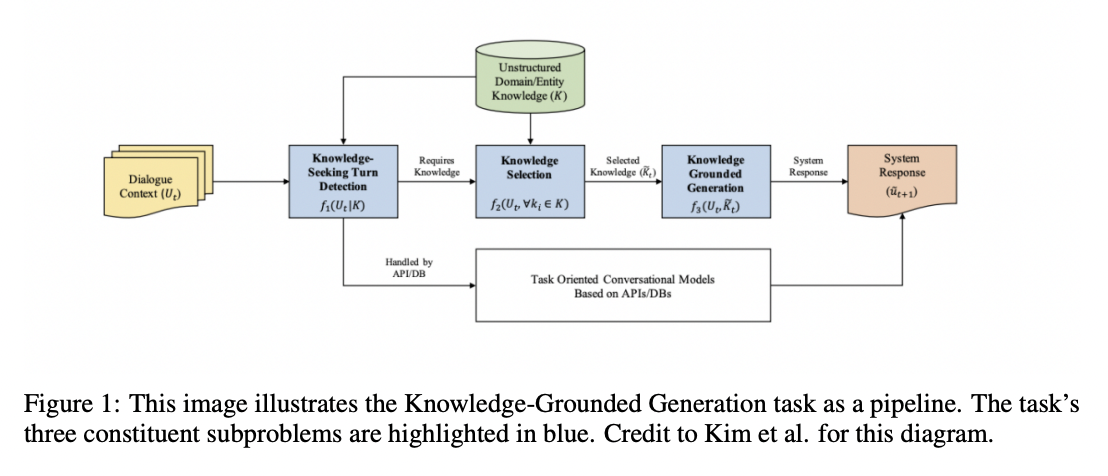

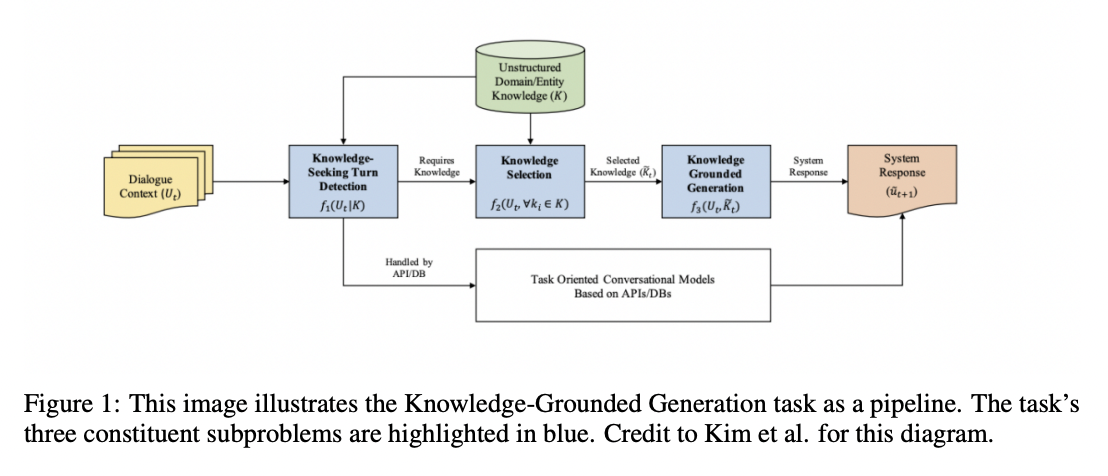

Knowledge-Seeking Turn Detection: Figuring out when it’s appropriate or necessary to look up information.

Knowledge Selection: Actually finding that information and giving it to you.

These two skills are part of a larger pipeline known as “Knowledge-Grounded Generation,” which is one way to accomplish the task of Information Retrieval. A full illustration of this pipeline can be found in the image below.

Long story short, if you ask an AI assistant a question like “Can you tell me a joke?”, it will probably just spew out a one-liner that it already has in its head. On the other hand, if you ask the assistant “What’s the weather in Austin going to be like next week?”, the AI will have to look up that information in some external database, much in the same way that you would pull out your phone and check the weather app.

See, there are some questions that the AI can answer straight away, but there are other questions that the AI will need to look up the answers for. The process of determining which questions are which is called “Knowledge-Seeking Turn Detection.”

And once the AI thinks to itself, “Yes, I indeed need to look up the answer to this question,” it still has to find the correct answer within the correct database. This process is called “Knowledge Selection.” In our weather example above, the machine would have to work out that (1) it has to go to a weather database (as opposed to something like Yelp or a list of your phone contacts), and (2) it has to find the correct answer, matching both the city and the day, within that weather database.
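To make the two steps concrete, here is a toy sketch of the weather example. Everything in it is hypothetical: the tiny in-memory “databases,” the keyword cues, and the function names are stand-ins chosen for illustration. A real assistant would replace the keyword checks with trained models (like the ones tested below) and the dictionaries with external services.

```python
from typing import Optional

# Hypothetical toy "databases"; a real assistant would call external services.
WEATHER_DB = {
    ("austin", "monday"): "Sunny, high of 95°F",
    ("austin", "tuesday"): "Thunderstorms, high of 88°F",
    ("miami", "wednesday"): "Humid, high of 91°F",
}
CONTACTS_DB = {"mom": "555-0100"}

# Hypothetical keyword cues; a real system would use a trained classifier instead.
KNOWLEDGE_CUES = ("weather", "forecast", "phone number")


def needs_lookup(utterance: str) -> bool:
    """Knowledge-seeking turn detection (toy version): does this turn need outside data?"""
    text = utterance.lower()
    return any(cue in text for cue in KNOWLEDGE_CUES)


def select_knowledge(utterance: str) -> Optional[str]:
    """Knowledge selection (toy version): pick a database, then the matching entry."""
    text = utterance.lower()
    if "weather" in text or "forecast" in text:
        # (1) Route to the weather database, (2) match both the city and the day.
        for (city, day), forecast in WEATHER_DB.items():
            if city in text and day in text:
                return forecast
        return None
    # Otherwise fall back to the contacts database.
    for name, number in CONTACTS_DB.items():
        if name in text:
            return number
    return None


def respond(utterance: str) -> str:
    if not needs_lookup(utterance):
        # No lookup needed: the language model answers from what it already "knows".
        return "<answered directly by the language model>"
    fact = select_knowledge(utterance)
    return fact if fact is not None else "Sorry, I couldn't find that."


print(respond("Can you tell me a joke?"))
print(respond("What's the weather in Austin going to be like on Tuesday?"))
```

Note that the second step really is two decisions: choosing the right database and then finding the right entry inside it. Getting the city right but the day wrong still counts as a wrong answer.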

And while this entire process is fairly intuitive for a human to carry out, writing the code that enables a machine to do the exact same thing is rather complex. Nevertheless, some brilliant minds have come up with a solution: train an AI, finetune it, and stick it inside the stack of a virtual assistant.

🏆 Meet our five LLM competitors…

The five AI models we decided to test were BERT, BART, RoBERTa, GPT-2, and XLNet. Each is special in its own way, but if you want a quick refresher on what these models do and how they work, check out the videos below:

In this Short, we talk about BERT’s training process:

And in this Short, we explain what the acronym “GPT” even means:

Long story short, each of these models was trained in a unique way, so each one “thinks” about language in its own manner. As a result, some models perform much, much better than others on knowledge-based tasks, meaning that some of these AI models are better suited than others to be the brain of Siri and Alexa.

Which ones? Well, let’s jump to the results of the paper!

👓 Which AI is the best looker-upper?

🔭 Test 1: Knowledge-Seeking Turn Detection

In my opinion, the best way to explain an AI test is to have you, as a human, take the test yourself. Don’t worry, this will be quick, and you’ll probably get an A+. Here it is:

For each of the following questions, write “Yes” if you would need to look up the answer and write “No” if you can answer the question without needing any external resources.

“Hey! How are you doing today?”

“What time does McDonald’s close today?”

“Is there a pharmacy near me right now?”

“What’s your name?”

“How much wood can a woodchuck chuck if a woodchuck could chuck wood?”

Got your answers? Great!

The solution is as follows: No, Yes, Yes, No, No.
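Under the hood, this quiz is a binary classification task: each turn gets a Yes/No label, and a model is scored on how many turns it labels correctly. The sketch below scores a hypothetical keyword-based detector against the answer key above. The cue list is an assumption made up for illustration, not how BERT works; BERT learns its own signal from labeled examples.

```python
# The quiz above, paired with its answer key (True = "Yes, needs a lookup").
QUIZ = [
    ("Hey! How are you doing today?", False),
    ("What time does McDonald's close today?", True),
    ("Is there a pharmacy near me right now?", True),
    ("What's your name?", False),
    ("How much wood can a woodchuck chuck if a woodchuck could chuck wood?", False),
]

# Hypothetical hand-picked cues; a model like BERT learns from labeled data instead.
LOOKUP_CUES = ("what time", "near me", "open", "close", "weather")


def is_knowledge_seeking(turn: str) -> bool:
    text = turn.lower()
    return any(cue in text for cue in LOOKUP_CUES)


def accuracy(examples: list[tuple[str, bool]]) -> float:
    # Fraction of turns where the predicted Yes/No matches the answer key.
    correct = sum(is_knowledge_seeking(turn) == label for turn, label in examples)
    return correct / len(examples)


print(accuracy(QUIZ))  # 1.0 on these five turns
print(is_knowledge_seeking("Where's the nearest pharmacy?"))  # False, which is a miss
```

The rules ace our five-question quiz but miss a simple rephrasing like “Where’s the nearest pharmacy?”, which is exactly why trained language models, rather than hand-written rules, are used for this step.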

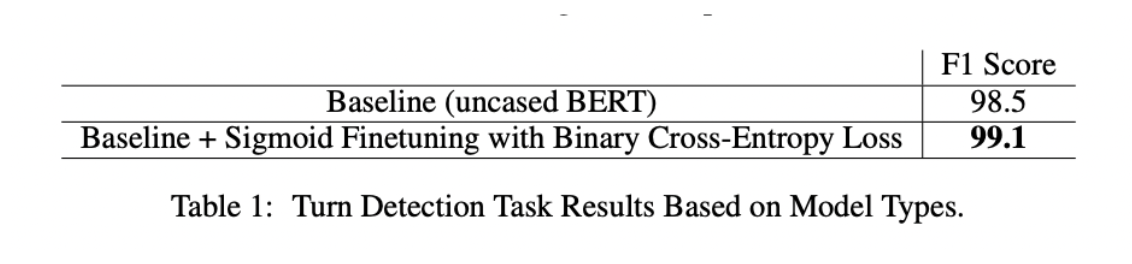

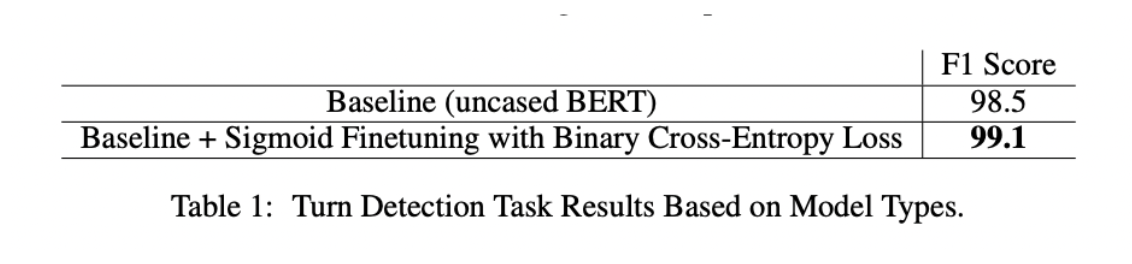

And to determine how good our LLMs are at Knowledge-Seeking Turn Detection, we threw a few thousand questions like the ones above at BERT and a finetuned version of BERT. The results are as follows:

As we can see, BERT straight out of the box answers correctly about 98.5% of the time. However, a little bit of finetuning bumps that number up to 99.1%.
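A 0.6-point jump sounds small, but it looks bigger when you count mistakes instead of correct answers. The quick calculation below uses only the two percentages reported above and treats “answers correctly X% of the time” as plain accuracy, so the error rate is simply one minus that number.

```python
base_acc, tuned_acc = 0.985, 0.991  # out-of-the-box vs. finetuned BERT (from above)

base_err = 1 - base_acc    # about 0.015, i.e. roughly 15 mistakes per 1,000 turns
tuned_err = 1 - tuned_acc  # about 0.009, i.e. roughly 9 mistakes per 1,000 turns
relative_reduction = (base_err - tuned_err) / base_err

print(f"mistakes per 1,000 turns: {base_err * 1000:.0f} -> {tuned_err * 1000:.0f}")
print(f"relative error reduction: {relative_reduction:.0%}")  # 40%
```

In other words, finetuning cuts the number of misjudged turns by about 40%.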

But that’s the easy part. What about actually looking up information? Which model is the best there?

🧠 Test 2: Knowledge Selection

Dear reader, I’m not going to put you through another test. Fret not. Just know that this test involves making a machine look up the answers to various questions from a database. To put you through the same test would be to ask something like “What’s the weather in Miami like next Wednesday?” and then have you Google it. That’s no fun.
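One common way to frame knowledge selection is as ranking: score every candidate knowledge snippet against the question, then sort from most to least relevant. The sketch below does this with a deliberately naive word-overlap score, which is a swapped-in stand-in for the finetuned models in the paper; the snippets and their ids are made up for illustration.

```python
import re

# Hypothetical knowledge snippets; the real test used a much larger database.
SNIPPETS = {
    "k1": "Miami forecast for Wednesday: humid with afternoon storms.",
    "k2": "Miami Beach hotels allow pets for a small fee.",
    "k3": "Austin forecast for Wednesday: sunny and hot.",
    "k4": "Miami airport shuttles run every 20 minutes.",
}


def tokens(text: str) -> set[str]:
    # Lowercase the text and split it into alphanumeric words.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, snippet: str) -> int:
    # Stand-in scorer: count shared words. A finetuned model would instead
    # output a learned relevance score for each (query, snippet) pair.
    return len(tokens(query) & tokens(snippet))


def rank_snippets(query: str, snippets: dict[str, str]) -> list[str]:
    # Sort ids from most to least relevant; Python's sort is stable, so ties
    # keep their original order.
    return sorted(snippets, key=lambda k: overlap_score(query, snippets[k]), reverse=True)


ranking = rank_snippets("What's the weather in Miami like next Wednesday?", SNIPPETS)
print(ranking)
```

Even in this tiny example, the word “weather” never appears in the correct snippet (it says “forecast”), so the right answer wins by only one shared word. Lexical gaps like this are where a model that understands language, rather than matching strings, earns its keep.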

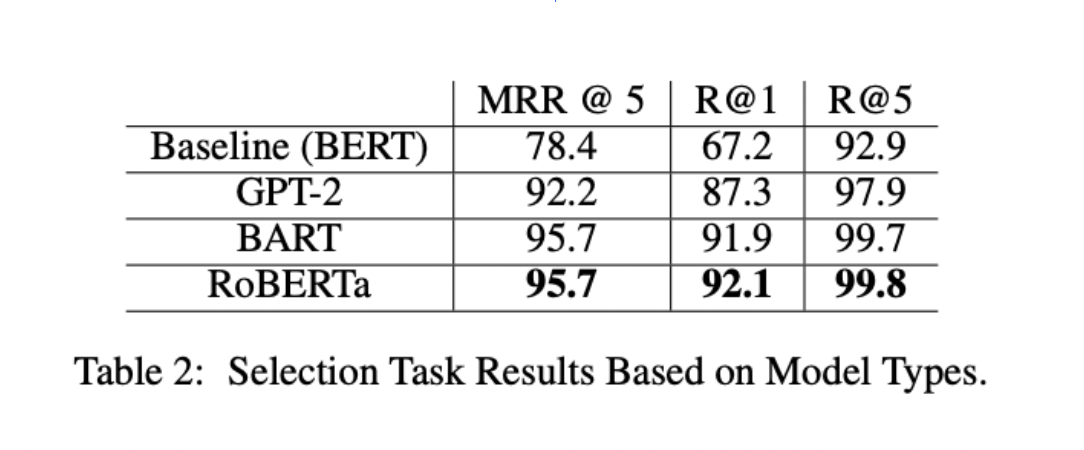

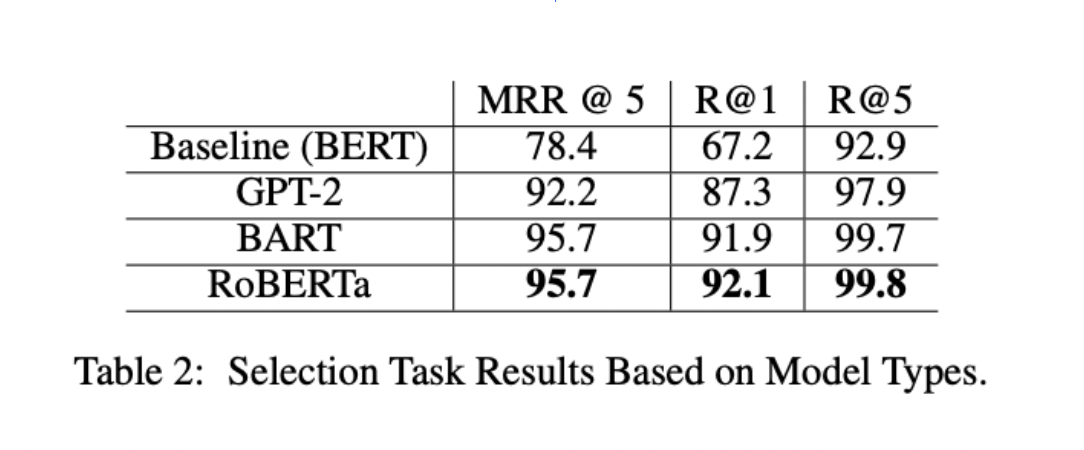

Nevertheless, we put our LLMs to the test, and here are the results:

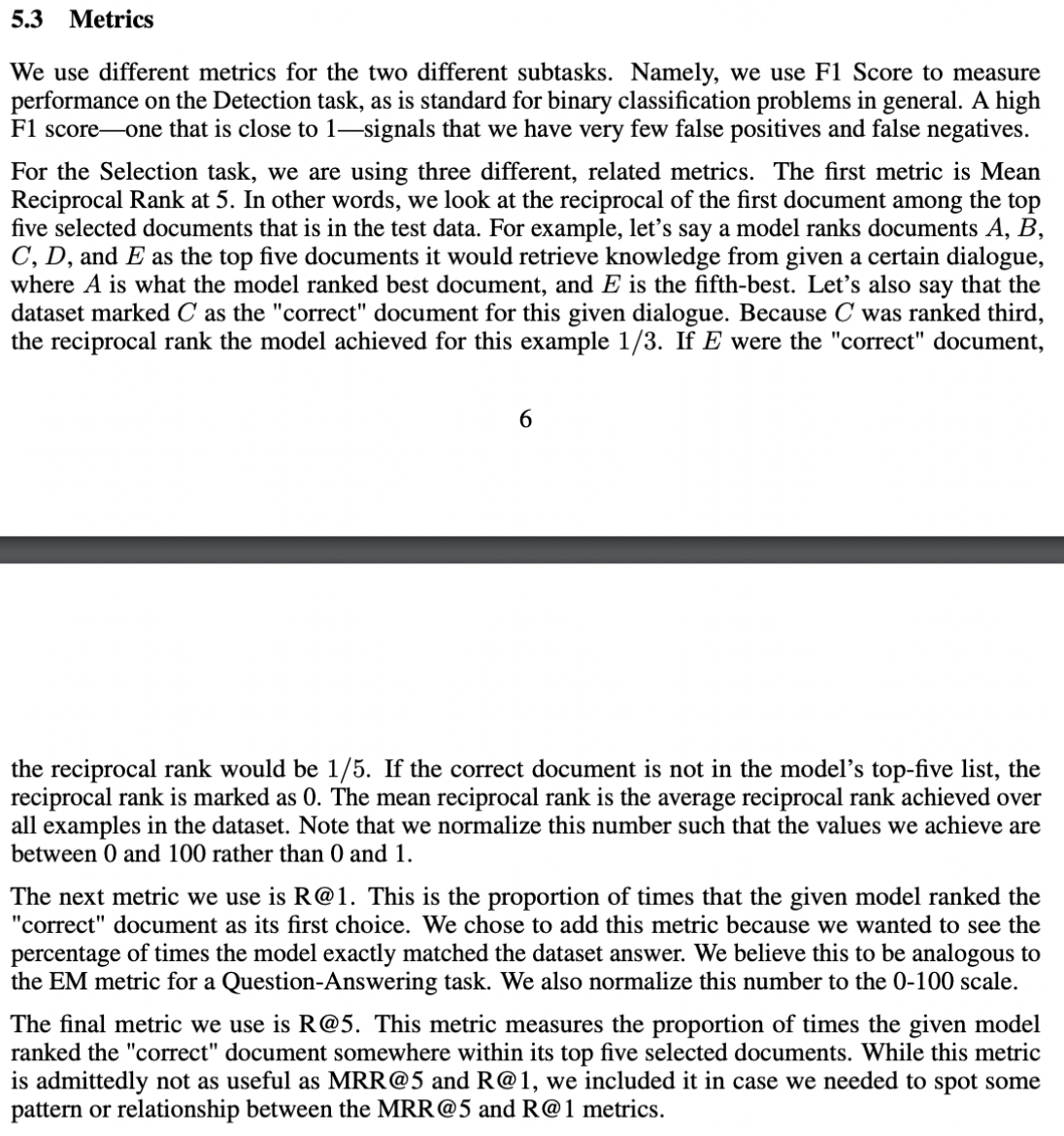

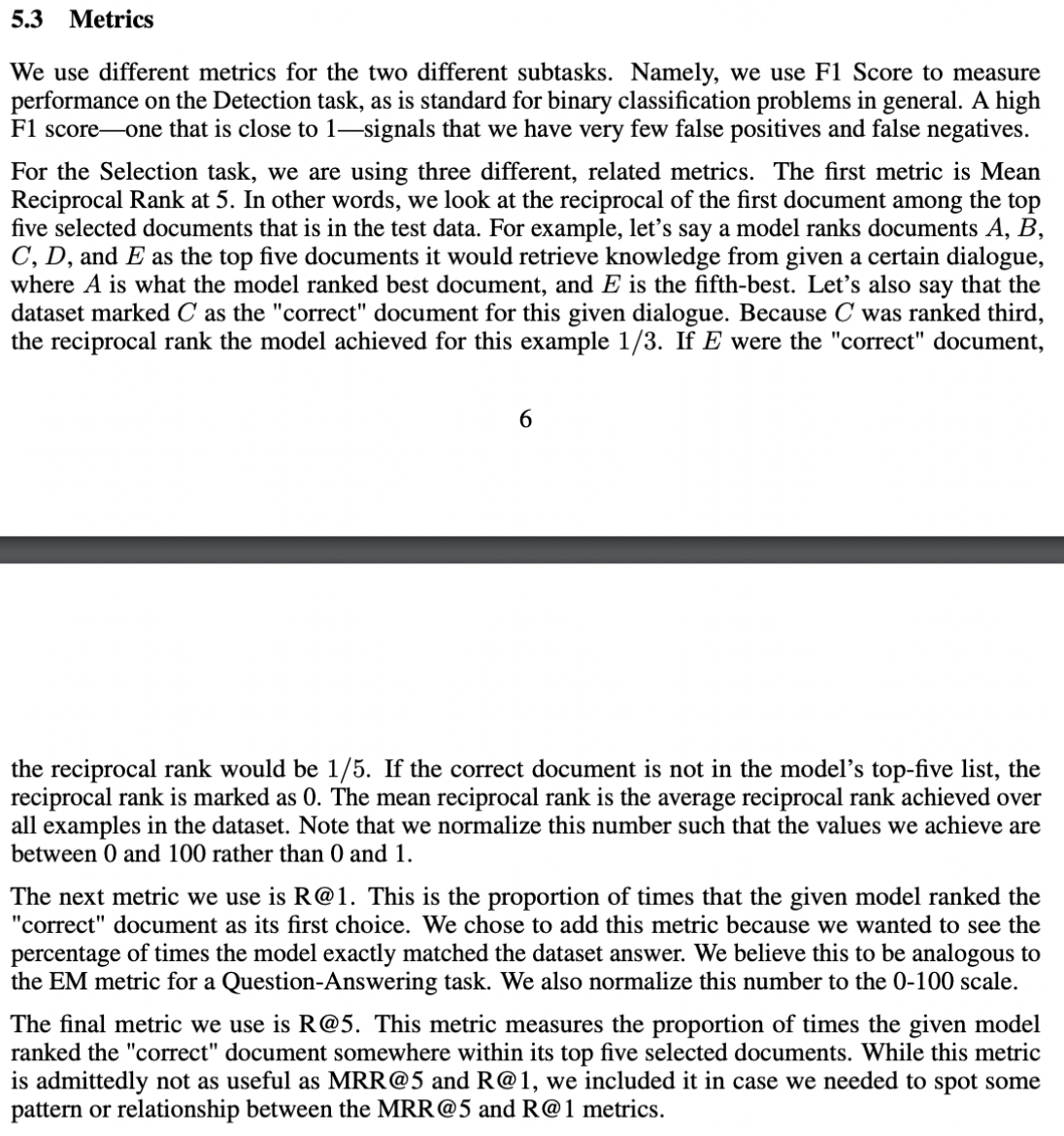

If you’d like to learn more about “MRR@5,” “R@1,” and “R@5,” you can check out the paper itself or read the screenshot below for reference.
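For a rough intuition, here is how these three metrics are usually computed when each question has exactly one correct snippet. This is a sketch of the standard textbook definitions, assumed (not confirmed) to match the paper’s exact formulation, and the three example queries are made up. R@k is the share of questions whose correct snippet lands in the model’s top k. MRR@5 averages 1/rank of the correct snippet, counting 0 when it falls below rank 5.

```python
def rank_of_gold(ranking: list[str], gold: str):
    """1-based position of the correct snippet, or None if it was not retrieved."""
    return ranking.index(gold) + 1 if gold in ranking else None


def recall_at_k(results: list[tuple[list[str], str]], k: int) -> float:
    """R@k: share of queries whose correct snippet appears in the top k."""
    hits = 0
    for ranking, gold in results:
        rank = rank_of_gold(ranking, gold)
        if rank is not None and rank <= k:
            hits += 1
    return hits / len(results)


def mrr_at_k(results: list[tuple[list[str], str]], k: int) -> float:
    """MRR@k: mean of 1/rank of the correct snippet, or 0 if it ranks below k."""
    total = 0.0
    for ranking, gold in results:
        rank = rank_of_gold(ranking, gold)
        if rank is not None and rank <= k:
            total += 1.0 / rank
    return total / len(results)


# Three made-up queries: (model's ranked snippet ids, id of the correct snippet).
results = [
    (["k1", "k4", "k2"], "k1"),                    # correct at rank 1
    (["k7", "k3", "k9"], "k3"),                    # correct at rank 2
    (["k5", "k6", "k8", "k2", "k0", "k4"], "k4"),  # correct at rank 6, outside the top 5
]
print(recall_at_k(results, 1))  # 1 of 3 queries
print(recall_at_k(results, 5))  # 2 of 3 queries
print(mrr_at_k(results, 5))     # (1 + 1/2 + 0) / 3 = 0.5
```

R@1 is the strictest of the three (only the top pick counts), R@5 is the most forgiving, and MRR@5 sits in between by giving partial credit for near misses.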

For our purposes here though, just know that RoBERTa won. It achieved the highest score across all three metrics, with an A in all categories (and even an A+ in the R@5 metric).

🤯 Conclusions

So what have we learned today? Well, we now know that RoBERTa was the strongest of the models we tested at retrieving information on your behalf. So if you’re building your own version of Google Home or Cortana, you’d be best served by using RoBERTa as your assistant’s underlying language model, at least within the realm of information retrieval and knowledge-grounded generation.

That said, my friends and I conducted this experiment nearly three years ago (as of the publication of this article). Surely, significant improvements have been made since then, so perhaps we should update our results. Or perhaps someone like you, dear reader, can replicate this experiment with AI models of your own choosing 😉

